With top-rated shows like “Orange is the New Black” and “House of Cards,” Netflix needs a well-versed integration test team to make sure each of its 80 million users is getting a great experience. In such a fast-paced environment, challenges are sure to surface.

Netflix’s product engineering integration test team recently looked at three major challenges that it has encountered while ensuring quality experiences for Netflix users. These challenges included testing and monitoring for “High Impact Titles (HITs),” A/B testing, and global launches.

HITs pose a problem for the test team because they are the shows with the highest visibility and need to be tested extensively. (For instance, “Orange is the New Black” drew 6.7 million viewers in the first 72 hours it was online.) This means the team tests for weeks before and long after a HIT’s launch date to make sure the platform is running smoothly.

Netflix tests HITs in two phases, each with its own strategy, to make sure they deliver a good member experience. The first phase starts before the title launches. Complex test cases are created to “verify that the right kind of titles are promoted to the right member profiles,” according to a Netflix tech blog post. Automated tests won’t work here since the “system is in flux,” so most of the testing during this phase is manual.

The testing doesn’t end there. In the second phase, after launch, Netflix engineers write tests that check whether the title continues to find its correct audience organically. Netflix said it has 600 hours of original programming in addition to all of its licensed content, which means manual testing alone is not enough in this phase.

“Once the title is launched, there are generic assumptions we can make about it, because data and promotional logic for that title will not change—e.g. number of episodes > 0 for TV shows, title is searchable (for both movies and TV shows), etc.,” according to the Netflix tech team. “This enables us to use automation to continuously monitor them and check if features related to every title continue to work correctly.”
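The two assumptions quoted above (a TV show has more than zero episodes, and every title is searchable) can be written as simple invariant checks. The following is a minimal sketch only: the `Title` record, its fields, and `check_launched_title` are hypothetical names invented for illustration, not Netflix’s actual data model or test code.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Title:
    """Hypothetical, simplified view of a launched title (not Netflix's model)."""
    name: str
    kind: str            # "movie" or "show"
    episode_count: int   # 0 for movies
    searchable: bool


def check_launched_title(title: Title) -> List[str]:
    """Return the violated post-launch assumptions; an empty list means healthy."""
    failures = []
    # TV shows must expose at least one episode once launched.
    if title.kind == "show" and title.episode_count <= 0:
        failures.append(f"{title.name}: show has no episodes")
    # Both movies and TV shows must be findable through search.
    if not title.searchable:
        failures.append(f"{title.name}: not searchable")
    return failures


# Illustrative catalog; a monitoring job would run this on a schedule.
catalog = [
    Title("Example Show", "show", 13, True),
    Title("Broken Show", "show", 0, True),
    Title("Hidden Movie", "movie", 0, False),
]
for title in catalog:
    for failure in check_launched_title(title):
        print("ALERT:", failure)
```

Because the checks rely only on facts that should hold for any launched title, the same function can be applied to the whole catalog without per-title test cases.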

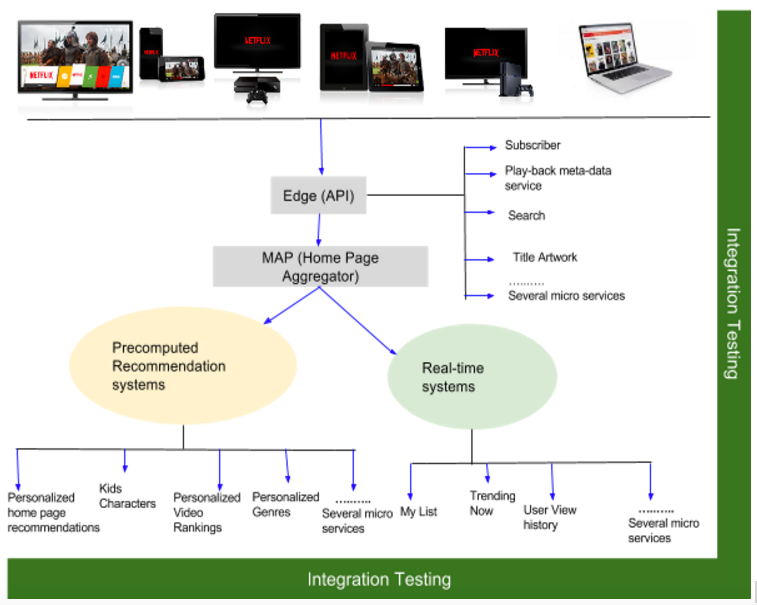

Netflix also has a variety of A/B tests running. A major challenge in adding end-to-end automation for those tests is the variety of components that need to be automated, according to the tech team. To solve this, Netflix decided to implement its automation by accessing microservices through their REST endpoints.

With this approach, the team obtained test runtimes ranging from four to 90 seconds. By comparison, the team estimated that Java-based automation would have had a median runtime of five to six minutes, according to the blog post.
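To illustrate what testing through a REST endpoint can look like, here is a minimal sketch using only the Python standard library. Everything specific in it is an assumption made for the example: the `/allocations/<profile>` path, the JSON fields, and the in-process stub server standing in for a real microservice. It is not Netflix’s API.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class FakeAllocationService(BaseHTTPRequestHandler):
    """Stand-in microservice; the path and payload are invented for this sketch."""

    def do_GET(self):
        # Always answers with the same canned allocation, whatever the path.
        body = json.dumps({"profile": "p1", "test": "example_test", "cell": 2}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep test output quiet


def fetch_allocation(base_url: str, profile: str) -> dict:
    """Call the (hypothetical) allocation endpoint and decode its JSON reply."""
    # An empty ProxyHandler bypasses any proxy configured in the environment.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(f"{base_url}/allocations/{profile}", timeout=5) as resp:
        return json.load(resp)


def run_check() -> dict:
    """Start the stub, query it over HTTP, and return the decoded response."""
    # Port 0 lets the OS pick a free port for the stub server.
    server = HTTPServer(("127.0.0.1", 0), FakeAllocationService)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        base_url = f"http://127.0.0.1:{server.server_address[1]}"
        return fetch_allocation(base_url, "p1")
    finally:
        server.shutdown()
        server.server_close()


allocation = run_check()
assert allocation["cell"] == 2, "profile landed in an unexpected test cell"
```

The test talks to the service directly over HTTP and asserts on the JSON it returns, which is one plausible reason such checks can finish in seconds: no client application has to be driven to reach the same answer.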

Another challenge for Netflix came with its simultaneous launch in 130 countries in 2016. Originally, Netflix planned to run the test code in a loop, once for each country. This made each test log significantly larger, which would have made it difficult to investigate failures.

Instead, the team decided to use the Jenkins Matrix plug-in to parallelize its tests, so that the tests for each country run in parallel. Additionally, Netflix used an opt-in model so the team could write automated tests that are global-ready. Automation now runs globally for Netflix, and it covers all high-priority integration test classes, including HITs in the regions where a title is available, according to the blog post.
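The difference between looping over countries and fanning out per country can be sketched in a few lines. This example uses a Python thread pool as a stand-in for the Jenkins Matrix plug-in; it is not a Jenkins configuration. The country list, `title_is_searchable`, and the simulated failure are all hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

COUNTRIES = ["US", "BR", "JP", "FR"]  # illustrative subset, not the real list


def title_is_searchable(country: str) -> bool:
    """Placeholder test body; a real test would query services for this country."""
    return country != "FR"  # simulate one regional failure


def run_for_country(country: str, test: Callable[[str], bool]) -> Dict[str, object]:
    """Run one test for one country and keep its log separate from the others."""
    log: List[str] = [f"[{country}] starting {test.__name__}"]
    passed = test(country)
    log.append(f"[{country}] {'PASS' if passed else 'FAIL'}")
    return {"country": country, "passed": passed, "log": log}


def run_matrix(countries: List[str], test: Callable[[str], bool]) -> Dict[str, dict]:
    """Fan the same test out across countries instead of looping serially."""
    with ThreadPoolExecutor(max_workers=len(countries)) as pool:
        results = pool.map(lambda c: run_for_country(c, test), countries)
        return {r["country"]: r for r in results}


results = run_matrix(COUNTRIES, title_is_searchable)
failed = sorted(c for c, r in results.items() if not r["passed"])
print("failed countries:", failed)
```

Each country gets its own short log rather than a share of one large one, so a failure in a single region can be investigated without reading the output for every other region.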

Looking ahead, Netflix’s automation road map includes workflow-based tests, alert integration, and chaos integration.