From the golden robots of Hephaestus, to Dr. Frankenstein’s monster, to HAL 9000, we’ve been fascinated by the idea of artificial intelligence (AI) for centuries. Creating artificial self-directing intelligence is the #2 dream of mankind, second only to being able to fly like a bird. However, while the Wright brothers were off the ground in 1903, AI hasn’t been ready to take off until very recently. AI requires data + computing power + algorithms. We’ve had the algorithms for a long time; now, big data and colossal computing power have brought us past the tipping point.

The feasibility of AI couldn’t come at a better time. Today, “Digital Disruption” is forcing enterprises to innovate at lightning speed. We need to dedicate resources to creating new sources of customer value while continuously increasing operational agility. Otherwise, we risk waking up one day to find out—like Nokia—that even though we did nothing “wrong,” we somehow lost.

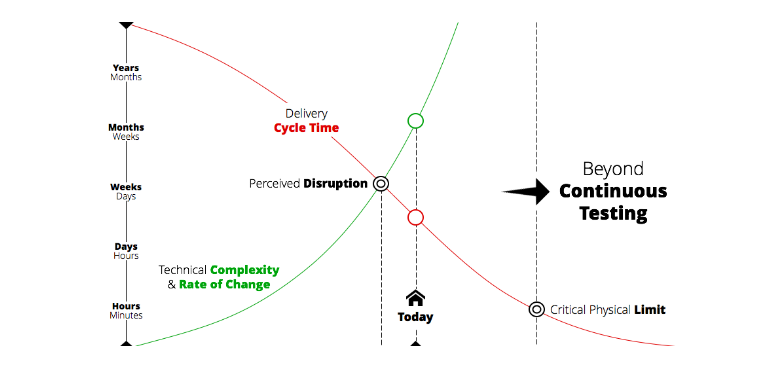

Anyone responsible for producing software knows that the traditional ways of developing and delivering software aren’t adequate for meeting this new demand. Not long ago, most companies were releasing software annually, bi-annually, or quarterly. Now, iterations commonly last 2 weeks or less. While delivery cycle time is decreasing, the technical complexity required to deliver a positive user experience and maintain a competitive edge is increasing—as is the rate at which we need to introduce compelling innovations.

In terms of software testing, these competing forces create a gap. We’ve turned to Continuous Testing to bridge the gap that we’re facing today. But how do we test when, over time, these trends continue and the gap widens? We will inevitably need to go beyond Continuous Testing. We will need additional assistance to be able to deliver a positive user experience given such high delivery speeds and technical complexity.

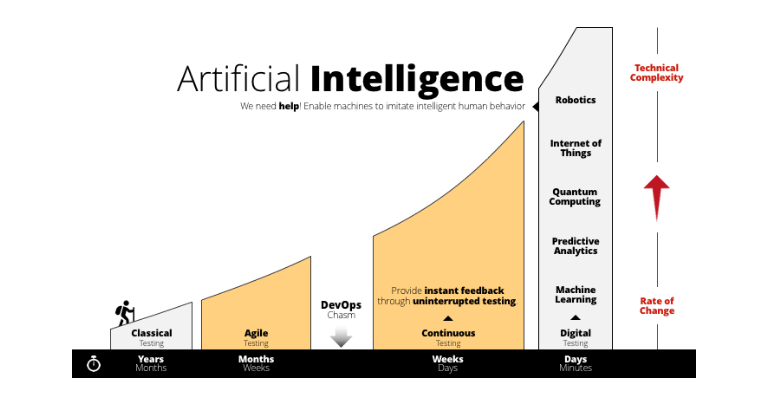

We’ve already undergone quite a journey to arrive at Continuous Testing. “Classical” testing was designed for software delivery cycles that span months (or sometimes even a year). Agile has made 2-week development iterations the norm—but now even more is needed to meet today’s voracious demand for software. Attempts to further accelerate the process exposed a chasm between Development, Test, and Operations. That chasm needed to be bridged with DevOps and Continuous Testing in order to move beyond that acceleration plateau. Today, the vast majority of organizations are talking about Continuous Testing and trying to implement it.

Nevertheless, when we look into the future, it’s clear that even Continuous Testing will not be sufficient. We need help.

We need “Digital Testing” to achieve further acceleration and meet the quality needs of a future driven by IoT, robotics, and quantum computing. AI, which imitates intelligent human behavior through techniques such as machine learning and predictive analytics, can help us get there.

What is AI?

Before we look more closely at how AI can take software testing to the next level, let’s take a step back and review what AI really means.

Forrester defines AI as

“A system, built through coding, business rules, and increasingly self-learning capabilities, that is able to supplement human cognition and activities and interacts with humans natural, but also understands the environment, solves human problems, and performs human tasks.”

Another interesting definition, widely attributed to Arthur Samuel and originally describing machine learning, is that AI is

“A field of study that gives computers the ability to learn without being explicitly programmed.”

One of the key points of AI is that you do not need to explicitly program algorithms. Algorithms are certainly used, but they’re not designed for an explicit solution of a distinct problem. The machines can learn, and they use data to do so.

Applying AI to Software Testing

To meet the challenges presented by accelerating delivery speed with increasing technical complexity, we need to follow a very simple imperative:

Test smarter, not harder

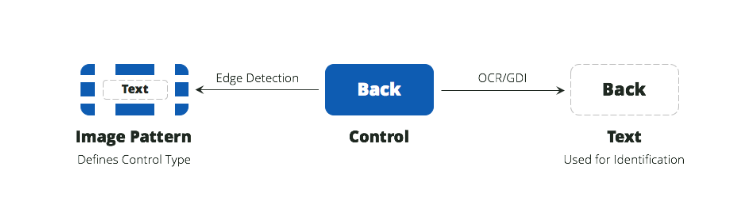

For example, consider how image recognition can be used to bring UI testing to the next level so that dynamic UI controls (e.g., for responsive sites) can be automatically recognized in all their various shapes and forms.

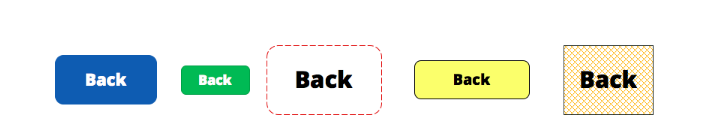

We can already recognize UI controls from the human perspective, beyond plain template matching. The UI’s pixel structures can be interpreted with the goal of recognizing image patterns and identification information such as text. For example, edge detection can be used to identify the following control as a button and OCR/GDI layers can be used to identify its text.
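To make the edge-detection idea concrete, here is a minimal sketch in plain Python. It is not how any particular testing tool works: the synthetic screenshot, the brightness threshold, and the helper names (`edge_mask`, `bounding_box`, `make_screenshot`) are all assumptions for illustration, and the OCR step is left out entirely.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]  # grayscale pixels, 0 (black) to 255 (white)


def edge_mask(img: Image, threshold: int = 50) -> List[List[bool]]:
    """Mark pixels whose brightness differs sharply from the right or lower neighbor."""
    h, w = len(img), len(img[0])
    mask = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx = abs(img[y][x] - img[y][x + 1]) if x + 1 < w else 0
            dy = abs(img[y][x] - img[y + 1][x]) if y + 1 < h else 0
            mask[y][x] = max(dx, dy) > threshold
    return mask


def bounding_box(mask: List[List[bool]]) -> Optional[Tuple[int, int, int, int]]:
    """Return (left, top, right, bottom) enclosing all edge pixels, or None."""
    points = [(x, y) for y, row in enumerate(mask) for x, on in enumerate(row) if on]
    if not points:
        return None
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return min(xs), min(ys), max(xs), max(ys)


def make_screenshot(width: int = 12, height: int = 8,
                    button: Tuple[int, int, int, int] = (3, 2, 8, 5)) -> Image:
    """Hypothetical screenshot: light background with one dark rectangular control."""
    left, top, right, bottom = button
    return [[40 if left <= x <= right and top <= y <= bottom else 230
             for x in range(width)] for y in range(height)]


screenshot = make_screenshot()
box = bounding_box(edge_mask(screenshot))
print(box)
```

The detected box encloses the dark rectangle. Because the sketch uses forward differences, the left and top edges land one pixel outside the control. A real tool would go on to classify the shape and read its text, which this sketch does not attempt.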

Using AI, the software testing tool can learn to identify controls during the scanning and test execution process, independent of the control size, color, text alignment, etc. A learning approach is applied: images are generalized by adding new image patterns and adapting existing image patterns during the scan and test execution process.
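One way to picture "adding new image patterns and adapting existing ones" is a small prototype store. The sketch below is an assumption-laden illustration, not the tool's actual algorithm: each control is reduced to a hypothetical two-number feature vector, and `PatternStore`, `tolerance`, and the sample values are invented for the example.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Sequence, Tuple


@dataclass
class Pattern:
    label: str             # control type, e.g. "button"
    centroid: List[float]  # running mean of the feature vectors seen so far
    samples: int = 1


@dataclass
class PatternStore:
    tolerance: float = 0.25  # assumed distance below which a sample adapts a pattern
    patterns: List[Pattern] = field(default_factory=list)

    def _nearest(self, features: Sequence[float],
                 label: Optional[str] = None) -> Tuple[Optional[Pattern], float]:
        candidates = [p for p in self.patterns if label is None or p.label == label]
        if not candidates:
            return None, math.inf
        best = min(candidates, key=lambda p: math.dist(p.centroid, features))
        return best, math.dist(best.centroid, features)

    def learn(self, features: Sequence[float], label: str) -> None:
        """Adapt the closest pattern with this label, or add a new pattern."""
        pattern, distance = self._nearest(features, label)
        if pattern is not None and distance <= self.tolerance:
            pattern.samples += 1
            for i, value in enumerate(features):
                # Incremental mean: move the centroid toward the new sample.
                pattern.centroid[i] += (value - pattern.centroid[i]) / pattern.samples
        else:
            self.patterns.append(Pattern(label, list(features)))

    def classify(self, features: Sequence[float]) -> Optional[str]:
        """Return the label of the nearest known pattern, if any."""
        pattern, _ = self._nearest(features)
        return pattern.label if pattern else None


store = PatternStore()
store.learn((0.30, 0.80), "button")     # first sighting: new pattern
store.learn((0.35, 0.85), "button")     # similar look: adapts the existing pattern
store.learn((0.95, 0.10), "scrollbar")  # different control type: new pattern
store.learn((0.90, 0.75), "button")     # very different-looking button: new pattern
print(len(store.patterns), store.classify((0.88, 0.70)))
```

Keeping several patterns per control type is what lets the same label cover controls that look quite different, which is the generalization the paragraph above describes.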

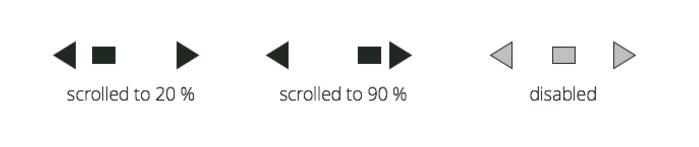

Each control knows its context on the graphical interface via anchor identification. Control properties are extracted from the image pattern, and can in turn be used to constrain automation of certain controls (for example, a scrollbar) during test execution. This eliminates the need to recognize them from their technical implementation (ID, etc.).
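Anchor identification can be sketched as a geometric lookup: find a stable element (the anchor), then pick the control positioned relative to it. The `Box` type, the "to the right of, on the same row" rule, and the coordinates below are assumptions made for illustration only.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Box:
    kind: str   # recognized control type, e.g. "label" or "textbox"
    text: str   # recognized text, empty if none
    left: int
    top: int
    right: int
    bottom: int


def right_of_anchor(elements: List[Box], anchor_text: str, kind: str) -> Optional[Box]:
    """Return the nearest control of `kind` to the right of the anchor, on the same row."""
    anchor = next((e for e in elements if e.text == anchor_text), None)
    if anchor is None:
        return None
    candidates = [
        e for e in elements
        if e.kind == kind
        and e.left >= anchor.right                           # lies to the right
        and e.top < anchor.bottom and e.bottom > anchor.top  # overlaps the anchor's row
    ]
    return min(candidates, key=lambda e: e.left - anchor.right, default=None)


# Hypothetical login form as recognized from a screenshot.
elements = [
    Box("label", "Username", 10, 10, 80, 30),
    Box("textbox", "", 90, 8, 250, 32),
    Box("label", "Password", 10, 50, 80, 70),
    Box("textbox", "", 90, 48, 250, 72),
]
target = right_of_anchor(elements, "Password", "textbox")
print(target)
```

The lookup never touches a technical ID: the password field is found purely from its position next to the "Password" label.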

The end result of this learning? It enables repeatable and stable test execution, even on responsive UIs. This will be the next chapter of UI test automation.

Not AI—But Does That Matter?

Given AI’s buzzword status, the label is all too commonly applied to innovations that are incredibly exciting and valuable but are not AI, because they don’t involve self-learning. “Self-healing” technologies fall into this category.

One of the most interesting examples of this is technology that updates broken (out-of-sync) test cases at runtime. There are typically multiple ways to identify a control (such as a button) that you’re trying to automate. Each control is usually identified by a technical property, such as an ID, a name, etc. In many cases, several properties each identify the control uniquely, so they are redundant. For example, assume 2 of the 3 identifiers being used to identify a particular control can no longer locate it. If just 1 of the 3 identifiers is still current, self-healing technology can be used to identify the control, as well as update the other 2 identifiers.
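The 3-identifier scenario can be sketched in a few lines of Python. This is an illustrative assumption, not the Tricentis implementation: the page is modeled as a list of dictionaries, and `locate_and_heal`, the property names, and the sample values are hypothetical.

```python
from typing import Dict, List, Optional

Control = Dict[str, str]


def find_matches(page: List[Control], key: str, value: str) -> List[Control]:
    """Return every control on the page whose property `key` equals `value`."""
    return [c for c in page if c.get(key) == value]


def locate_and_heal(page: List[Control], locator: Dict[str, str]) -> Optional[Control]:
    """Find a control through any identifier that still matches exactly one control,
    then overwrite the stored identifiers with the control's current values."""
    for key, value in locator.items():
        matches = find_matches(page, key, value)
        if len(matches) == 1:  # this identifier is still current and unique
            control = matches[0]
            for other in locator:
                # Heal: refresh each stored identifier from the live control.
                locator[other] = control.get(other, locator[other])
            return control
    return None  # no identifier works any more; a human has to step in


# The application changed: the button's id and name were renamed.
page = [
    {"id": "btn-submit-v2", "name": "submitOrder", "label": "Submit"},
    {"id": "btn-cancel", "name": "cancel", "label": "Cancel"},
]
# The test case still holds the old id and name; only the label is current.
locator = {"id": "btn-submit", "name": "submit", "label": "Submit"}

control = locate_and_heal(page, locator)
print(locator)
```

After the call, the stored locator carries the new id and name, so the next run can find the control through any of the three identifiers again. Note that nothing here is learned from data; it is a fixed rule applied at runtime.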

I don’t dispute the value of this technology at all. In fact, it’s something we’ve developed at Tricentis, and we have seen first-hand how it can save testers from having to constantly update test cases as applications evolve. Yet, despite the “self” in its name, self-healing technology is driven by advanced algorithms, not self-learning. It’s not AI. It’s what I call “cognitive testing.” Nevertheless, it is a prime example of the type of innovation we need to satisfy the demands of “Digital Testing” beyond Continuous Testing.