If you Google “how to organize your data for a database,” you’ll get millions of results. That’s a lot of hits for something that you shouldn’t have to think about.

But for the last 40 years, that’s exactly what we’ve been forced to do. The people in our organizations have spent an ungodly amount of time and money massaging our data, planning and re-planning how to organize it, and dealing with the fallout when our theoretical data model comes face to face with reality and the real world wins.

The database needs to spend more time working for us, rather than the other way around. It has to. There is simply too much data being generated, in too many disparate systems, of too many varied formats, to put that burden on humans. Organizations can’t generate any differentiated value from their data if they’re spending 70 percent of their budget just keeping the system happy. Those that are able to leverage their data will dominate those that aren’t.

Let’s face it, it’s hard work to generate differentiated value. You need your best people focused on that – not spending their time dealing with minutiae that the system should handle for them. Remember, the ‘M’ in DBMS stands for Management. The database should be managing the data. It should be smart about how it does that and help users get better answers faster without doing so much work up-front.

How much data?

The explosion of data is upending the user-database dynamic.

Today, a doctor at a patient’s bedside creates reams of data: notes on skin tone, test results, comments, mood, conversations with other doctors, expectations and likelihoods. Digital cameras dump loads of information into databases as they capture pictures, sounds, scenes, and emotions, while data pours in simultaneously from social media sites on how those emotions are being perceived. Financial firms are accessing untold amounts of data on transactions, trends, global forces and connections.

Now consider the Internet of Things (IoT). Gartner, in its 2016 ‘Planning Guide for the Internet of Things,’ predicts that by 2020, “the world will contain more than 20 billion IoT devices, generating trillions of dollars’ worth of business value.”

Every industry is already being affected. A connected car alone will kick out 25 gigabytes of data every hour as it records its route, speed, road conditions, and performance.

That’s the real world creating data in real time, and enterprises need databases that provide the best mechanisms for handling that data as it is, and then for providing insights and taking action based on it. All the while, they must keep track of governance data, lineage, provenance, and security, which is critical for industrial IoT.

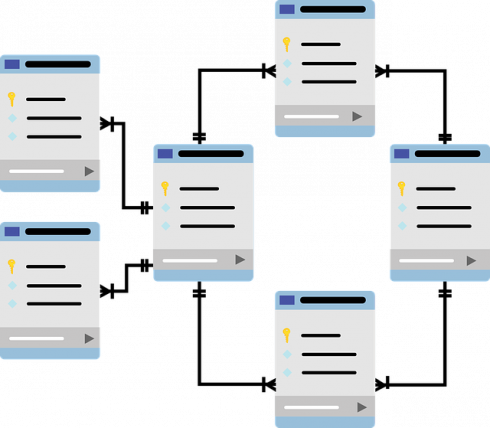

Moreover, this data is not in one system; it is in many systems. These systems tend to be developed independently and accrete over time. Now you’ve got huge volumes of data in multiple systems with varying schemas and semantics and you are left to wrangle it all into a form where you can leverage all of it.

The downfall of the traditional, normalized relational structure is that the designers and the users must plan the layout of the data long before the first bit of data is loaded or the first line of code is written. That’s really tough when you’re trying to leverage data from multiple systems, none of which were designed to be consistent or even to know about one another. The time to value is extremely long and the agility is low.

If requirements change, or the data changes, or new sources are added, or new questions are posed, then it’s back on the shoulders of your people to readjust everything to make it work within the rigid model. That approach is too slow and laborious as companies compete globally – often against modern competitors without the burden of legacy approaches.

Databases need to take on this burden. They need to take in information about entities such as a customer, an asset, or a patient, and maintain a consistent 360-degree view of each one. Keeping all the data about an entity in a single denormalized document is a huge step in the right direction. That’s one of the reasons for the ascendancy of document-oriented databases.
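To make the idea concrete, here is a minimal Python sketch that is not tied to any particular database product. It assumes hypothetical normalized tables (modeled as lists of dicts named `customers`, `addresses`, and `orders`) and a hypothetical `customer_document` helper that gathers one customer's rows into a single nested document, so every fact about that customer lives in one place instead of being spread across tables linked by foreign keys.

```python
import json

# Hypothetical normalized "tables": each list of dicts stands in for a relational table.
customers = [{"customer_id": 1, "name": "Ada Lopez"}]
addresses = [
    {"customer_id": 1, "kind": "billing", "city": "Austin"},
    {"customer_id": 1, "kind": "shipping", "city": "Denver"},
]
orders = [
    {"order_id": 100, "customer_id": 1, "total": 42.50},
    {"order_id": 101, "customer_id": 1, "total": 17.25},
]


def customer_document(customer_id: int) -> dict:
    """Join the normalized rows for one customer into a single nested document."""
    customer = next(c for c in customers if c["customer_id"] == customer_id)
    return {
        "customer_id": customer_id,
        "name": customer["name"],
        # Child rows are embedded directly instead of being referenced by foreign key.
        "addresses": [
            {"kind": a["kind"], "city": a["city"]}
            for a in addresses
            if a["customer_id"] == customer_id
        ],
        "orders": [
            {"order_id": o["order_id"], "total": o["total"]}
            for o in orders
            if o["customer_id"] == customer_id
        ],
    }


doc = customer_document(1)
print(json.dumps(doc, indent=2))
```

In a document-oriented system the assembled shape would typically be stored as-is, so readers get the whole entity in one fetch rather than re-running the join each time.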

Having the right approach matters

A purely document-oriented approach won’t be enough for most organizations today. Given the speed of business, both the user and the underlying database need greater flexibility to react and transact faster than ever across a wide range of distributed systems. To relieve people of more of this burden, the database should:

- Take a multimodel approach: Your business runs on lots of different types and forms of data, but you shouldn’t be forced to understand and manage one system for each, or to cram one form of data into a container that is best suited for another. Imagine if your only desktop app was a spreadsheet. Sure, you could write a book in rows and columns, or express a friend graph that way, but you wouldn’t want to. Once again, you’re conforming to the technology rather than the other way around.

- Be able to capture meaning, not just data: Semantics is a data model that focuses on the relationships between data, adding contextual meaning so the data can be better understood, searched, and shared. Semantic technologies help companies understand all of their data and unlock insights by using ontologies and rule sets to link together documents, data, and triples, which opens up opportunities for smarter applications and queries. Semantics operates at every level: it’s about how different pieces of data are related, what concepts the data captures, and how that data relates to other data in the world. It is a knowledge graph. It’s ironic that “Relational Databases” actually have no ability to express semantic relationships. This is a major shortcoming.

- Be able to project data into the most convenient form: The document model, with its highly flexible and very rich structure, is a great way to represent data, but sometimes you want other representations. Since before computers ever existed we’ve been making tables of data and adding up numbers in columns. It’s a natural view of data for some operations. Wouldn’t it be nice if you could use documents and semantics to very closely model your real-world business entities and the relationships between them — and at the same time be able to efficiently project that data into tabular or graph form when the problem at hand calls for it? This would allow you to speak in terms of your business entities rather than being forced to speak in terms of the shredded model that relational imposes. At the same time, you can get the benefits of tabular data when you need it.
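As a rough illustration of keeping one underlying model and projecting it into different shapes, the Python sketch below stores hypothetical patient records as nested documents plus a few semantic facts as (subject, predicate, object) triples. It then projects them on demand: the hypothetical `to_rows` function flattens documents into a table for column-style arithmetic, and `to_graph` turns the triples into an adjacency map that can be traversed. All names and data are illustrative assumptions; the point of the text is that the database, not application code like this, would ideally perform these projections.

```python
# Hypothetical patient documents: one rich, nested record per real-world entity.
patients = [
    {"id": "p1", "name": "Jane Roe",
     "tests": [{"code": "A1C", "value": 6.1}, {"code": "LDL", "value": 130}]},
    {"id": "p2", "name": "John Doe",
     "tests": [{"code": "A1C", "value": 5.4}]},
]

# Hypothetical semantic facts expressed as (subject, predicate, object) triples.
triples = [
    ("p1", "treatedBy", "dr_smith"),
    ("p2", "treatedBy", "dr_smith"),
    ("dr_smith", "worksAt", "general_hospital"),
]


def to_rows(docs):
    """Project nested documents into flat (patient_id, name, test_code, value) rows."""
    return [(d["id"], d["name"], t["code"], t["value"]) for d in docs for t in d["tests"]]


def to_graph(facts):
    """Project triples into an adjacency map: subject -> list of (predicate, object)."""
    graph = {}
    for subject, predicate, obj in facts:
        graph.setdefault(subject, []).append((predicate, obj))
    return graph


def objects(graph, subject, predicate):
    """Follow one edge type out of a node and return the target nodes."""
    return [obj for pred, obj in graph.get(subject, []) if pred == predicate]


rows = to_rows(patients)
graph = to_graph(triples)

# Tabular view: average A1C value across all patients.
a1c_values = [value for _, _, code, value in rows if code == "A1C"]
print(sum(a1c_values) / len(a1c_values))

# Graph view: which hospital is patient p1's doctor affiliated with?
for doctor in objects(graph, "p1", "treatedBy"):
    print(doctor, objects(graph, doctor, "worksAt"))
```

The documents remain the source of truth for each entity; the rows and the adjacency map are disposable views built for the question at hand.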

There are other important aspects of a modern, smarter database. The key to all of them is giving the database more information in terms of policies, intent, and meaning. Armed with these attributes, the database can make smarter choices about how to store data, how to query it, how to protect it, how to best apply cloud resources, and more. Those are all things that make the database work for you, and that’s the way it should be.