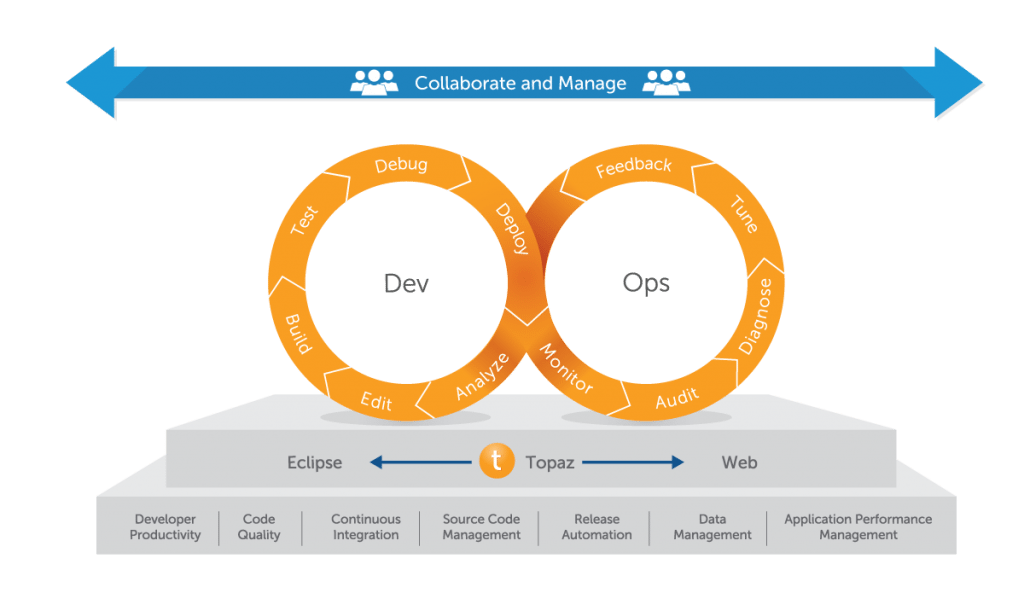

Compuware is extending its mainframe DevOps initiatives with new REST APIs for ISPW, the source-code management and release automation solution it acquired in January.

The new APIs are architected as REST web services, and Compuware customers can use them to begin accelerating cross-platform apps, reducing IT costs, and improving the quality of their software. The APIs provide integrations for automating end-to-end build-and-deploy processes across DevOps toolchains, and they allow ISPW users to create, deploy and review the status of code releases from third-party solutions.

Along with the newly available APIs, Compuware announced that it is acquiring Itegration’s Mainframe SCM methodology to speed up enterprise customers’ transitions to agile and reduce the risk involved. Because many enterprises cannot achieve agile with their old SCM systems, Compuware wants the acquisition to reduce the time, effort and risk associated with SCM migrations, according to the company.

The acquisition will enable Compuware customers to migrate their SCM systems from agile-averse products like CA Endevor, CA Panvalet, CA Librarian and Micro Focus/Serena ChangeMan ZMF, as well as internal SCM systems. These systems can be migrated to ISPW, Compuware’s agile and DevOps-enabling cross-platform SCM solution.

New plug-and-play Arduino IoT kit

Arduino wants to simplify the way non-developers build smart devices, which is why it introduced ESLOV, a new plug-and-play IoT invention kit, on Kickstarter.

ESLOV contains intelligent modules that join together to create projects, with no hardware or programming knowledge necessary. Users can connect the modules using cables or mount them on the back of Arduino’s WiFi and motion hub. Then, the hub can be plugged into a PC.

ESLOV’s visual code editor recognizes each module and displays it on the PC screen. The device can be published to the Arduino Cloud so users can interact with it remotely from anywhere. The ESLOV modules and hub can also be programmed with the Arduino Editor.

The hardware and software of this device are open-source, and third-party modules from partners and other certified programs are welcome.

Yahoo open-sources its deep learning model

Yahoo wants to tackle the challenge of identifying which images are not suitable/safe for work (NSFW) with its open-source, general-purpose Caffe deep neural network model.

The model takes an image and outputs the probability that the image is NSFW. Developers can use this score to filter images below a threshold, chosen from a receiver operating characteristic (ROC) curve for their specific use case, according to a blog post by Yahoo research engineer Jay Mahadeokar and Gerry Pesavento, leader of Yahoo’s Vision and Machine Learning group.
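As a rough illustration of that thresholding step, the sketch below picks a cutoff from ROC points computed on a small labeled validation set and then keeps only the images that score below it. It does not use Yahoo's code or model: the function names, the sample scores and labels, the file names, and the "maximum tolerated false positive rate" rule are all assumptions made for this example, and it reads "filter images below a threshold" as "keep images whose score is under the cutoff."

```python
from typing import List, Sequence, Tuple


def roc_points(scores: Sequence[float], labels: Sequence[int]) -> List[Tuple[float, float, float]]:
    """Return (threshold, false_positive_rate, true_positive_rate) tuples.

    An image is flagged as NSFW when its score is >= threshold.
    labels use 1 for NSFW and 0 for safe (hypothetical validation data).
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("need at least one NSFW and one safe example")
    points = []
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 0)
        points.append((t, fp / negatives, tp / positives))
    return points


def pick_threshold(scores: Sequence[float], labels: Sequence[int], max_fpr: float) -> float:
    """Pick the lowest threshold whose false positive rate is <= max_fpr.

    Lowering the threshold never lowers the true positive rate, so this
    catches as many NSFW images as the false-positive budget allows.
    """
    allowed = [p for p in roc_points(scores, labels) if p[1] <= max_fpr]
    if not allowed:
        raise ValueError("no threshold satisfies the requested false positive rate")
    return min(allowed, key=lambda p: p[0])[0]


def keep_safe(scored_images: Sequence[Tuple[str, float]], threshold: float) -> List[str]:
    """Keep only images whose NSFW score is strictly below the threshold."""
    return [name for name, score in scored_images if score < threshold]


if __name__ == "__main__":
    # Made-up validation scores and labels, for illustration only.
    val_scores = [0.95, 0.80, 0.70, 0.40, 0.30, 0.10]
    val_labels = [1, 1, 0, 1, 0, 0]
    cutoff = pick_threshold(val_scores, val_labels, max_fpr=0.0)
    incoming = [("a.jpg", 0.92), ("b.jpg", 0.15), ("c.jpg", 0.80)]
    print(cutoff, keep_safe(incoming, cutoff))
```

A stricter use case would pass a lower `max_fpr` and accept missing more NSFW images; a more permissive one would raise it. Which trade-off is right depends on the application, which is why the cutoff is left to the developer.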

The model is built on the Caffe deep learning library, and Yahoo can use it with CaffeOnSpark, an open-source framework for distributed learning that brings Caffe deep learning to Hadoop and Spark clusters for training models.

Developers can find the source code for this model on GitHub.

MIT CSAIL team creates new 3D printing method

This week, researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) will present a new method for 3D printing soft materials. The method will allow people to build safer robots, and it can also be used to improve the durability of drones, phones and more.

The “Programmable Viscoelastic Material” technique allows users to program every part of a 3D-printed object to the exact level of elasticity they want. The new “skins” that the researchers created reduce the amount of energy a robot transfers to the ground by 250%. These skins could play a role in extending the lifespan of delivery drones from companies like Amazon or Google.

“That reduction makes all the difference for preventing a rotor from breaking off of a drone or a sensor from cracking when it hits the floor,” says CSAIL director Daniela Rus, who oversaw the project and co-wrote a related paper. “These materials allow us to 3D print robots with viscoelastic properties that can be inputted by the user at print-time as part of the fabrication process.”