Databricks has announced the general availability of Apache Spark 2.0 on its platform, building on what the Databricks community has learned and focusing on three themes: ease of use, more speed, and more intelligence, according to the company.

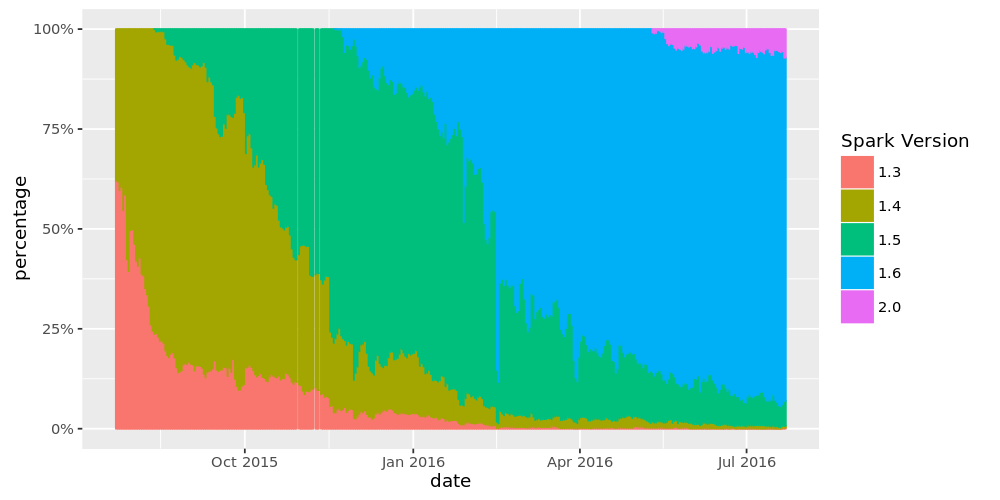

From the preview release of Apache Spark 2.0, Databricks said that 10% of its clusters were already using this release, and customers experimented with the new features and provided Databricks with feedback that was used to create the stable version of Apache Spark 2.0.

There are several new features with Apache Spark 2.0. Spark focuses on two specific areas, standard SQL support and unifying DataFrame/Dataset API, according to the company.

(Related: Reynold Xin goes over what’s in Spark 2.0)

Databricks has streamlined Spark’s APIs, including unifying its DataFrame and Dataset APIs in Java and Scala. The streamlined APIs in this release also includes the DataFrame API, which becomes a type alias for Dataset of Row in Spark 2.0, according to the company. Also, Spark 2.0 has expanded SQL support, with an introduction of a new ANSI SQL parser and subqueries, which are queries that are nested inside of another query.

Making Spark faster was another focus of this release. According to Databricks’ 2015 Spark Survey, 91% of its users said that performance is one of the most important aspects of Apache Spark. This led Databricks to consider how Spark’s physical execution layer was built, and now, Spark 2.0 ships with the second-generation Tungsten engine. This Tungsten engine “builds upon ideas from modern compilers and MPP databases and applies them to Spark workloads,” according to the company.

As a way to get applications to make real-time decisions, Spark 2.0 includes a new API called Structured Streaming, which has three key improvements: integrated APIs with batch jobs, transactional interaction with storage systems, and rich integration with the rest of Spark. Additionally, Spark 2.0 ships with an initial, alpha version of Structured Streaming as an extension to the DataFrame and Dataset APIs.

With these improvements, developers won’t have to manage failures manually or keep their applications in sync with batch jobs. The streaming job will always give the same answer as a batch job on the same data, according to the company. Developers will also be able to build complete applications instead of just streaming pipelines with more integration with the rest of Spark. Databricks expects more integrations with MLlib and other libraries in the near future, according to the company.

Overall, Spark users will be able to see more east of use with this release, as well as an updated Databricks workspace that supports Structured Streaming, according to Databricks. The company said more details on Structured Streaming in Apache Spark 2.0 are coming soon.