Yesterday, Baidu Research’s Silicon Valley AI Lab (SVAIL) released Warp-CTC as open-source code on GitHub, with the goal of putting it in the hands of the machine learning community.

Warp-CTC is a tool that can plug into existing machine learning frameworks to speed up the development of artificial intelligence. According to SVAIL, it runs up to 400x faster than previous implementations.

“The code enables training of neural networks for tasks like speech recognition and OCR,” said Erich Elsen, a research scientist at SVAIL.

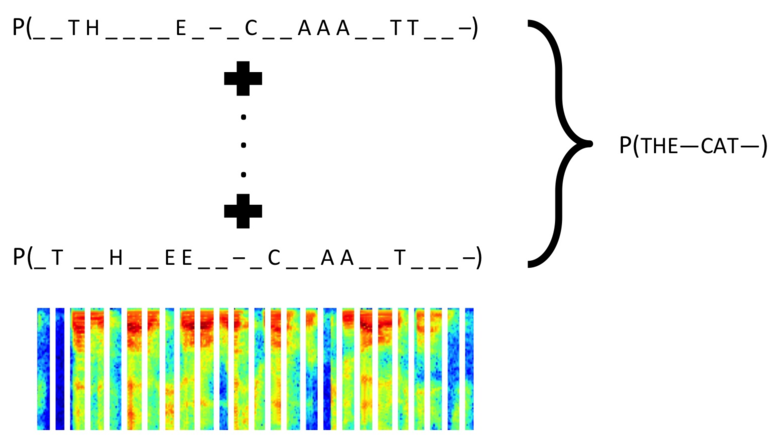

Warp-CTC is an open-source implementation of the CTC algorithm for CPUs and NVIDIA GPUs. CTC is an objective function that can be used while doing supervised training for sequence prediction, without knowing the alignment between the input and output. CTC stands for “Connectionist Temporal Classification,” and it can be used to train end-to-end systems for speech recognition.
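
To make "without knowing the alignment" concrete, here is a minimal pure-Python sketch of the CTC objective. It is not Warp-CTC's code or API, only an illustration of the quantity such a library computes. The function names, the choice of index 0 as the blank symbol, and the use of plain probabilities (rather than the log-space arithmetic a production implementation would likely use for numerical stability) are all assumptions made for this example. `ctc_loss` uses the standard forward recursion, and `brute_force_loss` sums over every possible frame-level alignment to show that the two agree.

```python
import itertools
import math

BLANK = 0  # assumption for this sketch: index 0 is the CTC blank symbol


def collapse(path):
    """Merge repeated symbols, then drop blanks (the CTC collapsing rule)."""
    out = []
    prev = None
    for s in path:
        if s != prev and s != BLANK:
            out.append(s)
        prev = s
    return out


def ctc_loss(probs, target):
    """Negative log-likelihood of `target`, summed over all alignments.

    probs[t][k] is the model's probability of symbol k at input frame t.
    """
    # Interleave blanks around the labels: [a, b] -> [_, a, _, b, _]
    ext = [BLANK]
    for s in target:
        ext += [s, BLANK]
    size = len(ext)

    # alpha[s] = total probability of all partial paths ending at ext[s]
    alpha = [0.0] * size
    alpha[0] = probs[0][BLANK]
    if size > 1:
        alpha[1] = probs[0][ext[1]]

    for t in range(1, len(probs)):
        new = [0.0] * size
        for s in range(size):
            total = alpha[s]
            if s >= 1:
                total += alpha[s - 1]
            # Skipping the blank is only allowed between two different labels.
            if s >= 2 and ext[s] != BLANK and ext[s] != ext[s - 2]:
                total += alpha[s - 2]
            new[s] = total * probs[t][ext[s]]
        alpha = new

    # Valid paths end on the last label or on the trailing blank.
    likelihood = alpha[-1] + (alpha[-2] if size > 1 else 0.0)
    return -math.log(likelihood) if likelihood > 0 else math.inf


def brute_force_loss(probs, target):
    """Same quantity, computed by enumerating every frame-level path."""
    num_frames, num_symbols = len(probs), len(probs[0])
    total = 0.0
    for path in itertools.product(range(num_symbols), repeat=num_frames):
        if collapse(path) == list(target):
            total += math.prod(probs[t][k] for t, k in enumerate(path))
    return -math.log(total) if total > 0 else math.inf


# Three input frames, three symbols (blank, 1, 2); each row sums to 1.
example_probs = [
    [0.6, 0.3, 0.1],
    [0.2, 0.5, 0.3],
    [0.1, 0.2, 0.7],
]
print(ctc_loss(example_probs, [1, 2]))
print(brute_force_loss(example_probs, [1, 2]))
```

The brute-force version enumerates a number of paths that grows exponentially with the number of input frames, while the forward recursion takes time proportional to the number of frames times the target length. That gap is why the dynamic-programming form is the one worth optimizing for CPUs and GPUs.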

SVAIL engineers developed Warp-CTC while building their Deep Speech end-to-end speech recognition system, in order to improve the scalability of models trained using CTC.

Developers can use Warp-CTC to tackle problems like speech recognition, according to Elsen, especially if they haven’t had access to such tools before. He also noted that other implementations of the CTC algorithm exist, and that the algorithm can be used to speed up AI problem-solving.

Warp-CTC can be found on GitHub.