As a way to help machines and robots better understand the objects and environment around them, researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have created an algorithm that can effectively learn how to predict sound.

According to the researchers’ paper, “The algorithm uses a recurrent neural network to predict sound features from videos and then produces a waveform from these features with an example-based synthesis procedure.”
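The article does not spell out the paper's synthesis procedure, so the following is only a rough sketch of one simple form of example-based synthesis: a nearest-neighbor lookup that returns the stored waveform whose sound features lie closest to the predicted ones. The `nearest_example` function, the two-dimensional feature vectors, and the toy `library` are all assumptions made for illustration and are not taken from the researchers' code.

```python
import math

def nearest_example(predicted, library):
    """Return the waveform of the library entry whose features are closest to `predicted`."""
    # Euclidean distance between the predicted features and each stored example.
    best = min(library, key=lambda entry: math.dist(entry["features"], predicted))
    return best["waveform"]

# Hypothetical toy library: each entry pairs a sound-feature vector with a stored waveform.
library = [
    {"features": [0.9, 0.1], "waveform": [0.0, 0.8, -0.6, 0.2]},   # e.g. a sharp impact
    {"features": [0.2, 0.7], "waveform": [0.0, 0.3, 0.25, 0.1]},   # e.g. a dull impact
]

# Stand-in for the features a trained network might predict from a video clip.
predicted = [0.8, 0.2]
waveform = nearest_example(predicted, library)
```

In this toy case the lookup returns the first stored waveform, since its features are closer to the prediction. A real system would presumably use far richer features and a much larger set of recorded examples.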

The team simply wanted to show that sounds predicted by their model were realistic enough to fool participants in a “real or fake psychophysical experiment.” While the researchers achieved their goal, there is still room for improvement before the algorithm can help machines or robots understand the world around them.

Abhinav Gupta, an assistant professor of robotics at Carnegie Mellon University who was not involved in the study, said that this research is a step toward emulating the way humans learn, which is by integrating sound and sight. He also said, “Current approaches in AI only focus on one of the five sense modalities, with vision researchers using images, speech researchers using audio, and so on.”

How they did it

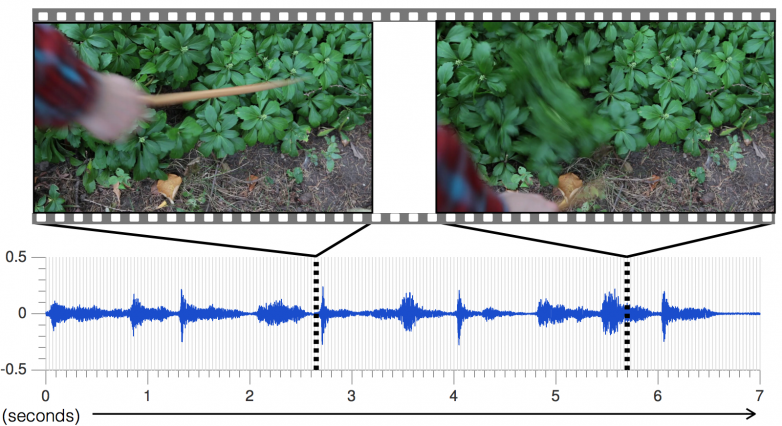

The first step was to collect data. Phillip Isola, one of the co-authors of the paper, said that the team spent about a month or two recording themselves hitting all kinds of objects with a drumstick. The result was about 1,000 videos with 46,000 sounds of the researchers hitting, prodding or scraping an object, he said.

Isola said the next step was to manually label all of the data. An algorithm then learned to map the pixels to those labels; in this case, the labels were raw sounds. Once all of the data was collected, he said, training the algorithm took a couple of days of processor time.

Deep learning played a role in this project because it is a very effective kind of machine learning that frees researchers from having to hand-design algorithms, said Isola.

“Instead, we give the computer many examples of the desired behavior we want (producing the correct sounds), and the learning algorithm automatically tries to achieve this behavior,” he said.

There were a few challenges during the initial stages of the project, including finding a “compelling way to collect large-scale data about physical interactions,” said Isola.

“We started out wanting to model the physical response of an interaction with the world, [like] what happens when you hit an object? There are so many kinds of physical responses, and most are hard to collect data for.”

The research team began by explicitly measuring the shape deformation of a surface when hit, which required special 3D scanning equipment. They found that it was much easier to capture the same kind of information by measuring the sound itself, and so the only equipment that they needed to use was a microphone.

“This lets us take the project out of the lab and into more natural settings,” said Isola. “Moving to ‘in the wild’ settings is important for computer vision applications that eventually will have to work in normal, everyday settings.”

The team tested how realistic these sounds were with an online study in which subjects saw two videos of collisions, one with the real sound and the other with the algorithm’s. The study found that people picked the fake sound over the real one twice as often as they did for a baseline algorithm.
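To make the comparison concrete, here is a small sketch of how a "fooled" rate could be computed from this kind of two-choice study. The `fooled_rate` function and the response lists are hypothetical, and the numbers are invented purely for illustration; they are not the study's data.

```python
def fooled_rate(choices):
    """Fraction of trials in which the participant picked the synthesized clip as real."""
    if not choices:
        raise ValueError("no trials recorded")
    return sum(1 for c in choices if c == "fake") / len(choices)

# Made-up responses for illustration only; each entry records which clip was judged real.
model_trials = ["fake", "real", "fake", "real", "real"]
baseline_trials = ["real", "real", "fake", "real", "real"]

model_rate = fooled_rate(model_trials)        # 2 of 5 trials
baseline_rate = fooled_rate(baseline_trials)  # 1 of 5 trials
ratio = model_rate / baseline_rate            # how much more often the model fools people
```

With these invented responses, the model's clips are chosen in 2 of 5 trials and the baseline's in 1 of 5, which is what "twice as often" would look like in such a tally.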

When a computer vision algorithm succeeds, people can easily forget how far researchers still are from completely solving the problem with AI systems, according to Isola. The team sees a few areas for improvement in this project that they hope to expand on, including modeling physical responses beyond just sound.

The project could go in two directions. One is the immediate application of adding sounds to silent movies, said Isola. The bigger picture is using it to study how AI can learn about the world around it, just by “poking and prodding the environment, and exploring the physical consequences of its behavior,” he said.

Sound prediction is the initial step in the right direction, according to Isola.