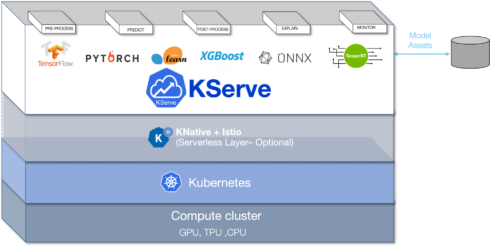

KServe is a tool for serving machine learning models on Kubernetes. It hides the complexity of tasks such as autoscaling, networking, health checking, and server configuration, which lets users give their machine learning deployments features like GPU autoscaling, scale to zero, and canary rollouts.

KServe, previously known as KFServing, was created by IBM and Bloomberg’s Data Science and Compute Infrastructure team. The project grew out of IBM's proposal to serve machine learning models in a serverless way using Knative. Bloomberg and IBM met at the Kubeflow Contributor Summit in 2019; at the time, Kubeflow had no model serving component, so the two companies worked together on a new project to provide a model serving solution.

The project debuted at KubeCon + CloudNativeCon North America in 2019. It was later moved from the Kubeflow Serving Working Group into an independent organization to grow the project and broaden its contributor base, and at that point it was renamed KServe.

KServe provides model explainability through integrations with Alibi, AI Explainability 360, and Captum. It also supports monitoring of models in production through integrations with Alibi Detect, AI Fairness 360, and the Adversarial Robustness Toolbox (ART).

The project has been adopted by a number of organizations, including NVIDIA, Cisco, and Zillow.