Feedback is routinely requested and occasionally considered. Acting on it is, unfortunately, nowhere near as routine. Perhaps that is because teams lack a practical, focused understanding of feedback loops and how to leverage them. We’ll look at feedback loops: the purposeful design of a system or process to gather feedback effectively and to enable data-driven decisions and behavior based on it. We’ll also look at some potential issues and explore countermeasures for delayed feedback, noisy feedback, cascading feedback, and weak feedback. In this four-part series, we’ll follow a newly onboarded associate, Alice, through her experience at an organization that needs to accelerate its value creation and delivery processes.

Alice joined the company recently, getting a nice bump in pay and the promise of working on a cutting-edge digital product. Management recruited her aggressively to address an organizational crisis: unacceptable speed of delivery. The challenge was to accelerate delivery from once a month to once every two weeks. The engineering organization was relatively small (about 50 engineers), scattered across different functional areas.

On day one, Alice learned that the product organization consisted of three cross-functional engineering teams, each with six engineers. She was excited to learn that test engineers and software engineers routinely worked together. However, it seemed strange that the organization had separate shared services for data, infrastructure, and user acceptance testing (UAT), even though data and infrastructure were parts of the product. It was also a surprise, and of immediate interest, that the current release cycle reserved “at least” one week for UAT, and that the product team set aside time in the following release cycle to fix bugs based on UAT feedback.

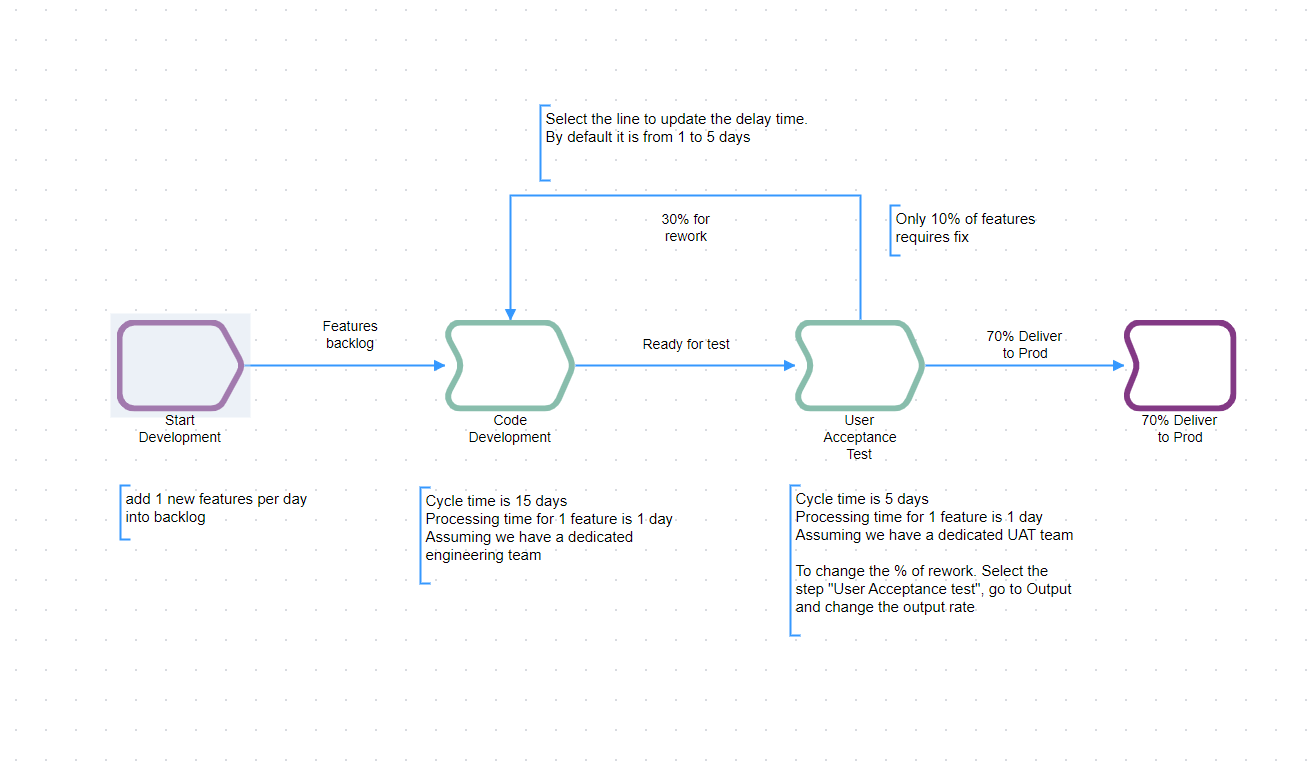

Alice knew that the software development process could be described as a set of feedback loops between code development activities performed by engineers and various feedback activities. These feedback activities verify the quality of implemented features from functional as well as non-functional standpoints.

These activities are well known and come in many forms. Most are designed and executed by the team’s own engineers (unit testing, code reviews, security testing); some are handled by specialized engineers (performance testing, security testing, chaos engineering, and the like). Each feedback activity is associated with a different artifact: a code change, a feature branch, a build, or an integrated solution, for example.

Feedback activities might (should) affect the whole delivery process for both effectiveness and efficiency.

Figure 1. Simplified software development process with feedback

Delayed Feedback

Delayed feedback has several adverse implications for the speed of capability delivery:

- While waiting for feedback on a product’s quality attributes, engineers often continue writing code on top of a codebase they assume to be correct. The longer the feedback is delayed, the more rework is likely and the more impactful that rework will be.

- Such rework is often unplanned, so it is likely to delay both direct and collateral product delivery plans and to hurt resource utilization.

It was evident to Alice that the UAT team provided feedback to the product team very late, so it could be a great starting point for accelerating delivery, whether by eliminating the delay or by shortening the release cycle. Alice started her analysis by calculating the impact of delayed UAT feedback on delivery.

This is easy to calculate: we just need the probability that a step produces feedback. For UAT, that is the ratio of features with defects to all features delivered to the UAT step, which gives the probability that a feature requires rework after UAT. In this case it was 30%, so the percentage of complete and accurate features was 70%.
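The ratio can be sketched in a few lines of Python. This is only an illustration: the function name `rework_probability` is hypothetical, and the inputs (20 features per release, six needing rework) are the averages the article reports further on.

```python
def rework_probability(features_with_defects: int, features_delivered: int) -> float:
    """Share of features delivered to a step that come back for rework."""
    if features_delivered <= 0:
        raise ValueError("features_delivered must be positive")
    return features_with_defects / features_delivered


# Averages quoted later in the article: 20 features per release, six with defects.
p_rework = rework_probability(features_with_defects=6, features_delivered=20)

# Percent complete and accurate is the complement of the rework probability.
pct_complete_accurate = 1 - p_rework

print(f"rework probability: {p_rework:.0%}")
print(f"complete and accurate: {pct_complete_accurate:.0%}")
```

The same function could be applied to any other step in the process, given the count of items that step sends back.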

A diagram exploring “the Alice challenge” was created on the VSOptima platform. If you like, you can run a simulation there and see how the rework ratio and the delayed feedback loop affect overall delivery throughput, activity ratio, and lead time. The important observation is that such a feedback loop consumes the capacity of the code development activity and creates loops in the flow.

Next, we can calculate how much the rework costs the delivery team. This cost has two components. The first is the direct cost of addressing an issue. Alice learned that, on average, one defect cost about one day of work for software engineers and test engineers, since they needed to reproduce the issue, determine how to remediate it, write and execute tests, merge the fix back into the product, and verify that the fix didn’t affect other features.

The second is the cost of product roadmap delay. If we delay the release by one day, what would the revenue loss be? That is often hard to estimate, and Alice didn’t get any tangible number from the product managers.

But even the direct cost of fixing the issues raised by late feedback gave her excellent ammunition to defend her plan to shorten the release cycle. Of the 20 features delivered in an average release cycle, six required rework. Remediation typically took six days for 12 engineers, which is about 20 percent of the planned release capacity.

We have three teams of six engineers each, a total of 18 engineers.

The release cycle is one month, which is 20 working days.

Multiplying 18 engineers by 20 working days gives a full capacity of 360 engineering working days.

With six features needing rework, the rework takes 12 engineers six days, which is 72 engineering working days.

So 72 out of 360, or 20% of engineering working days, is spent on rework.
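The arithmetic above can be reproduced with a short Python sketch. The function and parameter names are illustrative assumptions; the numbers are the ones stated in the text.

```python
def rework_share(teams: int, engineers_per_team: int, working_days: int,
                 rework_engineers: int, rework_days: int) -> tuple[int, int, float]:
    """Return (full capacity, rework cost, rework share) in engineering working days."""
    full_capacity = teams * engineers_per_team * working_days
    rework_cost = rework_engineers * rework_days
    return full_capacity, rework_cost, rework_cost / full_capacity


# Figures from the article: 3 teams of 6, a 20-day cycle, 12 engineers for 6 days.
capacity, rework, share = rework_share(
    teams=3, engineers_per_team=6, working_days=20,
    rework_engineers=12, rework_days=6,
)

print(f"full capacity: {capacity} engineering working days")
print(f"rework: {rework} engineering working days ({share:.0%} of capacity)")
```

Changing `rework_days` or `rework_engineers` shows how quickly unplanned rework eats into the capacity planned for a release.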

Alice set her first goal: accelerate delivery by up to 20%. She knew they could do that if she found a way to reduce the time required to produce feedback and make it available to engineers while they were still in the code.

Alice asked the UAT team to specify acceptance scenarios for every feature as part of the work definition or story, so that a cross-functional feature team could implement automated tests for these scenarios alongside the code. The feedback loop then becomes almost instantaneous: if an acceptance test doesn’t pass, the engineer can address the defect immediately, while the change is still fresh.
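As a rough sketch of what this could look like, the Python example below encodes acceptance scenarios as data and runs them against the feature code. Everything in it is hypothetical and not taken from Alice's organization: the `apply_discount` feature, the discount code, and the scenario values are invented purely for illustration.

```python
# Hypothetical feature under development (an assumption, not from the article).
def apply_discount(order_total: float, code: str) -> float:
    """Return the order total after applying a discount code."""
    if code == "WELCOME10":
        return round(order_total * 0.90, 2)
    return order_total


# Acceptance scenarios as a UAT team might specify them in the story:
# (scenario name, order total, discount code, expected total).
ACCEPTANCE_SCENARIOS = [
    ("valid code gives 10% off", 200.00, "WELCOME10", 180.00),
    ("unknown code changes nothing", 200.00, "BOGUS", 200.00),
]


def run_acceptance_scenarios() -> list[str]:
    """Run every scenario and return the names of the ones that failed."""
    failures = []
    for name, total, code, expected in ACCEPTANCE_SCENARIOS:
        if apply_discount(total, code) != expected:
            failures.append(name)
    return failures


failed = run_acceptance_scenarios()
print("all scenarios pass" if not failed else f"failed: {failed}")
```

Run on every commit, a check like this tells the engineer within seconds whether a change breaks an agreed scenario, instead of a week later in UAT.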

Alice also investigated several other issues, known as noisy feedback, cascading feedback, and weak feedback. We will unpack these terms in the following stories.

To summarize this story, we would like to emphasize how important it is to think in terms of feedback loops when optimizing digital product delivery, and to understand that the cost of delay is not linear: the longer it takes to get feedback, the more difficult it is to address a defect, because the code base and its complexity grow with time.

To accelerate digital product delivery, leaders should strive to eliminate or mitigate backward cycles that generate unplanned work and consume planned capacity; instead, they should design processes in which work is done correctly the first time.