NVIDIA is bringing more power to deep learning with its latest advancements in artificial intelligence and high-performance computing. At this week’s GPU Technology Conference in Silicon Valley, NVIDIA announced its new Volta architecture and the NVIDIA Tesla V100 data center GPU, along with several other next-generation AI and computing products.

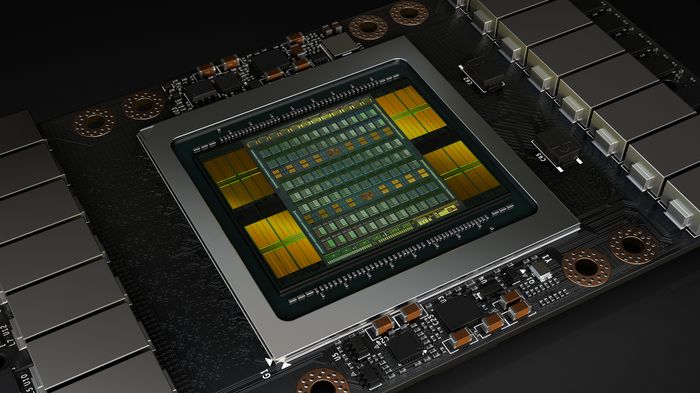

Volta is designed to bring speed and scalability to both AI training and inference, and the first Volta chip is built with 21 billion transistors. According to NVIDIA, it delivers deep learning performance equivalent to 100 CPUs, and it outperforms Pascal, the company’s current GPU architecture.

“Deep learning, a groundbreaking AI approach that creates computer software that learns, has insatiable demand for processing power. Thousands of NVIDIA engineers spent over three years crafting Volta to help meet this need, enabling the industry to realize AI’s life-changing potential,” said Jensen Huang, founder and chief executive officer of NVIDIA.

NVIDIA’s Tesla V100 data center GPU introduces Tensor Cores, processing units designed to accelerate AI workloads. According to NVIDIA, the Tesla V100 is equipped with 640 Tensor Cores, delivers 120 teraflops of deep learning performance, and is supported by NVIDIA’s CUDA, cuDNN and TensorRT software, which existing frameworks and applications can tap into for AI and research.
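To see roughly how 640 Tensor Cores add up to a headline figure of 120 teraflops, the short sketch below multiplies out peak throughput. It rests on two assumptions that are not stated in the announcement: that each Tensor Core performs one 4×4×4 matrix multiply-accumulate (64 fused multiply-adds) per clock, and that the V100 runs at a boost clock of about 1455 MHz. Treat it as back-of-the-envelope arithmetic, not an official specification.

```python
# Back-of-the-envelope peak Tensor Core throughput for Tesla V100.
# TENSOR_CORES comes from the announcement; the other figures are assumptions.
TENSOR_CORES = 640            # from the announcement
FMA_PER_CORE_PER_CLOCK = 64   # assumption: one 4x4x4 matrix multiply-accumulate per clock
FLOPS_PER_FMA = 2             # a fused multiply-add counts as a multiply plus an add
BOOST_CLOCK_HZ = 1.455e9      # assumption: approximate boost clock


def peak_tensor_tflops(cores, fma_per_clock, clock_hz):
    """Peak throughput in teraflops: cores x FMAs/clock x 2 flops x clock rate."""
    return cores * fma_per_clock * FLOPS_PER_FMA * clock_hz / 1e12


print(f"{peak_tensor_tflops(TENSOR_CORES, FMA_PER_CORE_PER_CLOCK, BOOST_CLOCK_HZ):.1f} TFLOPS")
# → 119.2 TFLOPS
```

Under these assumptions the result lands just under 120 teraflops, consistent with the rounded figure NVIDIA quoted. Because the estimate scales linearly with clock rate, a different assumed boost clock shifts the number proportionally.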

NVIDIA also announced a new lineup of NVIDIA DGX AI supercomputers, which use NVIDIA Tesla V100 data center GPUs and are based on the new Volta architecture. According to the company, a single system provides the performance of 800 CPUs.

For developers, NVIDIA announced a cloud-based platform that will give them access to a software suite for harnessing the power of AI. Whether they work from a PC, a DGX system, or the cloud, developers will be able to access the latest deep learning frameworks and the newest GPU computing resources.