Unsatisfied with the available solutions for connecting the analytics-generating power of their TensorFlow machine learning implementations with the scalable data computation and storage capabilities of their Apache Hadoop clusters, developers at LinkedIn took matters into their own hands and built this week’s highlighted project, TonY.

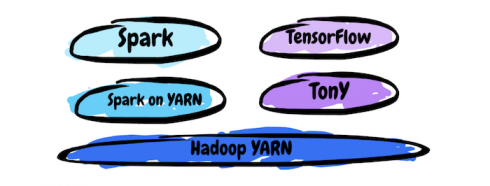

“TonY stands for TensorFlow on Hadoop YARN,” Jonathan Hung, an engineer at LinkedIn and lead on the project, explained. “At LinkedIn we have a few use cases for running TensorFlow, which is a machine learning library built by Google that lets you build complex models and extract some insights from your data. Since LinkedIn has a few hundred petabytes of data that we need to analyze, we need to run this machine learning library across clusters of machines — and we already had Hadoop running on our clusters.”

Hung and fellow engineers Keqiu Hu and Anthony Hsu wrote in the blog post announcing the open-source release of TonY that existing projects failed to meet the team’s needs. TensorFlow on Spark, for instance, lacked GPU scheduling and heterogeneous container scheduling, and any scheduling changes would have had to go through an additional Spark layer. TensorFlowOnYARN, on the other hand, lacked fault tolerance support and usability and is no longer maintained, a deal-breaker for Hung and his team.

“One of the philosophical reasons that we decided to build it this way was because we can run TonY directly on top of Hadoop, which means that as the Hadoop ecosystem evolves (and currently there are many developers maintaining Hadoop, so it’s a pretty active community), as well as updates to TensorFlow, which is also developing pretty rapidly, we wanted a layer in-between that we could evolve along with these two layers sitting on top of and under TonY,” Hung said.

The TonY team’s version of this technology features GPU scheduling via Hadoop, fine-grained resource requests, support for TensorBoard (a utility for optimizing TensorFlow programs, integrated via code the team contributed to YARN), and fault tolerance through APIs that save checkpoints. There are three primary components of TonY, the team wrote in its blog post: Client, ApplicationMaster, and TaskExecutor. The team breaks the process down like this:

- The user submits TensorFlow model training code, submission arguments, and their Python virtual environment (containing the TensorFlow dependency) to Client.

- Client sets up the ApplicationMaster (AM) and submits it to the YARN cluster.

- AM does resource negotiation with YARN’s Resource Manager based on the user’s resource requirements (number of parameter servers and workers, memory, and GPUs).

- Once AM receives allocations, it spawns TaskExecutors on the allocated nodes.

- TaskExecutors launch the user’s training code and wait for its completion.

- The user’s training code starts and TonY periodically heartbeats between TaskExecutors and AM to check liveness.
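
The allocation and heartbeat steps above can be illustrated with a small Python simulation. This is only a sketch of the idea, not TonY's code or API: the class names mirror the components named in the blog post, but `ResourceRequest`, `allocate`, `dead_tasks`, and the timeout value are all hypothetical, and the toy ApplicationMaster grants every request immediately instead of negotiating with YARN.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ResourceRequest:
    """Hypothetical per-task-type request: instances, memory, GPUs."""
    instances: int
    memory_mb: int
    gpus: int = 0


@dataclass
class TaskExecutor:
    """Stand-in for a TaskExecutor running in one allocated container."""
    task_id: str
    last_heartbeat: float = 0.0

    def heartbeat(self, now: float) -> None:
        # Record the time of the most recent liveness signal.
        self.last_heartbeat = now


@dataclass
class ApplicationMaster:
    """Toy AM: turns requests into executors and tracks their liveness."""
    heartbeat_timeout: float
    executors: dict = field(default_factory=dict)

    def allocate(self, requests: dict, now: float = 0.0) -> list:
        # A real AM negotiates with YARN's Resource Manager; this sketch
        # grants everything at once, one executor per requested instance.
        for job_name, req in requests.items():
            for index in range(req.instances):
                task_id = f"{job_name}:{index}"
                self.executors[task_id] = TaskExecutor(task_id, last_heartbeat=now)
        return sorted(self.executors)

    def dead_tasks(self, now: float) -> list:
        # A task counts as dead once its last heartbeat is older than the timeout.
        return sorted(
            task_id
            for task_id, executor in self.executors.items()
            if now - executor.last_heartbeat > self.heartbeat_timeout
        )


# One parameter server and two GPU workers (illustrative numbers only).
requests = {
    "ps": ResourceRequest(instances=1, memory_mb=2048),
    "worker": ResourceRequest(instances=2, memory_mb=4096, gpus=1),
}
am = ApplicationMaster(heartbeat_timeout=5.0)
task_ids = am.allocate(requests, now=0.0)

# Two of the three tasks report in at t=4; "worker:1" stays silent.
am.executors["ps:0"].heartbeat(now=4.0)
am.executors["worker:0"].heartbeat(now=4.0)
silent = am.dead_tasks(now=6.0)
```

In this toy run, `silent` contains only the worker that never sent a heartbeat. What a real system does next (restarting the task, or restoring the job from a saved checkpoint) is outside the scope of the sketch.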

The project is available through GitHub, and Hung says his team hopes to keep TonY up to date with the “latest and greatest” versions of TensorFlow and Hadoop YARN going forward.