Eventually, there will come a time when a consumer will look back and think of smartphones as a thing of the past, according to Kulveer Taggar, creator of the Android app Agent.

“If you think about vehicles and driving, there was the era of manual transmission, and then we switched to automatic transmission,” he said. “Cars were already very useful, but when they went to automatic they were just so much easier to drive and much less stressful; and so I think that is where we are with smartphones. We are in this sort of manual transmission era.”

Smartphones are very powerful devices, but users still have to wake them up to open applications, change settings and interact with them depending on what they are doing.

As technology advances, smartphones are starting to become smarter and more automatic, as Taggar put it. The next generation of smartphones will use GPS sensors, accelerometers, Bluetooth, thermometers, WiFi, gyroscopes, and social data to automatically glean the context of user interactions and take actions based on it, he said.

This is a development that has been researched since the 1990s.

“The early days of context-aware computing was thinking about sensing based on assigning a value to a physical phenomenon, such as a person’s location, the temperature of a location, etc.,” said Gregory Abowd, a computer scientist and one of the first academic researchers to get into the movement.

Today, the core of contextual computing is the idea that a device can collect information from a user’s world, and that information is then used to influence the behavior of a user’s computer program or application, according to Abowd.

Contextual computing is often associated with mobile devices because, Abowd said, such devices are a good proxy for the people who own and use them. “The most important piece of context is location, and location only became relevant when people had devices that moved a lot and that they used while moving,” he said.
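To make the role of location concrete, here is a minimal Python sketch of how an app might turn a raw GPS fix into a named place such as "home" or "work". It is an illustration only, not a description of any particular product: the `PLACES` table, its coordinates and radii, and the `place_for` helper are all hypothetical, and the distance is computed with the standard haversine formula.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


# Hypothetical places: name -> (latitude, longitude, radius in meters).
PLACES = {
    "home": (40.7128, -74.0060, 150),
    "work": (40.7580, -73.9855, 150),
}


def place_for(lat, lon, places=PLACES):
    """Return the first known place whose radius contains the fix, else None."""
    for name, (plat, plon, radius_m) in places.items():
        if haversine_m(lat, lon, plat, plon) <= radius_m:
            return name
    return None
```

Calling `place_for` with a fix a few meters from the "work" entry returns `"work"`, while a fix far from every entry returns `None`. A real app would presumably also smooth noisy readings and combine location with other signals.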

Contextual computing today

There are already some examples of contextual computing on the market today. Location services already exist on smartphones. Google’s intelligent personal assistant, Google Now, answers questions, makes recommendations, performs actions, predicts what a user will want, and shows advertisements based on a user’s search habits.

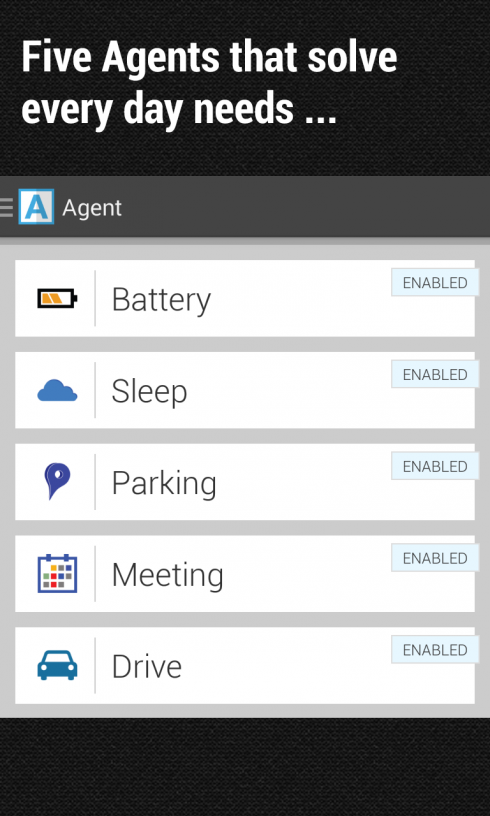

Taggar’s Agent app is also an example of contextual computing. It can detect when a phone’s battery is low and start preserving power, detect when a user is sleeping and automatically silence the phone, and recognize when a user is in a meeting or driving.
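Behaviors like these can be pictured as simple rules that map sensed context to actions. The Python sketch below is a hedged illustration of that idea, not Agent's actual implementation: the `Context` fields, the thresholds (15% battery, quiet hours from 23:00 to 07:00, 25 km/h for driving) and the action names are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Context:
    """A hypothetical snapshot of what the phone currently senses."""
    battery_pct: int    # remaining battery, 0-100
    hour: int           # local hour of day, 0-23
    in_meeting: bool    # e.g. inferred from a calendar entry
    speed_kmh: float    # e.g. estimated from GPS


def actions_for(ctx):
    """Map a context snapshot to a list of actions (assumed rules and thresholds)."""
    actions = []
    if ctx.battery_pct <= 15:
        actions.append("save_power")
    # Treat late-night hours as a stand-in for "the user is sleeping".
    asleep = ctx.hour >= 23 or ctx.hour < 7
    if asleep or ctx.in_meeting:
        actions.append("silence_ringer")
    if ctx.speed_kmh >= 25:
        actions.append("auto_reply_driving")
    return actions
```

For instance, `actions_for(Context(battery_pct=10, hour=14, in_meeting=False, speed_kmh=0.0))` yields only the power-saving action. Fixed thresholds like these are the simplest possible design; they also show how easily a rule can misfire, for example for a passenger on a train.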

“Our mission is to make people 10% more productive per day and save them some brain cells,” Taggar said.

While Agent already does all these things for users, Taggar said it is a .01 version of contextual computing. Over time, he said, smartphones will learn how users live their lives and will be able to alert them when someone important is nearby, recognize people by their voices, and know whether a user is at home, at work or in a car.

“At some point we will just assume and demand that phones already know how to do all of this stuff,” Taggar said.

There is a caveat to contextual computing, though. Contextual computing collects data about people and their habits, but that raises the usual questions of who has access to that data and how else that data will be used.

“As a society, we have to keep the dialog open about how we balance the benefits against the risks,” Abowd said. “The challenge is that we don’t always understand those risks up front; they sometimes emerge long after a technological capability is made available.”

In Agent, all the data is saved on the client side. In other services like Gmail and Google Search, where users are aware that their data is used to create targeted ads, the service is useful enough that people are willing to make the trade, according to Taggar.

For contextual computing to become standard, Abowd said three things need to happen: sensors need to be affordable and embedded into devices (or available in the digital world); there needs to be an increased computational ability to transform information into knowledge that can be delivered to computing devices; and there needs to be more evidence of useful applications of the information.

Taggar added that contextual computing must not get things wrong, and it has to be designed in a way that users can understand it.

“If we get something wrong, then it is going to be really, really annoying for users,” he said. “If something is very complicated and surprising, then no one is going to use these apps.”