At its annual Worldwide Developers Conference (WWDC), Apple unveiled Apple Intelligence, its new AI platform, which will be integrated across iPhone, iPad, and Mac.

“At Apple, it’s always been our goal to design powerful personal products that enrich people’s lives by enabling them to do the things that matter most as simply and as easily as possible,” said Tim Cook, CEO of Apple, during the livestream. “We’ve been using artificial intelligence and machine learning for years to help us further that goal. Recent developments in generative intelligence and large language models offer powerful capabilities that provide the opportunity to take the experience of using Apple products to new heights.”

According to Craig Federighi, senior vice president of software engineering at Apple, the goal of Apple Intelligence is to combine the power of generative models with personalization based on Apple’s knowledge of a user.

“It draws on your personal context, to give you intelligence that’s most helpful and relevant to you,” he said.

Apple Intelligence offers multimodal generative capabilities, meaning it can generate text and images. For instance, it can use information about your contacts to create personalized images, such as generating an image of your friend in front of a birthday cake to send in a message wishing them a happy birthday.

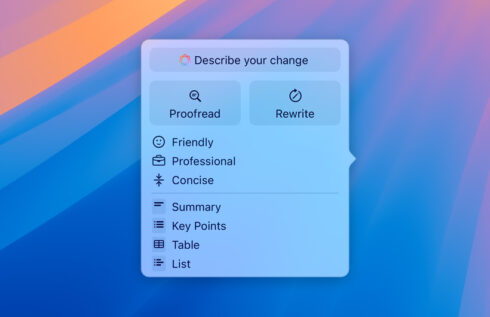

It can also help you improve your writing by proofreading or rewriting your text. When rewriting, you can ask it to make the text more friendly, professional, or concise.

These new generative capabilities are available across Apple apps like Mail, Notes, Safari, Pages, and Keynote. Third-party developers can also build capabilities into their apps by using the App Intents framework.

Beyond its generative capabilities, the platform can also carry out specific tasks for you. Examples Federighi gave of this in action include asking it to “Pull up the files that Joz shared with me last week,” “Show me all the photos of Mom, Olivia, and me,” or “Play the podcast that my wife sent the other day.”

During the event, Federighi repeatedly emphasized that what sets Apple Intelligence apart is its ability to draw on personal context. In another example he gave, an employee receives an email saying a meeting has been rescheduled to later in the afternoon, and he wants to know whether he can attend it and still make it to his daughter’s play that evening. Apple Intelligence draws on its knowledge of who his daughter is, the play details she sent a few days ago, and predicted traffic between the office and the theater at the time he’d be leaving to provide an answer.

“Understanding this kind of personal context is essential for delivering truly helpful intelligence, but it has to be done right,” he said. “You should not have to hand over all the details of your life to be warehoused and analyzed in someone’s AI cloud.”

According to Federighi, Apple Intelligence features on-device processing, “so that it’s aware of your personal data without collecting your personal data.” He explained that this is possible because of the advanced computing power of Apple silicon (the A17 Pro and the M family of chips).

Behind the scenes, it creates a semantic index about you on the device, and then consults that semantic index when a query is made.
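To make the idea of building an index locally and consulting it at query time more concrete, here is a toy sketch. It is not Apple's implementation, which was not detailed in the presentation. It swaps in simple word-overlap (cosine similarity over word counts) for a real semantic model, and every name in it (`ToyPersonalIndex`, `add`, `query`) is hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyPersonalIndex:
    """Hypothetical stand-in for an on-device index; data never leaves this object."""

    def __init__(self):
        self.items = []  # list of (label, word-count vector)

    def add(self, label, text):
        """Index one piece of personal content under a label."""
        self.items.append((label, Counter(tokenize(text))))

    def query(self, text):
        """Return the label of the best-matching item, or None if nothing overlaps."""
        q = Counter(tokenize(text))
        best_label, best_score = None, 0.0
        for label, vec in self.items:
            score = cosine(q, vec)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


index = ToyPersonalIndex()
index.add("email", "Meeting rescheduled to 4 pm this afternoon")
index.add("message", "Tickets for my play tonight at the theater at 6 pm")
print(index.query("when is the play at the theater"))
```

A production system would presumably use learned embeddings rather than word counts; the point here is only the two-step shape of indexing first and looking up later.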

In some cases, on-device processing may not be enough and data needs to be processed on a server. To allow for this without compromising privacy, Apple announced Private Cloud Compute. When a request is made, the device determines what can be handled on device and what needs to be sent to Private Cloud Compute. According to Apple, data sent to Private Cloud Compute is neither stored nor accessible to Apple; it is used only to complete the request.
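The routing decision described above can be pictured with a minimal sketch. Apple did not explain how the device decides, so the cost estimate, the budget threshold, and all names below (`Request`, `Route`, `choose_route`, `ON_DEVICE_BUDGET`) are assumptions made purely for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    ON_DEVICE = "on_device"
    PRIVATE_CLOUD = "private_cloud"


@dataclass(frozen=True)
class Request:
    prompt: str
    estimated_cost: int  # hypothetical unit of compute; not an Apple concept


# Made-up threshold for what the local model can handle.
ON_DEVICE_BUDGET = 100


def choose_route(request, budget=ON_DEVICE_BUDGET):
    """Keep the request local when it fits the budget; otherwise send it to the server."""
    if request.estimated_cost <= budget:
        return Route.ON_DEVICE
    return Route.PRIVATE_CLOUD


print(choose_route(Request("Proofread this sentence", estimated_cost=20)))
print(choose_route(Request("Summarize a very long document", estimated_cost=500)))
```

The sketch only captures the branching; the privacy guarantees Apple describes (no storage, no access by Apple) are properties of the server side and are not modeled here.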

“This sets a brand-new standard for privacy in AI and unlocks intelligence you can trust,” Federighi said.

Siri is being updated to take advantage of the capabilities of Apple Intelligence. The assistant now has better language understanding, so it can follow you even if you phrase a request unclearly or stumble over your words. It will also maintain conversational context, allowing you to follow up with additional questions or requests. Another update is the ability to type requests to Siri rather than saying them out loud.

Siri has also been visually updated; when active, a glowing ring will display around the edge of the screen.

And finally, ChatGPT has been integrated into Siri and Writing Tools. Users can control when ChatGPT is used and will be required to confirm that it’s okay to share information with ChatGPT.
