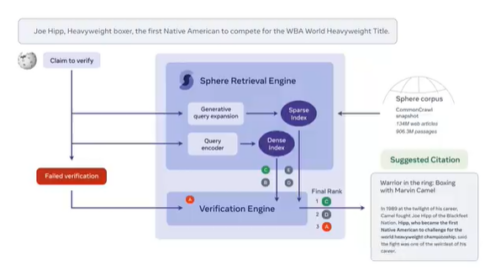

Researchers at Meta AI have developed a model that can help verify citations on Wikipedia. Wikipedia is often the first stop for someone looking for information online, but the validity of that information depends entirely on its sources.

According to the researchers, volunteers verify the citations, but they often have trouble keeping up with the more than 17,000 articles added to the site every month. Automated tools already check for statements that are missing citations entirely, but determining whether a cited source actually backs up a claim is more complex.

Meta AI’s new model can scan hundreds of thousands of citations at a time and alert volunteer editors when it comes across a questionable one. The model can also suggest better sources to replace a weak one.

The ultimate goal of this new model, according to the researchers, is to build a platform that helps Wikipedia editors fix citations quickly and at scale. This will help ensure that Wikipedia pages are as accurate as possible.

“Open source projects like these, which teach algorithms to understand dense material with an ever-higher degree of sophistication, help AI make sense of the real world. While we can’t yet design a computer system that has a human-level comprehension of language, our research creates smarter, more flexible algorithms. This improvement will only become more important as we rely on computers to interpret the surging volume of text citations generated each day,” the researchers wrote in a blog post.

They also noted that this model is the first step toward an editor that could verify documents in real time, suggesting auto-complete text based on documents found online and offering proofreading. These future models would understand multiple languages and be able to make use of various types of media, such as video, images, and data tables.