The world’s two biggest microprocessor makers are very different companies. While Intel has made a name for itself in the development world by offering cutting-edge compilers and threading tools, AMD has been further removed from the software development world. But with a new family of chips combining central processing units and graphics-processing units set to arrive next year, AMD is now preparing to bring developers into its warm embrace.

Manju Hegde, corporate vice president of AMD’s Fusion Experience Program, has been charged with the task of making AMD relevant to developers. Hegde recently joined AMD from nVidia, and his outsider’s perspective is evident when he speaks about the Fusion project.

At its core, Fusion is about preparing and training developers for the coming integration of AMD’s familiar x86/x64 CPUs with the GPUs it gained through its acquisition of ATI. What do you call the combo? AMD says it’s an APU: accelerated processing unit.

Hegde said that putting both processors on the same die cuts latency and gives developers who know how to tap it extra processing power.

“I think developers are going to be the critical constituency we’ll work with,” said Hegde. “Historically, AMD does not have a reputation of marketing to developers.

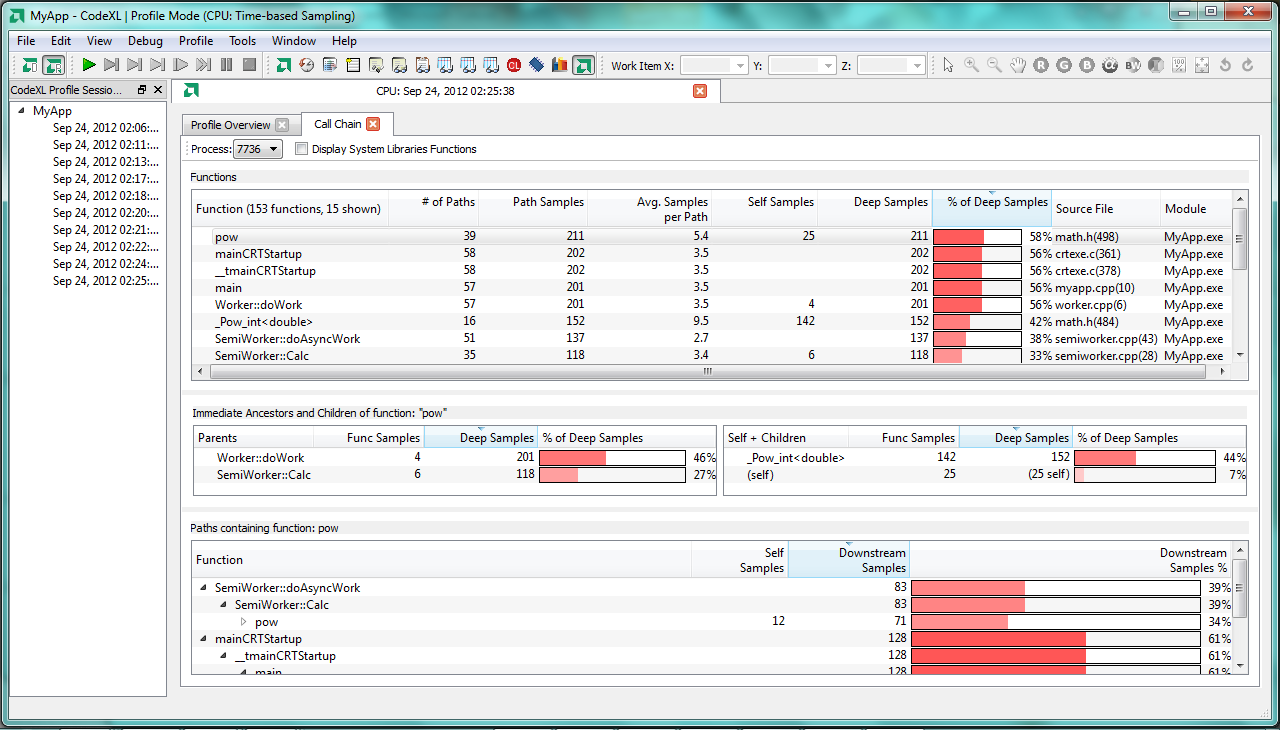

“People are used to the CPU style of programming, where everything is serial. Then you make the transition to parallel, and it’s like learning English, then learning Chinese. It’s very difficult. The challenge in GPU programming is how do you manage communication between two chips? There’s latency involved, there’s balancing involved. But now there’s extra power to use, so what we need to do is complement that with tools and developer education.”

To teach customers how to use the new APUs, AMD has built an investment fund and plans to offer educational resources and courses at colleges. AMD also released the ATI Stream SDK 2.2, which supports OpenCL.

The Open Computing Language (OpenCL), which makes it relatively easy for developers to write heterogeneous parallel applications that run across both CPUs and GPUs, plays a large part in AMD’s APU plans.

By contrast, Hegde said, nVidia’s competing CUDA parallel processing platform is proprietary, requiring developers to use nVidia’s own tools in order to run general-purpose computing applications on an nVidia GPU.

Hegde pledged that AMD will focus on open standards and tools, such as OpenCL. “The basic programming model, we haven’t changed,” he said.

“You program it exactly like you would program the GPU and CPU with OpenCL. During the optimization process, you typically do a lot of balancing when you take the kernels and transfer them to the GPU. Now it becomes a lot easier. We are developing extra tools that will show them this is easier.”
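The contrast Hegde draws between serial CPU code and kernels handed off to a GPU can be sketched in a few lines. This is a minimal illustration under stated assumptions: it emulates OpenCL’s data-parallel model in plain Python rather than calling any real OpenCL API, and the function names (`vec_add_serial`, `vec_add_kernel`, `dispatch`) are hypothetical. In real OpenCL, the kernel would be written in OpenCL C, read its index from `get_global_id(0)`, and be launched by host code through a call such as `clEnqueueNDRangeKernel`.

```python
# A minimal sketch of OpenCL's data-parallel model, emulated in plain Python.
# Nothing here calls a real OpenCL API; all function names are hypothetical.


def vec_add_serial(a, b):
    """CPU-style serial code: one loop walks the data in order."""
    out = []
    for x, y in zip(a, b):
        out.append(x + y)
    return out


def vec_add_kernel(gid, a, b, out):
    """Kernel-style code: handles exactly one element, chosen by its index.

    In OpenCL C the index would come from get_global_id(0); here the
    caller passes it in explicitly as `gid`.
    """
    out[gid] = a[gid] + b[gid]


def dispatch(kernel, global_size, *args):
    """Hypothetical stand-in for a runtime launching `global_size` work-items.

    A real OpenCL runtime would run these work-items concurrently on a CPU
    or GPU device; this loop runs them one after another only to show that
    each call is independent of the others.
    """
    for gid in range(global_size):
        kernel(gid, *args)


a = [1.0, 2.0, 3.0, 4.0]
b = [10.0, 20.0, 30.0, 40.0]
out = [0.0] * len(a)
dispatch(vec_add_kernel, len(a), a, b, out)
print(out)                          # [11.0, 22.0, 33.0, 44.0]
print(out == vec_add_serial(a, b))  # True
```

Because each kernel call touches only its own element, a runtime is free to run the work-items in any order or all at once, on either a CPU or a GPU.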

AMD is mirroring Intel in another way by planning to release its own optimizing compilers targeting the APUs, though the details of these compilers are not yet finalized. Hegde did say that AMD will use the open-source LLVM compiler project as the foundation of the tools the company uses internally, and he hinted that LLVM might also underpin the commercially released compilers.

This is a big shift for a company that, historically, hasn’t spoken to developers very often. “AMD is changing substantially,” said Hegde. “We are investing in compilers, tools, and developer education support and training at the university level. We’ve won the tech battle by putting all that functionality on a single chip. Therefore, it’s really important for us that the developer community is aware of this and the possibilities.”