At BZ Media’s Wearables TechCon in March, we saw many interesting devices, technologies and software platforms for quantifying the self, telling time, and handling all manner of other tasks, all from the user’s wrist. Then Apple joined the party, announcing details of its eponymous Watch while attendees at the show speculated about the future.

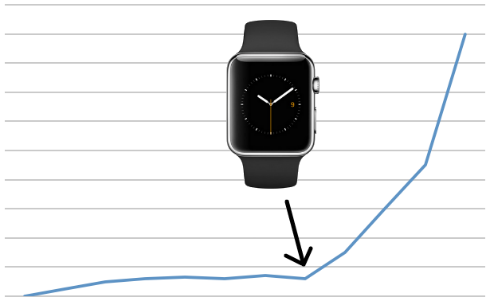

Everyone agreed that the Apple Watch validates the market for wearable devices. What many may have overlooked, however, is that Apple’s move into this space began in September 2014, when it first unveiled the Watch, and that announcement alone validated the market in time for Christmas.

As a result, wearables saw a big boost in sales over the holiday season. With many interesting takes on the idea, from Pebble to Martian, the market is already filling up with solutions of all shapes and sizes.

What does this mean for Apple? The company can certainly create a market just by offering a new product. But in this case, there is already a lot of competition, and that competition seems to be offering features comparable to the Apple Watch’s before Apple’s device has even launched.

Instead of differentiating on features, Apple is differentiating on materials and watch bands, with its gold Edition models priced at US$10,000 and up. That’s a real shame, because we think Apple could have differentiated in another way: by making the Apple Watch the easiest wearable platform to develop applications for. We know this was a long shot, but wearables development today is not exactly a painless, quick-moving process, and Apple could have brought its resources to bear on the developer experience for the betterment of us all.

Instead, we get Xcode and the standard Apple developer treatment: “Here’s how you do it, so do it this one way or you won’t ever get your thing working.” That doesn’t seem to matter much to Apple, though. It will continue to rely on the popularity of its devices to drive developer adoption, rather than letting developers, and the applications they create, drive the popularity of its platforms.