It’s been more than 45 years since the premiere of the original “Star Trek” TV series.

It was shot on 35mm film, just like a movie. Every day, right after lunch, the director and the producers would visit a small Desilu theater to look at the show’s “dailies.” They were looking at a pristine print—its first trip through a projector. It would have no dirt, no scratches, no worn sprocket holes to make the film jitter. It was the best possible image and it was stunning.

Those episodes were produced like movies. The editors physically cut the negatives into A- and B-rolls and then printed a master interpositive. So when it came time to transfer Star Trek to DVD, all the studio needed to do was strike a fresh print of each episode and scan it. The DVD releases were the best standard-definition videos of Star Trek you could ever hope to see on any set.

But high-definition TV has more than four times the video resolution of standard definition, and a much greater dynamic range for color and contrast. The difference can be as startling as your first exposure to IMAX.

Converting the original series episodes to Blu-ray discs meant that the studio had to go back to the original negatives, clean them, strike new prints, scan them in high-definition, and then digitally process the files to remove any dirt, scratches, judder, bounce or artifacts of transfer. Fortunately, the original negatives were in excellent condition, and the Blu-ray set of the 79 episodes of the original Star Trek series is easily the best presentation ever. On a big-screen set, the picture is equal to what we saw in the Desilu projection room, so many decades ago.

So successful was the original “Star Trek” that 20 years later, Paramount Pictures invested in a new series: “Star Trek: The Next Generation.” In those days, there was some awareness that high-definition television was eventually going to replace standard-definition, but aside from a few industry demonstrations of the technology, few producers understood just how profound that transition would be. And at that time, nobody knew what the eventual HDTV standard would require on the production side. The equipment hadn’t been built yet.

So “Star Trek: The Next Generation” was shot on film, just like the original series 20 years earlier. But… it was edited on tape. First, each shot was transferred to the highest-quality master tape, then dissolves, cuts, mattes, and many other effects were put in electronically. The original negatives were never cut and assembled into finished episodes. So unlike the prior series, there was never any master negative that could be used for high-definition video transfers.

The situation was ironic. The original series, existing as complete negatives, could be directly scanned in high-definition for Blu-ray distribution. The more efficiently produced “Next Generation” series was stuck in its original standard-definition format.


CBS Home Video, the current owner of the rights to “Star Trek” video distribution, had a difficult choice to make. They could electronically up-rez the master tapes to a kind of pseudo-HDTV, or they could invest in the complete process of making new transfers of the original film elements and recreating the original edits in high-definition. The second option would be very expensive. To their credit, the wise heads at CBS Home Video realized that up-rezzing the original video would produce a very unsatisfactory result: the video would not be true hi-def, and the fans would feel cheated and unhappy.

Instead, CBS chose to invest US$9 million to recreate the entire seven-year run of “Star Trek: The Next Generation.” Each original film element was meticulously scanned to a high-definition master; then, working from the original records, the shots were digitally edited to match. Where necessary, new effects were mastered. So that planet on the main viewscreen is no longer a blurry green circle; now it’s a recognizable sphere with continents and oceans. To make the Blu-ray release even more exciting, even more of a must-have set for fans, CBS arranged an extensive set of interviews with as many people connected to the series as possible (including me).

Now, what does all this have to do with computers and software? Everything.

Last month, while cleaning out old files, I found several 5.25-inch floppy disks and a couple of 3.5-inch disks that were also called floppies, even though they were housed in hard plastic shells. They look exactly like the icon that represents the Save function. (And why is that? Isn’t there a better way to represent Save now?)

I don’t know if there’s anything important on the 5.25-inch disks. I don’t have a device that can read them. But I did go foraging in the closet to dig out a 13-year-old ThinkPad 600X laptop, which has a peripheral for reading 3.5-inch floppies. There wasn’t anything important on those disks either, because I’ve been pretty good about keeping my files current, transferring everything to hard drives or DVDs as the technology advances. But what if I hadn’t had that old machine? And what if those disks contained the only remaining notes for that book I intend to write Real Soon Now?

I also have a lot of obsolete hardware on one of the shelves of the Batcave; in addition to the laptops, there’s a drawer full of peripherals and lots of obsolete storage. I have a handful of Sony Memory Sticks, everything from 32MB to 4GB. I have two or three CompactFlash cards ranging from 1GB to 8GB. I think there are a few Iomega Zip disks that got left behind when I sold the Zip drives. There’s a scattering of 2GB thumb drives, and about 10 hard drives of incrementally larger sizes. Half of them are IDE drives, so they can’t be swapped into a SATA dock. I’m pretty sure I’ve transferred the data off most of those units. But what if I haven’t?


That’s not the only problem. My first word-processing program was WordStar. Later on, I used Sprint from Borland (anybody remember that?). When Windows came along, I switched to Ami Pro, the best of all Windows word-processing programs, until Lotus bought it and turned it into Word Pro, and IBM bought Lotus and pretty much forgot about it. That’s when I gave up and switched to Microsoft Word.

Each one of those word processors had a different file format. Even though I’ve copied all those old files to a 3TB drive, I still can’t open many of them, because the formats are effectively dead and little current software can read them. Adding to the dilemma, the original programs that created those files can’t run on 64-bit Windows 7, which doesn’t support older 16-bit DOS and Windows applications.

Fortunately, I’m aware of the problem and I have a workaround: I still have a 32-bit machine running Windows XP, and I can transfer files to it via Dropbox. Or I can move the files on a thumb drive. But you can see where this is headed.

The USB standard lets me plug a lot of different things into a computer, so I should be able to plug in external drives and readers for another few generations. But where once we had only one standard USB connector, now we have multiple connectors: There are micro-connectors, mini-connectors, those square ones for printers, and even a few non-standard connectors for specific devices like the Zune. And USB is now up to 3.0, which means another whole set of connectors will likely climb out of the primordial ooze of the labs and evolve into an even more confusing ecology.

But let’s look to tomorrow. What happens when everything goes Wi-Fi in the next few years and we lose all those cables? What happens when new wireless standards appear? PurpleTooth and 6G and LTX-1138? What happens to the massive body of data users have built up over the years when support for the legacy technology disappears? Think of all those Betamax and VHS tapes you have: if you haven’t ripped them to disc yet, all those pictures of Granma and Fluffy and Cousin Ernie might someday be irretrievable.

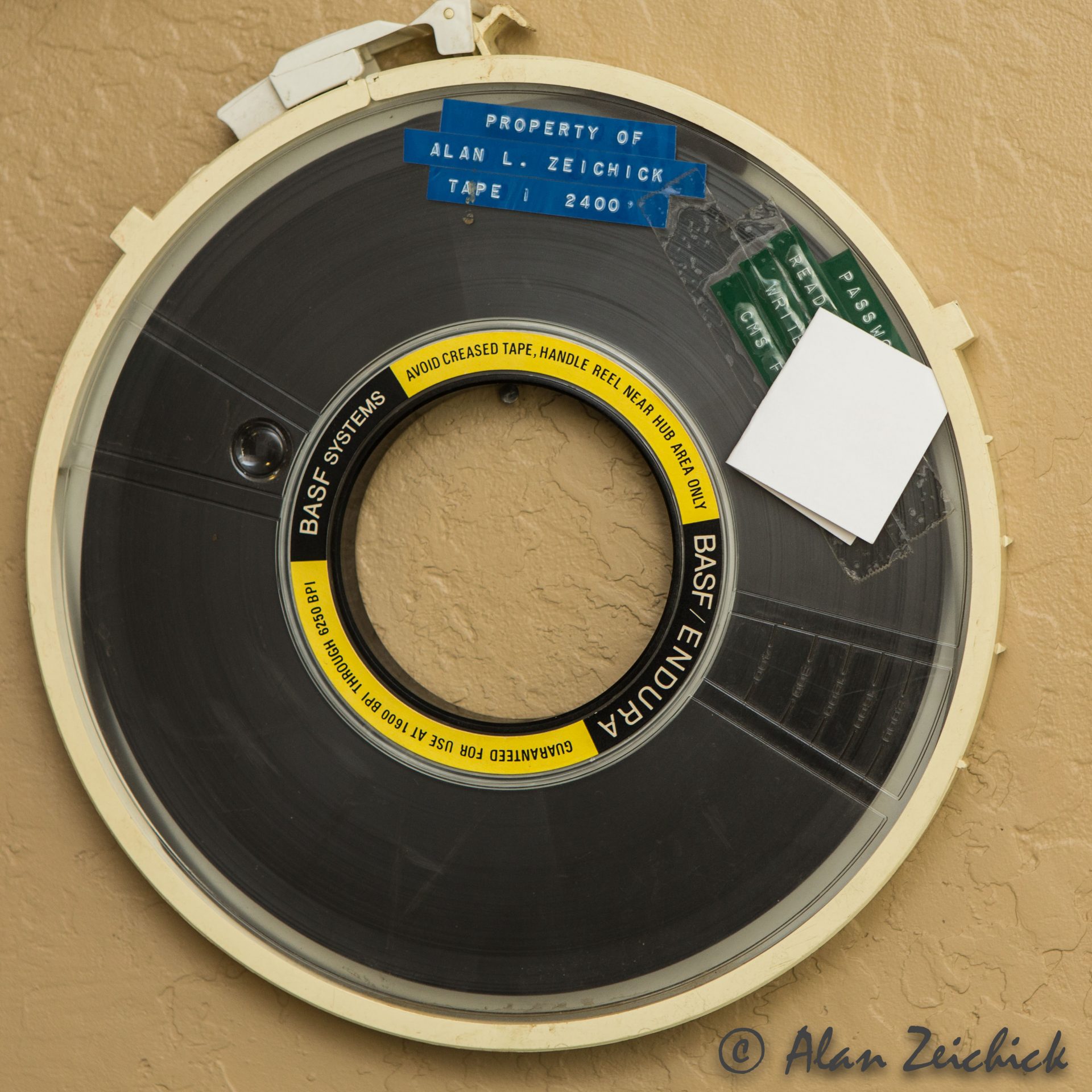

This is already a problem for industrial installations. The U.S. government has gone through several generations of upgrades for data storage, leaving petabytes of valuable information stored on tape reels with no machines capable of reading those spools of Mylar ribbon. NASA has warehouses of irretrievable data from the Gemini and Apollo missions because they no longer have the machines to read the tapes. We are losing access to our own history!

With “Star Trek: The Next Generation,” CBS is lucky: They still have the original film elements and they have the technology to recreate the original episodes. (There’s nothing like the profit motive to encourage the preservation of film and tape.)

But what happens to the rest of our digital history 20 or 30 or 50 years down the line, when hardware and software have evolved further? What happens when we reach the moment when file formats and tech standards have changed so dramatically that we look at a DVD or a flash drive the same way we look at an Edison cylinder, thinking how quaint it is and wondering what’s on it?

Without some serious consideration of how we’re going to access our legacy hardware and abandoned file formats, we may find ourselves cut off from our own past, not just as individuals, but as an entire society.

David Gerrold is the author of over 50 books, several hundred articles and columns, and over a dozen television episodes, including the famous “Star Trek” episode, “The Trouble with Tribbles.” He is also an authority on computer software and programming, and takes a broad view of the evolution of advanced technologies. Readers may remember Gerrold from the Computer Language Magazine forum on CompuServe, where he was a frequent and prolific contributor in the 1990s.