Data-diff is a new open-source project that was released by Datafold earlier this week. It is used for validating data across different databases.

It provides a simple CLI that can be used to set up monitoring and alerts, and it can bridge column types of different formats across databases.

According to the project’s GitHub page, data-diff can verify over 25 million rows in under 10 seconds and over 1 billion rows in 5 minutes, and it works on tables with billions of rows.

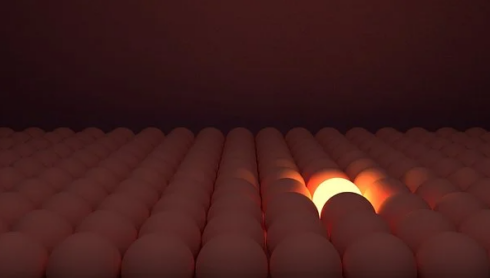

It works by splitting the table into smaller segments and then computing a checksum for each segment in both databases. If the checksums for a segment aren’t equal, it divides that segment into even smaller segments and checksums those, repeating until it finds the rows that differ.
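The divide-and-checksum idea can be illustrated with a minimal Python sketch. This is not data-diff's actual code: the two "tables" are plain in-memory dictionaries keyed by an integer ID, and the names `checksum`, `diff_keys`, `min_size`, and `factor` are hypothetical, chosen only for this illustration. A real tool would presumably compute the checksums inside each database rather than pulling rows out.

```python
import hashlib


def checksum(table, lo, hi):
    """Hash every (key, row) pair whose key lies in the range [lo, hi)."""
    digest = hashlib.md5()
    for key in sorted(k for k in table if lo <= k < hi):
        digest.update(f"{key}:{table[key]}\n".encode())
    return digest.hexdigest()


def diff_keys(source, target, lo, hi, min_size=4, factor=2):
    """Return the keys in [lo, hi) whose rows differ between the two tables.

    Hypothetical helper: segments with matching checksums are skipped,
    mismatching segments are split into `factor` smaller segments.
    """
    if checksum(source, lo, hi) == checksum(target, lo, hi):
        return []  # segment is identical on both sides, nothing to inspect
    if hi - lo <= min_size:
        # Segment is small enough: compare it row by row.
        return [k for k in range(lo, hi) if source.get(k) != target.get(k)]
    step = -(-(hi - lo) // factor)  # ceiling division
    differing = []
    for start in range(lo, hi, step):
        end = min(start + step, hi)
        differing += diff_keys(source, target, start, end, min_size, factor)
    return differing


# Toy data: 1,000 rows, with one changed row, one missing row, one edited row.
source = {i: f"row-{i}" for i in range(1000)}
target = dict(source)
target[137] = "changed"
del target[842]
target[999] = "row-999-edited"

result = diff_keys(source, target, 0, 1000)
print(result)
```

On this toy data the sketch reports keys 137, 842, and 999. Because most segments match on the first checksum, only the few segments that contain a difference are ever subdivided, which is why the approach stays cheap when the two tables are nearly identical.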

Possible use cases highlighted on the project page include verifying data migrations, verifying data pipelines, alerting and maintaining data integrity SLOs, debugging complex data pipelines, and making self-healing replications.

“data-diff fulfills a need that wasn’t previously being met,” said Gleb Mezhanskiy, founder and CEO of Datafold. “Every data-savvy business today replicates data between databases in some way, for example, to integrate all available data in a warehouse or data lake to leverage it for analytics and machine learning. Replicating data at scale is a complex and often error-prone process, and although multiple vendors and open source tools provide replication solutions, there was no tooling to validate the correctness of such replication. As a result, engineering teams resorted to manual one-off checks and tedious investigations of discrepancies, and data consumers couldn’t fully trust the data replicated from other systems.”

The project is available on GitHub.