Gradually, Microsoft has provided stepping stones to ease the lock-in factor represented by Windows Azure, and SQL Server Denali should be another big one. In fact, one of the marketing promises of SQL Server Denali is “Cloud On Your Terms, ” which I rather like. The SQL Server Developer Tools package, codenamed “Juneau,” is a big part of this promise, and it is also where most of the meat is for developers who are interested in Denali.

Recently Microsoft released the third Community Technology Preview (CTP) for SQL Server Denali. One of the most prominent advantages the company is touting is that it is “Cloud Ready.” Thanks to the new Juneau tools, SQL Server Denali is a must-have if cloud is part of your future plans, as it is the version of SQL Server that allows you to move seamlessly from your on-premise deployments to cloud. There are also a great number of enhancements to the business intelligence side of SQL Server Denali.

In recent years, Microsoft has changed the way that new software is eased into the market. It used to be that a small cadre of inner-circle experts who did not work for Microsoft—but did work closely with the company—would be the only ones to hear about the details of an upcoming release, until the public beta became available. Also in those days, there was usually only one beta that was public and it came very close to the final release. Now there are increasingly public CTPs and betas, so not only is there a lot of information about upcoming products available much earlier, but there are also a lot that changes on the road toward final release.

This third CTP is supposed to be feature-complete, based on all the announcements and hints of what features would make up Denali. If you have time to set up a virtual environment to play with the CTP, it is not a bad idea since there is a lot to see. The reason I say virtual environment is that it is typically not a good idea to run beta or CTP versions of software on any system you are not comfortable with rebuilding soon.

The SQL Server Denali CTPs are no different in this regard because their uninstallers thus far do not do such a good job of clearing things off the system, and often these remnants will block future installs and can be a nightmare to work out. This is just one of the rules you learn when playing with early bits of anything. That being said, it is always better to recon major releases ahead of your first big production installation, and virtualization is your friend.

Now that so many versions have seen the light of day, we are all wondering when the final release of SQL Server Denali will be and what its final name will be. (I expect that if we get a final release before the end of the year, it will be called SQL Server 2011, and of course a release after the first of the year will make it SQL Server 2012.) The answer should come—officially or not—by the end of this month’s Microsoft BUILD 2011 conference in Anaheim.

#!

What fixes have we seen so far?

Many technologies have worked to close the gaps that cause pain for developers, and speaking as a developer myself, I can say this is appreciated. For example, the introduction of Web Services in the first .NET Framework helped make integration between applications so much easier. Now one of the biggest pain points of development is the isolation, or perceived isolation, between the developer and the back-end data. Without that data and the ability to manipulate it, most projects are a lost cause.

The Entity Framework, which I covered last month, tries to smooth over this pain point, and to some extent so do the SQL Server Developer Tools (they even work together some as you will see below). The developer tool kit does this by bringing database tasks formerly only available in SQL Server Management Studio into Visual Studio. While this may very well drive the database administrators to distraction, it is a huge improvement for those of us that spend a lot of time in both SQL Server Management Studio and Visual Studio.

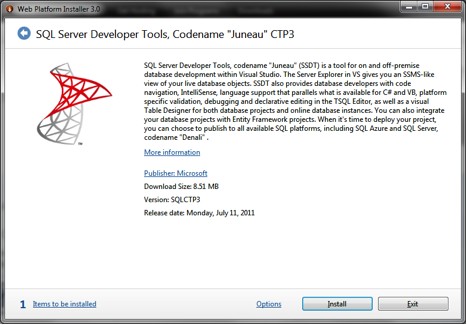

The SQL Server Developer Tools package is available via the Microsoft Web Platform Installer, and it has a bunch of dependencies, including the .NET Framework 4.0 and Visual Studio 2010 SP1. If you kick off the Web Platform Installer and double-click on the SQL Server Developer Tools, you will see the description as shown below. I found this to be a very good description of what Juneau has to offer. The key line is “The Server Explorer in VS gives you an SSMS-like view of your live database objects.” Imaging taking all the best aspects of Visual Studio 2010 and SQL Server Management Studio and putting them into one developer-friendly package.

In this case that means augmenting Visual Studio via Server Explorer. For example, we get multiple-screen support, allowing you to see so much more than in the past and organize tasks that without it tend to be much harder. This was huge when Visual Studio 2010 brought us this ability, but now SQL Server enables it in both the SQL Server Developer Tools and the new SQL Server Management Studio. Other really overdue amenities that C# and Visual Basic.NET developers take for granted are IntelliSense support, code snippets, zoom in the query editor window, debugging, and integration with Entity Framework.

All of this rapid progress is in large part due to the fact that SQL Server Management Studio is built upon the Visual Studio Isolated Shell, and I assume the Juneau tools are as well. This is another clear indication that a major unstated goal of SQL Server Denali is to close down the pain points for developers when working with SQL Server. A possible unintended consequence is that you can now use the built-in extensibility to create add-ins or plug-ins for SQL Server Management Studio.

The official word from Microsoft, however is that you should not. Installations with extensions present are specifically not supported. I have not seen any indication that this will change in future versions, though I do hope that pressure from the community and realization of what mind-blowing extensions they could make would possibly cause Microsoft to reverse that position eventually.

A new capability with big potential for increasing developer productivity is Project-Oriented Offline Database Development. To understand where this will be useful, let us assume that we have just deployed a first version of a production system with a SQL Server back end hosted on premises and developed in Visual Studio. The next phase of the project is ambitious (they always seem to be, don’t they?) and will require reorganizations of existing data structures, additional data structures, and many other potential changes to the architecture that pose challenges, including a desire to transition to the cloud.

With Project-Oriented Offline Database Development, a development database can be copied from the production database, and the developers can then create an offline database project against which they can make the changes. Thanks to the fact that Juneau delivers much of the SQL Server Management Studio’s capabilities in the Server Explorer of Visual Studio 2010, and the support for SQL CLR has been evolving steadily over several versions of SQL Server, the developers will likely be able to work much more independently of the DBAs, assuming that is allowed by corporate policy.

Model-based development

This brings together Transact-SQL (T-SQL), SQL CLR, and your C# or Visual Basic.NET work into one tool, namely Visual Studio. This is supported by what Leonard Lobel referred to in his blog as a “Model-Based Development.” He is one of the people who have been digging most deeply into the changes Juneau will wreak on programming SQL Server primarily because he is preparing to update his book, “Programming Microsoft SQL Server 2012.”

I talked to him about his experience with Juneau, and as one might expect, we agreed on the things we had both delved into for our various purposes. According to Lobel, the model-based approach is a key concept in SQL Server Developer Tools as it provides for “an in-memory representation of what a database looks like, and all the SSDT tools (designers, validations, IntelliSense, schema compare, etc.) operate on that model.”

Once the system in our hypothetical example has finished with the database project, it can be tested, and then once all issues are resolved, someone with the proper permissions and access can publish it to the production environment. The finished database does not even have to be published to an on-premise server.

This is where flexibility comes in, because if the new version needs the scale provided by Windows Azure and SQL Azure, it can be deployed there. This serves Microsoft’s needs well by breaking down the barriers to getting projects that are currently hosted by organizations on its own servers onto Azure with the minimum of churn.

If I recall correctly, this is how it felt when websites first started being hosted at hosting providers. The tools of the second generation allowed publishing to wherever you wanted, and it did not feel as drastic a step as it had just a few short months before.

Another project type Juneau offers—Database Project—opens up interesting possibilities for developers. This means that all sorts of operations can be carried out against the database using fairly straightforward text analysis instead of complicated SQL Server integration (which is likely not supported as discussed below). Snapshots of the Database Project exemplify the advantage of this approach. Taking a snapshot of the database allows you to implement versioning at the database level. The Database Project type is a good place to leverage the visual table designer provided by Juneau.

One tool in particular I expect to use often is Schema Compare, which, as the name implies, allows you to see what has changed since the schema has been imported into your project. Once the differences are found, SQL Server Data Tools can generate scripts that will not only put the target database into sync, schema-wise, with the project database, but more importantly it will advise on the potential for data loss when these changes are made.

In my consulting and software work, I often work with large SQL Server databases that are on premise at the customer site. I envision using Schema Compare to validate that a client has not removed a key index, resulting in a significant performance hit. In fact this happens more often than you might think, as novice DBAs sometimes drop things in the name of maintenance and then neglect to put them back.

SQL Server Denali, and more specifically Juneau, answer the lingering question regarding the Database Edition of Visual Studio. Though Visual Studio 2010 Team Suite Edition does include the Database Professional role along with all the others, there has not been much word on where it would go in the future. Widely referred to as Data Dude (a name I hate to this day), Visual Studio Database Professional Edition was too focused on database developers rather than on developers who use databases. The first group is very small in comparison to that second one. So it is no surprise that Visual Studio Database Pro projects will open up in Juneau. I think it is safe to say that its functionality has found a home where it will be useful.

#!

Denali’s data quality

A new feature included in SQL Server Denali that will likely escape the attention of many developers is the new Data Quality Services. In the summer of 2008, Microsoft bought the Israeli company Zoomix, which specialized in data management. That product has since been rolled into the Data Quality Services, which allow you to clean and improve the quality of data in very useful ways.

The process of getting it going is a bit less obvious than the other features and components discussed elsewhere in this article. For starters, you have to pick Data Quality Services during the installation process, but then to actually get it working in CTP3, you have to run the DQSInstaller.exe, and then you can use the Data Quality Client. The client then will let you create knowledge bases about your target data.

For example, if you have client registration data collected from a marketing effort, you will want to clear out duplicates and get rid of the bogus data. We have all filled out a form before with an address of 123 Main Street in Anytown, USA, but some people are more inventive and these are the records we need to clear out. Sometimes real money is on the line for this kind of mission, and while it is not something I would describe as a developer task, it will often fall on their shoulders.

Once the knowledge bases are established, a new Data Quality Project is created and it will use a knowledge base. The start button kicks off the cleaning process, and the results allow you to approve or reject the records that need attention, along with a confidence appraisal for the suggestion. If you make progress, you can then export the data back out to a new table, and repeat as needed. How you approach this, as well as the size and quality of the data set, will dictate how long and bothersome this process becomes.

The devil is in the details, and the details are in the order of operations. If you start with items that are likely to eliminate the most records, then even if you have limited time to perform runs against the data, you will still end up with the best result. Personally, I expect to be using Data Quality Services on a regular basis.

In previous versions of SQL Server, Microsoft included Business Intelligence Developer Studio as a development platform for, but it was also used for Integration Services projects and developing SQL Server Reporting Services reports.

Juneau is the replacement for this odd-duck development tool. This means that all the various development tools for SQL Server that have sprung up over the years are finally being unified in a way that is not only developer-friendly, but is also developer-empowering.

Business intelligence is getting a lot of attention lately as it is an area where there are still lots of opportunities for innovation, and Microsoft has made many strategic acquisitions in this space over the last few years. Among the innovations in SQL Server Denali that will impact anyone developing with SQL Server Analysis Services are Columnstore Indexes and the rest of the Apollo columnar data storage technology.

The Columnstore index, in combination with a vector-based query execution capability, promises to make some of the largest workloads run faster by one or more orders of magnitude. The trick here is that unlike normal SQL data storage, where everything is stored in row order, the Columnstore index stores a column’s data, which means the data pages holding the Columnstore are packed. This means that when traversing a Columnstore, the system can find things much faster because all the data read is relevant.

This makes it a vast improvement over row-based storage. A Columnstore on a table effectively makes it read only, as inserts, updates and deletes are not allowed, which is why it is probably just right for data warehousing but not so much for relational applications. The underlying technology that Microsoft is using for Apollo is the same as what is used in PowerPivot, and that has been splashing around all the demos at the major conferences.

What are the downsides?

A late-model CTP like this one is bound to have a few warts, but surprisingly they are hard to find, and mentioning them really feels like being a mite picky, especially since they very well might disappear by the time the full SQL Server releases.

But one aspect that seems to still be a bit rough is the process of adding a foreign key through Juneau. Some other items for Juneau include reference data not currently able to be saved with the data model; database diagrams are not being supported; and no Query Designer is available as of CTP3. These features can be put in the “nice to have” column, and there is an assumption that these are some things that could come in a point release, though I have not heard anything to that effect, and neither has Lobel.

Finally, I am not sure if this is really something that will—or should—be redressed down the line, but Columnstores have limits on the data types allowed, and on first glance they seem a bit random rather than based on some hard limit that should exist on this kind of technology.

Overall, I am looking forward to SQL Server Denali. Many of the things that steal my time while developing are addressed with this release, and other things, like Quality Data Services, are just a bonus from my perspective.

Microsoft is doing a good job of smoothing over the pain points for developers in this coming release of SQL Server. It is also helping itself mightily as these same pain points are also obstacles to Windows Azure adoption. Because of this, there is much more in this coming version of SQL Server than there has been in the last couple of versions specifically for developers.

My advice is to sit up and take notice now so you are not forced to play catch-up after release, whenever that turns out to be. As things progress toward the release of SQL Server Denali and SQL Server Developer Tools, Lobel’s blog is a good place to keep track of developments.