In a Tricentis-commissioned report from Forrester released in July, “The Definitive Software Quality Metrics For Agile+DevOps,” the researchers found that the companies that have seen the most success from Agile and DevOps adoption share a common trait: they have made another operational transition. These companies no longer treat “counting” metrics (for instance, whether tests have been run an adequate number of times) as key indicators of success. Instead, they rely on “contextual” metrics (for instance, whether the software meets all of the requirements of the user experience), which they regard as better indicators of success.

This conclusion rests on the report’s finding that, of the 603 enterprise Agile and DevOps specialists surveyed, those it classifies as “DevOps leaders” are significantly better at measuring success and are much further along in their pursuit of end-to-end automation, a key ingredient in meeting the rapid development and delivery cycles expected of modern software.

Somewhere along the way, a bottleneck is holding some businesses back while the DevOps leaders speed ahead, and 59 percent of respondents placed the blame squarely on manual testing.

This focus on automated end-to-end functional testing is one of five best practices that, according to the report’s findings, are almost universally employed by DevOps leaders. The other four are:

- Properly allocated testing budgets and a focus on upgrading testing skills

- The implementation of continuous testing “to meet the demands of release frequency and support continuous delivery”

- The inclusion of testers as part of integrated delivery teams

- A shift left to implement testing earlier in development

But businesses shouldn’t rush to implement test automation across the board; doing so carries some risks.

“Automating the software development life cycle is an imperative for accelerating the speed and frequency of releases,” Forrester wrote in its report. “However, without an accurate way to measure and track quality throughout the software development life cycle, automating the delivery pipeline could increase the risk of delivering more defects into production. And if organizations cannot accurately measure business risk, then automating development and testing practices can become a huge danger to the business.”

All businesses, even DevOps leaders, should pay heed. According to Forrester, 80 percent of respondents believe that they “always or often deliver customer-facing products that are within acceptable business risk.” This is despite the fact that only 29 percent of DevOps “laggards,” and only 50 percent of leaders, consider delivering software within acceptable business risk to be “very important.” Forrester suggests that the 80 percent figure likely reflects widespread overestimation.

“Given that most firms, even the ones following continuous testing best practices, admit that their software testing processes have risk gaps and do not always give accurate measures of business risk, it stands to reason that the 80% who say they always or often deliver within acceptable risk may be overestimating their capabilities,” Forrester wrote in the report.

Diego Lo Giudice, vice president and principal analyst at Forrester and the lead on the report, found this disparity unusual.

“I think it’s more intentional than truth — they get it that business risk is of paramount importance and have the illusion of addressing it,” Lo Giudice said. “It’s a misinterpretation of what real business risk is perhaps? I think it’s hard to keep testing and quality focus on business risk.”

Wayne Ariola, chief marketing officer at Tricentis, was a little more sure of where this disconnect lay.

“This is your classic geek gap,” Ariola said. “Business leaders assume that the definition of risk is aligned to a business-oriented definition of risk, but the technical team has it aligned to a very different definition of risk. This mismatch is your primary cause of overconfidence. For example, assume a tester saw that 100 percent of the test suite ran with an 80 percent pass rate. This gives you no indication of the risk associated with the release. The 20 percent that failed could be an absolutely critical functionality, like security authentication, or it could be trivial, like a UI customization option that’s rarely used.”
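Ariola’s example can be made concrete with a small calculation. The sketch below is illustrative only: it assumes each test carries a hypothetical business-risk weight (a 1 to 10 score), and the test names, weights and the `risk_coverage` helper are inventions for this example, not a metric defined by Forrester or Tricentis. Two runs both execute the full suite with an 80 percent pass rate, yet the share of business risk covered by passing tests differs sharply.

```python
from dataclasses import dataclass


@dataclass
class CaseResult:
    name: str
    passed: bool
    # Hypothetical 1-10 score for how much business damage a defect here would cause.
    risk_weight: int


def pass_rate(results: list[CaseResult]) -> float:
    """Counting metric: every test counts the same."""
    return sum(r.passed for r in results) / len(results)


def risk_coverage(results: list[CaseResult]) -> float:
    """Contextual metric: share of total business risk covered by passing tests."""
    total = sum(r.risk_weight for r in results)
    covered = sum(r.risk_weight for r in results if r.passed)
    return covered / total


def build_run(failing: set[str]) -> list[CaseResult]:
    """Ten hypothetical tests: two authentication checks and eight UI options."""
    weights = {"auth_login": 10, "auth_token_refresh": 10}
    weights.update({f"ui_option_{i}": 1 for i in range(8)})
    return [CaseResult(name, name not in failing, w) for name, w in weights.items()]


# Both runs execute 100 percent of the suite with an 80 percent pass rate.
run_a = build_run({"auth_login", "auth_token_refresh"})  # critical failures
run_b = build_run({"ui_option_6", "ui_option_7"})        # trivial failures

for label, run in (("A", run_a), ("B", run_b)):
    print(f"run {label}: pass rate {pass_rate(run):.0%}, "
          f"risk coverage {risk_coverage(run):.0%}")
```

Run A prints a pass rate of 80% with risk coverage of 29%, while run B prints 80% and 93%: the counting metric is identical, but only the risk-weighted view distinguishes a failed login from an unused UI option.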

Avoiding this pitfall requires knowing which metrics to watch in order to judge whether a process is ready for automation. But there is another gap: less mature DevOps and Agile initiatives keep tracking metrics that once meant a lot but have very little value once a digital transformation is fully underway.

“‘Counting’ metrics lost their value because the question changed for DevOps,” Ariola said. “It was no longer about how much testing you completed in the allotted time. The focus shifted to whether you could release and what business risks were associated with the release. If you can answer this question with five tests, that’s actually better than answering it with 5,000 tests that aren’t closely aligned with business risk. Count doesn’t matter — what’s important is — one, the ability to assess risk and two, the ability to make actionable decisions based on test results.”

Forrester’s Lo Giudice also elaborated on what “outdated” metrics were and where focus should be shifted.

“Measuring productivity is one example,” Lo Giudice said. “Teams focus on the amount of output, or read code, not necessarily valuable outcomes for the business or the right code. Agile focuses on both building the right things and [evaluating] things right, so value metrics become more important than productivity of teams, and so related productivity metrics lose relevance. Quality, however, remains always of paramount importance.”

Quality can severely slip if proper metrics aren’t tracked and businesses continue operating on old metrics while trying to implement modern automated testing initiatives. Businesses need to be aware of those risks, Tricentis’ Ariola explained.

“We’ve seen many organizations that are severely over-testing,” Ariola said. “They’re running massive test suites against minor changes—self-inflicting delays for no good reason. To start, organizations must take a step back and really assess the risks associated with each component of their application set. Once risk is better understood, you can identify practices that will mitigate those risks much earlier in the process. This is imperative for speed and accuracy.”
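The alternative Ariola describes, assessing each component’s risk and testing in proportion to what actually changed, can be sketched as change-based test selection. This is a minimal sketch under stated assumptions: each component has already been given a hypothetical risk score and a hand-written list of covering tests, and the component names, scores, test names and the `select_tests` helper are all illustrative rather than anything the report or Tricentis prescribes.

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    name: str
    risk: int  # hypothetical 1-10 business-risk score from a risk assessment
    tests: list[str] = field(default_factory=list)


def select_tests(components: dict[str, Component], changed: set[str]) -> list[str]:
    """Pick only the tests covering changed components, riskiest component first."""
    touched = [components[name] for name in changed if name in components]
    # Sort by descending risk; break ties by name so the order is deterministic.
    touched.sort(key=lambda c: (-c.risk, c.name))
    selected: list[str] = []
    for comp in touched:
        for test in comp.tests:
            if test not in selected:  # a test may cover several components
                selected.append(test)
    return selected


components = {
    "auth": Component("auth", 10, ["test_login", "test_token_refresh", "test_mfa"]),
    "checkout": Component("checkout", 8, ["test_payment", "test_cart_total"]),
    "ui_theme": Component("ui_theme", 2, ["test_dark_mode"]),
}
full_suite = [t for c in components.values() for t in c.tests]

# A minor UI change runs one test instead of the whole suite.
print(select_tests(components, {"ui_theme"}))
# A change touching checkout and the theme runs the riskier checkout tests first.
print(select_tests(components, {"ui_theme", "checkout"}))
print(f"full suite: {len(full_suite)} tests")
```

The minor UI change selects only `test_dark_mode` rather than all six tests. In practice the component-to-test mapping would more likely come from coverage or dependency data than from a hand-written table; the hard-coded mapping here just keeps the idea visible.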

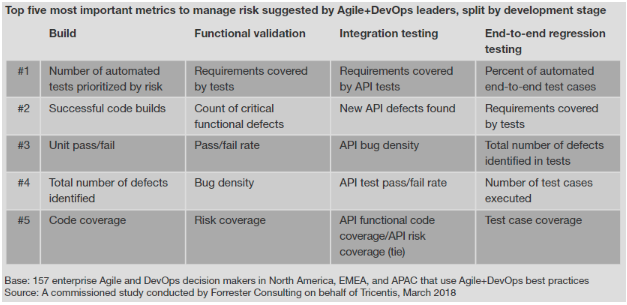

In its report, Forrester lists the metrics and factors that successful DevOps leaders rate highest in importance. The figure above, taken from the report, breaks down the stages of development and shows which factors these leaders considered a priority during each stage.

Productivity, while still an obvious goal for a successful business, should not be the primary focus of Agile and DevOps specialists. As Ariola put it, once risk is better understood, organizations can identify practices that mitigate those risks much earlier in the process, and productivity will then follow.

“In the past, when software testing was a timeboxed activity at the end of the cycle, we focused on answering the question, ‘Are we done testing?’” Ariola said. “When this was the primary question, counting metrics associated with the number of tests run, incomplete tests, passed tests, failed tests, etc. drove the process and influenced the release decision. As you can imagine, these metrics are highly ineffective in understanding the actual quality of a release. In today’s world, we have to ask a different question: ‘Does the release have an acceptable level of risk?’”