Google’s annual developer conference, Google I/O ’18, kicked off today with a focus on bringing AI-powered features across the Google platform. The company also announced the beta release of Android P as well as a cross-platform collection of APIs for deploying AI-based features within developers’ apps.

“We’re at an important inflection point in computing, and it’s exciting to be driving technology forward. It’s clear that technology can be a positive force and improve the quality of life for billions of people around the world. But it’s equally clear that we can’t just be wide-eyed about what we create,” Sundar Pichai, CEO of Google, wrote in a post.

Google announced plans to make Google Assistant more visually and naturally helpful. With progress in language understanding, Google Assistant will soon be able to hold a natural back-and-forth conversation, eliminating the need to repeat “Hey Google” with each follow-up request. In addition, Assistant is getting new experiences for Smart Displays and coming to Google Maps for navigation. “Someday soon, your Google Assistant might be able to help with tasks that still require a phone call, like booking a haircut or verifying a store’s holiday hours,” Pichai wrote.

Google Maps was updated with new AI capabilities that enable the app to tell users whether the business they are looking for is open, how busy it is, and how difficult parking is. “Advances in computing are helping us solve complex problems and deliver valuable time back to our users—which has been a big goal of ours from the beginning,” Pichai wrote.

Android P was revealed with new AI features designed to make it smarter and easier to use. The upcoming operating system gains a slew of new features designed to drastically improve battery life and make system navigation snappier, along with more useful Do Not Disturb and brightness management intended to improve users’ “digital well-being.”

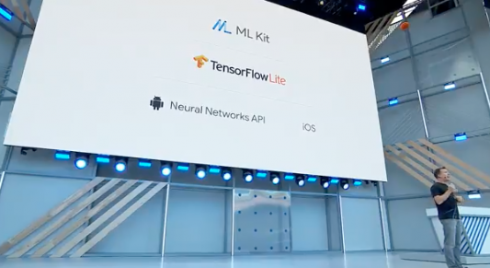

To help make the entire device experience smarter beyond just the operating system, the company announced a new set of cross-platform APIs for developers. ML Kit provides on-device APIs for text recognition, face detection, image labeling, barcode scanning, landmark detection and smart reply as well as access to Google’s cloud-based machine learning technology.

“Architecturally, you can think of ML Kit as providing ready-to-use models, built on TensorFlow Lite and optimized for mobile,” said keynote speaker Dave Burke, Android’s VP of engineering.

Also coming with Android P are “App Actions” and “Slices,” which can streamline users’ experiences by surfacing predictive shortcuts or app UI elements for actions they’re likely to attempt.

“To support App Actions, developers just need to add an actions.xml file to their app and then actions surface not just in the launcher, but in smart text selection, Play Store, Google Search and the Assistant,” Burke said.

To illustrate App Actions, Burke used the example of buying movie tickets. When a user simply begins typing the name of a film into Google Search, Android P displays a button that takes the user to the ticket-purchasing tab of the Fandango app.

Slices are a bit more complex.

“Actions are a simple, but powerful idea for providing deep links into an app given your context. Even more powerful is bringing part of the app UI to the user right there and then,” Burke explained. “We call this feature ‘Slices.’ Slices are a new API for developers to define interactive snippets of their app UI that can be surfaced in different places in the OS. In Android P, we’re laying the groundwork by showing Slices first in Search.”

Burke illustrated with the example of typing “Lyft” into Google Search, where a Slice displayed the option to instantly request a ride home from the ride-sharing service using its app’s own UI, pared down to a Slice.

“With Android P, we’ve put a personal emphasis on simplicity by addressing many pain points where we thought, and you told us, the experience was more complicated than it ought to be,” Burke said. “And you’ll find these improvements on any device that adopts Google’s version of the Android UI, such as Google Pixel and Android One devices.”

Other announcements included a new Google Photos partner program for developers and the availability of Google Lens directly in the camera app on supported devices.