NVIDIA’s new open-source toolkit, NeMo Guardrails, enables developers to add topical, safety, and security features to AI chatbots and other generative AI applications built with large language models.

The software includes all the code, examples, and documentation businesses need to add safety to AI apps that generate text. NVIDIA said it’s releasing the project because many industries are adopting large language models (LLMs), the powerful engines behind these AI apps.

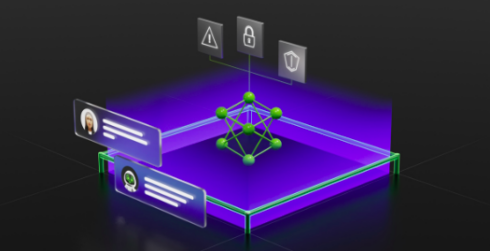

Users can set up three kinds of boundaries with NeMo Guardrails: topical, safety, and security.

Topical guardrails prevent apps from veering into unwanted areas. For example, they can keep customer service assistants from answering questions about the weather.

Safety guardrails ensure apps respond with accurate, appropriate information. They can filter out unwanted language and ensure that only credible sources are referenced.

Security guardrails restrict apps to connecting only with external third-party applications known to be safe.
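To make the three boundary types concrete, here is a minimal sketch in plain Python. It does not use the NeMo Guardrails API, and the real toolkit may configure these checks quite differently. Every name and value in it (`BLOCKED_TOPICS`, `BANNED_WORDS`, `ALLOWED_HOSTS`, and the three functions) is a hypothetical stand-in chosen for illustration, and the keyword matching is deliberately naive.

```python
from urllib.parse import urlparse

# Hypothetical policy values, chosen only for illustration.
BLOCKED_TOPICS = {"weather": ("weather", "forecast", "temperature")}
BANNED_WORDS = {"darn"}
ALLOWED_HOSTS = {"api.example.com"}


def topical_guardrail(user_message: str) -> bool:
    """Return True if the message stays clear of every blocked topic."""
    text = user_message.lower()
    return not any(
        keyword in text
        for keywords in BLOCKED_TOPICS.values()
        for keyword in keywords
    )


def safety_guardrail(response: str) -> str:
    """Mask any banned word in a generated response."""
    masked = []
    for word in response.split():
        # Ignore trailing punctuation when comparing against the banned list.
        bare = word.lower().strip(".,!?")
        masked.append("***" if bare in BANNED_WORDS else word)
    return " ".join(masked)


def security_guardrail(url: str) -> bool:
    """Return True only if the URL points at an allowlisted host."""
    return urlparse(url).hostname in ALLOWED_HOSTS
```

In this sketch, a customer service assistant would call `topical_guardrail` on each incoming question, run `safety_guardrail` over each draft reply, and check `security_guardrail` before connecting to any third-party service.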

NeMo Guardrails works with the tools that enterprise app developers commonly use. For example, it can run on top of LangChain, an open-source toolkit that developers are rapidly adopting to connect third-party applications to LLMs. NeMo Guardrails is also designed to work with a wide range of LLM-enabled applications, such as Zapier.

The project is being incorporated into the NVIDIA NeMo framework, which already has open-source code on GitHub.