In recent years, the ubiquity and high speed of our current internet connections have led to important breakthroughs in the software industry. A relevant example would be the DevOps movement, which blurred the lines between developers and operations.

A similar phenomenon is happening right now with the quality assurance field. Words and phrases like “testing,” “quality,” “validation,” and many more, have become loaded terms. They might mean wildly different things to different people. The consequence of such a scenario is that it becomes harder for people to agree on pretty much anything concerning QA. What purpose does it serve? Where does it fit in the software development process? Who should be responsible for it?


This article will argue two points. The first is the one you’ve just seen: the software industry lacks consensus on QA and who owns it. The second is somewhat more controversial. I’ll argue that this lack of consensus isn’t something you should worry about. On the contrary: it’s a good sign. Keep reading to learn more.

Waterfall’s downfall and the rise of DevOps

The software industry has changed dramatically in the last couple of decades. About 20 years ago, it was dominated by the waterfall methodology. As you’re probably aware, this methodology takes a linear approach to project management. Before the project starts, professionals from the development company gather stakeholder requirements. These requirements result in a project plan. Such a plan would usually describe each phase of the project in great detail, including the person, team or department that owned it.

Since the late 90s and early 2000s, we’ve experienced some important technological breakthroughs, e.g. ubiquitous broadband internet connections, virtualization, cloud computing, and a general increase in available computational power. These developments have changed, and are still changing, the software development landscape. The transformations are complex and have far-reaching consequences. But if we had to summarize them in a single phrase, it would be “shorter feedback cycles.”

The increase in computational power, along with other improvements, reduced compile times. They dropped first to minutes and finally to seconds. Smart people soon figured out that software organizations should use this newly gained speed to their advantage. Developers on a team should integrate their work into the mainline daily, and the application should be built as often as possible, avoiding the dreaded integration problems that used to be common at the time. This became known as continuous integration.

Another crucial technical development has to do with the internet. Yes, the internet has existed in one form or another since the 60s, but it was only in the 90s that it reached mass adoption, mainly thanks to the invention of the World Wide Web and the opening of the internet to commercial use in 1995. The availability of high-speed internet connections, along with other technological advancements, such as improvements in virtualization, finally gave birth to the last piece of the puzzle: the cloud. The advent of powerful, cheap and ubiquitous cloud computing was the missing link between developers and operations.

QA: The current state of affairs

Up to this point, this post has been no more than a history lesson. All we did was give a brief overview of the software development practices of the past and how technological advancements have transformed them into what we have today. We’ve seen how changes in the landscape have caused traditional roles in software development to evolve. The barriers between certain roles became thinner. This is true for DevOps, but also for QA.

We’re now going to argue the main thesis of this post, namely that IT leadership doesn’t seem to agree on either who’s currently responsible for QA or who should be. And this claim isn’t mere speculation: we have the data to back it up.

Testim recently conducted an informal study with IT leadership at several organizations of varied sizes. Among other questions, the study asked who is currently responsible for QA and who should be responsible for it. We’ll now discuss the results, starting with the first question.

Who currently owns QA in most software organizations

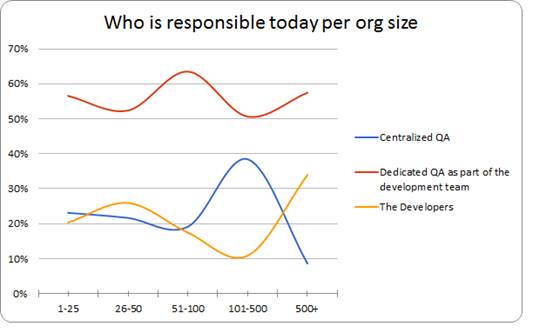

Who is currently responsible for QA in most software organizations? The chart above shows the answers, grouped by company size.

The chart above makes some things immediately clear. First, we see that dedicated QA professionals as part of the development team are the clear winner—and by a long shot—regardless of company size.

When it comes to the remaining answers (“centralized QA” and “developers”), the situation isn’t so clear-cut. Centralized QA leads by a small margin in the 1 to 25 range. The situation quickly reverses, though: in organizations with 26 to 50 people, developers own QA in about 25% of the cases, while centralized QA falls close to 20%.

The scenario reverses again. By the 101 to 500 range, centralized QA is beating “developers” by a wide margin: almost 40% to virtually 10%. When we get to the last range, the scenario is, again, the opposite. Now “developers” are close to 25%, while centralized QA is below the 10% mark.

Who should own QA according to IT leadership

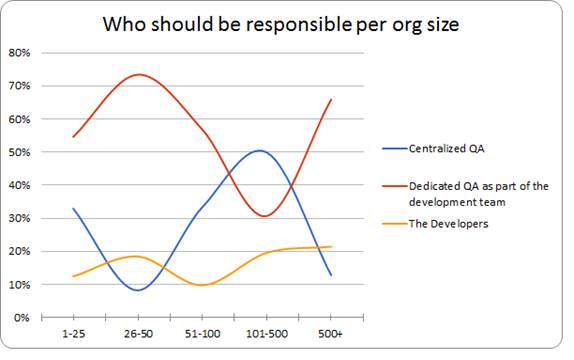

Now let’s see the answer to the second and most important question. According to the IT leadership of the organizations surveyed, who, in an ideal situation, should be responsible for QA? The chart above shows their answers.

As you can see, the situation here seems far more erratic than in the previous chart. In the 1 to 25 range, “dedicated QA as part of the development team” is at the top, at about 55%. The answer rises to more than 70% in the second range (companies that have between 26 and 50 people). From here onward, it’s a nosedive: 57% in the 51 to 100 range, finally reaching the lowest point (a little over 30%) in the 101 to 500 range.

Then the answer rises dramatically again, reaching close to 70% at the 500+ mark.

The software industry evolves fast, and QA is no exception

As we’ve just seen, the data gathered by Testim’s study suggests that, when it comes to who’s currently responsible for QA, the answer in most organizations is clear: dedicated QA professionals as part of the development team. However, when it comes to who should be responsible for QA, the answer isn’t so clear. For most of the chart, the “dedicated QA” option dominates, but it falls sharply in one range before rising again. The “clear loser” is the “developers” option. But even then, that option managed to reach second place at some points.

What should we make of these numbers? First, as we’ve stated from the beginning, the software industry seems to lack consensus on who should own QA, although there seems to be more agreement on who actually owns it today.

As promised, we’ll now argue that such a lack of consensus isn’t a problem. Why do we think that?

We don’t know what QA really is because it’s far from being done

First, we can see how answers varied wildly between different organization sizes. For me, this is a healthy sign. It indicates that companies are aware of their strengths and weaknesses, and of the intricacies of their size and stage of development.

But the second and most important reason simply boils down to rapid evolution. Things in the testing/QA world are changing at breakneck speed, as is the whole software industry. The variety of categories of testing seems to increase each year. The same happens with the tools targeting those types of testing. Then, people start writing articles and books about all those types of testing and their tooling. Courses are published, talks are given, and all of a sudden there is a plethora of new terms to learn, and new meanings for existing terms.

Even simple words like “test” have become loaded terms. For many developers, the word is synonymous with “unit testing.” For testers on a dedicated QA team, it could mean “manual, scripted test.” For front-end developers, it might mean automated UI testing, and the list goes on.

One might argue that such a scenario is a confusing state of affairs or a “mess.” I’d beg to differ. For me, the current scenario shows that QA is a living field, in frenetic and constant evolution. As technologies like machine learning evolve and we move toward higher degrees of automation, in QA and elsewhere, the dust will tend to settle. The QA field will eventually reach a mature state (likely heavily aided by AI). While we’re not there yet, let’s be glad that we get to live in a time of rapid evolution and growth for the field.