Amazon hosted its annual AWS Summit in New York City today, where it announced several updates to its generative AI offerings.

Here are the highlights from today’s event:

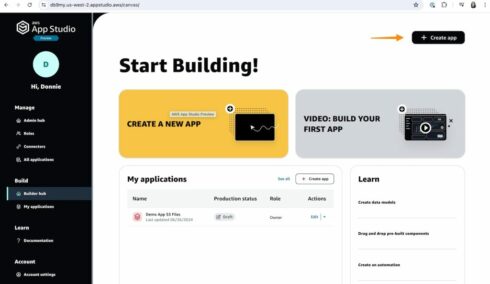

AWS App Studio now in preview

AWS App Studio is a no-code platform for building applications using generative AI, without requiring any software development knowledge. For instance, the prompt “Build an application to review and process invoices” generates an application that does exactly that, including the necessary data models, business logic, and multipage UI.

“The generative AI capability built into App Studio generated an app for me in minutes, compared to the hours or even days it would have taken me to get to the same point using other tools,” Donnie Prakoso, principal developer advocate at AWS, wrote in a blog post.

Amazon Q Apps enables users to build generative AI apps

First announced in preview in April of this year, Amazon Q Apps is now generally available. It allows users to create generative AI apps based on their company’s own data.

Since the preview release, Amazon has added the ability to specify data sources at the individual card level and has released an Amazon Q Apps API.

Amazon Q Developer is now available in SageMaker Studio

Amazon Q Developer is the company’s AI coding assistant, while SageMaker Studio is a platform that includes a variety of tools for developing, deploying, and managing machine learning (ML) models.

With this new integration, Amazon Q Developer can now create plans for the ML development life cycle, recommending the best tools for a task, offering step-by-step guidance, generating code to get started, and providing troubleshooting assistance.

“With Amazon Q Developer in SageMaker Studio, you can build, train and deploy ML models without having to leave SageMaker Studio to search for sample notebooks, code snippets and instructions on documentation pages and online forums,” Esra Kayabali, senior solutions architect for AWS, wrote in a blog post.

Amazon Q Developer customization now available

With customization, Amazon Q Developer can now draw on an organization’s internal libraries, APIs, packages, classes, and methods when generating code recommendations.

Users will also now be able to ask Amazon Q questions about their organization’s codebase, the company explained.

Additional data sources can be connected to Knowledge Bases for Amazon Bedrock

Knowledge Bases for Amazon Bedrock allows private company data to be used in retrieval-augmented generation (RAG) applications.

Companies can now connect web domains, Confluence, Salesforce, and SharePoint as data sources, although this functionality is still in preview.

Agents for Amazon Bedrock updates

Agents for Amazon Bedrock allows generative AI applications to run multistep tasks across different systems and data sources.

The tool now retains a summary of conversations with different users, which allows it to provide a more seamless and adaptive experience for user-facing multi-step tasks, such as booking flights or processing insurance claims.

It can now also interpret code, allowing it to tackle advanced use cases such as data analysis, data visualization, text processing, equation solving, and optimization problems.

Vector search for Amazon MemoryDB now available

This new capability will enable companies to store, index, retrieve, and search vectors. Customers can use it to implement generative AI use cases, such as RAG, fraud detection, document retrieval, and real-time recommendation engines.

“With this launch, Amazon MemoryDB delivers the fastest vector search performance at the highest recall rates among popular vector databases on Amazon Web Services (AWS). You no longer have to make trade-offs around throughput, recall, and latency, which are traditionally in tension with one another,” Channy Yun, principal developer advocate for AWS, wrote in a blog post.

Guardrails for Amazon Bedrock now detects hallucinations

This offering helps companies set up safeguards for their AI applications based on their own responsible AI policies.

With this update, Guardrails uses contextual grounding checks to detect hallucinations by evaluating a model’s response against a reference source and the user’s query. Amazon also released an ApplyGuardrail API that evaluates input prompts and model responses, including those from third-party foundation models (FMs).
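For readers curious what a contextual grounding check looks like from code, here is a minimal Python sketch that calls ApplyGuardrail through a boto3 `bedrock-runtime` client. It assumes you have already created a guardrail with contextual grounding enabled; the guardrail ID and version are placeholders, and the helper name `check_grounding` is hypothetical. The `qualifiers` values mark which text is the reference source, which is the user’s query, and which is the model output being evaluated. Treat it as an illustration and confirm parameter names against the current boto3 documentation.

```python
from typing import Any


def check_grounding(client: Any, guardrail_id: str, guardrail_version: str,
                    source_text: str, user_query: str, model_answer: str) -> bool:
    """Return True if the guardrail intervened on the model answer.

    `client` is assumed to be a boto3 "bedrock-runtime" client (or any object
    exposing a compatible `apply_guardrail` method). `guardrail_id` and
    `guardrail_version` are placeholders for an existing guardrail that has
    a contextual grounding check configured.
    """
    response = client.apply_guardrail(
        guardrailIdentifier=guardrail_id,
        guardrailVersion=guardrail_version,
        source="OUTPUT",  # evaluate a model response rather than a user prompt
        content=[
            # Reference material the answer is supposed to be grounded in
            {"text": {"text": source_text, "qualifiers": ["grounding_source"]}},
            # The question the user asked
            {"text": {"text": user_query, "qualifiers": ["query"]}},
            # The model output being checked for hallucinations
            {"text": {"text": model_answer, "qualifiers": ["guard_content"]}},
        ],
    )
    # "GUARDRAIL_INTERVENED" means a policy (e.g., grounding) was triggered;
    # "NONE" means the content passed.
    return response["action"] == "GUARDRAIL_INTERVENED"
```

In practice you would pass `boto3.client("bedrock-runtime")` as `client`. Accepting any object with an `apply_guardrail` method keeps the helper easy to exercise without AWS credentials.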
