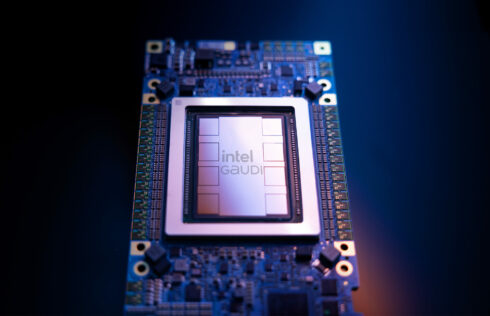

At Intel Vision 2024, Intel had a lot to say about AI and what it's been working on in that area. The company announced a new AI accelerator called Gaudi 3, plans to collaborate with industry partners on an open platform for enterprise AI, and its next-generation Xeon processors.

Gaudi 3 uses Ethernet to connect tens of thousands of accelerators, which the company believes will enable a “significant leap in AI training and inference for global enterprises looking to deploy GenAI at scale.”

Each accelerator can run 64,000 operations in parallel, which supports the computational complexity of deep learning algorithms. It has 128 GB of memory, 3.7 TB/s of memory bandwidth, and 96 MB of on-board static RAM (SRAM). According to Intel, these memory specifications allow it to efficiently serve large language models (LLMs) and multimodal models.

Gaudi's software stack integrates with PyTorch and provides optimized models from Hugging Face, which the company says makes it easy to port models across different hardware types.

Gaudi 3 will also be available as a Peripheral Component Interconnect Express (PCIe) add-in card, which Intel says suits workloads such as fine-tuning, inference, and retrieval-augmented generation (RAG).

Compared with its competitor, the Nvidia H100, Intel expects Gaudi 3 to train Llama 2 models with 7B and 13B parameters and GPT-3 with 175B parameters 50% faster on average. Intel also expects it to deliver 50% higher inference throughput and 40% better inference power efficiency than the H100.

Intel anticipates making Gaudi 3 available to manufacturers, including Dell Technologies, HPE, Lenovo, and Supermicro, in the second quarter of this year.

“In the ever-evolving landscape of the AI market, a significant gap persists in the current offerings,” said Justin Hotard, executive vice president and general manager of the Data Center and AI Group at Intel. “Feedback from our customers and the broader market underscores a desire for increased choice. Enterprises weigh considerations such as availability, scalability, performance, cost, and energy efficiency. Intel Gaudi 3 stands out as the GenAI alternative presenting a compelling combination of price performance, system scalability, and time-to-value advantage.”

Alongside Gaudi 3, the company announced that it is collaborating with a number of companies to create an open platform for enterprise AI.

To support this effort, Intel will release reference implementations of GenAI pipelines on Intel Xeon- and Gaudi-based systems, publish a technical conceptual framework, and add infrastructure capacity in the Intel Tiber Developer Cloud.

The other companies working on this project include Anyscale, Articul8, DataStax, Domino, Hugging Face, KX Systems, MariaDB, MinIO, Qdrant, Red Hat, Redis, SAP, VMware, Yellowbrick, and Zilliz.

Finally, the company announced the next generation of its Intel Xeon processors. The new Intel Xeon 6 processors come in two variants: one with Efficient-cores (E-cores) and one with Performance-cores (P-cores). The E-core processors offer a 4x performance improvement and 2.7x better rack density than 2nd generation Intel Xeon processors. The P-core processors add support for the MXFP4 data format, reducing token latency by 6.5x compared with 4th generation Intel Xeon processors.

According to Intel, the Xeon 6 processors with E-cores will launch this quarter, and those with P-cores will follow later.

The company also teased that the next generation of Intel Core Ultra processors will launch later this year with more than 100 platform tera operations per second (TOPS) and more than 45 TOPS from the neural processing unit (NPU).

“Innovation is advancing at an unprecedented pace, all enabled by silicon – and every company is quickly becoming an AI company,” said Pat Gelsinger, CEO of Intel. “Intel is bringing AI everywhere across the enterprise, from the PC to the data center to the edge. Our latest Gaudi, Xeon and Core Ultra platforms are delivering a cohesive set of flexible solutions tailored to meet the changing needs of our customers and partners and capitalize on the immense opportunities ahead.”