JetBrains has announced an update to its AI Assistant that lets it use local models. This makes the tool usable by developers who work under strict data privacy and compliance requirements.

To use this feature, developers need to enable LM Studio under “Third-party AI providers” in the AI Assistant settings. LM Studio provides an interface for managing and running AI models on a local machine.

The release also adds access to newer models from Anthropic and OpenAI. These include Anthropic’s Claude 3.5 Sonnet and Claude 3.5 Haiku, and OpenAI’s o1, o1-mini, and o3-mini.

According to JetBrains, the o3-mini and o1-mini models are ideal for users who need faster, more cost-efficient reasoning capabilities. “These compact OpenAI models offer faster processing than o1 and are tailored for coding, scientific, and mathematical tasks,” JetBrains wrote in a blog post.

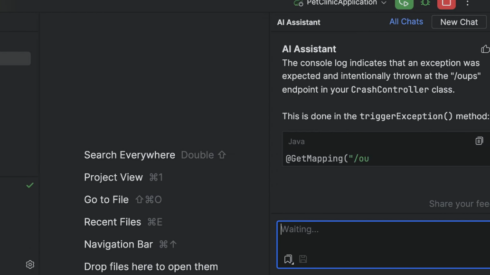

JetBrains’ AI Assistant is integrated into JetBrains IDEs. It can autocomplete lines, functions, or blocks of code; answer questions about the code; generate documentation; and refactor code.

“Besides yourself, who knows your project best? Your IDE! That’s why AI Assistant, seamlessly integrated into your development workflow, understands your code and its context,” JetBrains says.