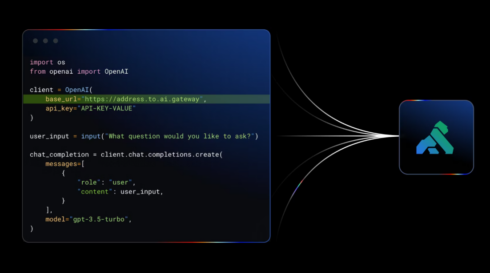

Kong has introduced the latest update to Kong AI Gateway, a solution for securing, governing, and controlling LLM consumption from popular third-party providers.

Kong AI Gateway 3.11 introduces a new plugin that reduces token costs, several new generative AI capabilities, and support for AWS Bedrock Guardrails.

The new prompt compression plugin removes padding and redundant words or phrases. This approach preserves 80% of the intended semantic meaning of the prompt, while the removal of unnecessary words can reduce costs by up to 5x.
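To make the idea concrete, here is a toy sketch of prompt compression and its effect on cost. It is not Kong's algorithm or plugin API: the filler list, the function names (`compress_prompt`, `count_tokens`, `input_cost`), the whitespace-based token count, and the price are all hypothetical, chosen only to illustrate that under per-token pricing, fewer input tokens mean a proportionally lower bill.

```python
import re

# Hypothetical filler phrases for illustration only; a production
# compressor would likely rely on a learned model, not a fixed list.
FILLERS = ["it should be noted that", "please", "kindly", "basically"]


def compress_prompt(prompt: str) -> str:
    """Toy compression: drop filler phrases and collapse whitespace padding."""
    text = prompt
    for phrase in FILLERS:
        text = re.sub(rf"\b{re.escape(phrase)}\b", " ", text, flags=re.IGNORECASE)
    return re.sub(r"\s+", " ", text).strip()


def count_tokens(text: str) -> int:
    """Crude estimate: whitespace-separated words (real tokenizers differ)."""
    return len(text.split())


def input_cost(tokens: int, price_per_1k_tokens: float) -> float:
    """Input cost under simple per-token pricing (price is an assumption)."""
    return tokens / 1000 * price_per_1k_tokens


if __name__ == "__main__":
    original = "Please   kindly summarize   the report, basically in   three bullets."
    compressed = compress_prompt(original)
    print(compressed)
    print(count_tokens(original), "->", count_tokens(compressed), "tokens")
```

Because cost scales linearly with token count in this model, a prompt that shrinks from 5,000 to 1,000 input tokens would cost one fifth as much to send, which is the shape of saving the 5x figure describes.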

According to Kong, the prompt compression plugin complements other cost-saving measures, such as Semantic Caching to prevent redundant LLM calls and AI Rate Limiting to manage usage limits by application or team.

This update also adds over 10 new generative AI capabilities, including batch execution of multiple LLM calls, audio transcription and translation, image generation, stateful assistants, and enhanced response introspection.

Finally, Kong AI Gateway 3.11 adds support for AWS Bedrock Guardrails, which can help protect AI applications from malicious or unintended outcomes, such as hallucinations or inappropriate content. Developers can monitor applications and adjust policies in real time without changing code.

“We’re excited to introduce one of our most significant Kong AI Gateway releases to date. With features like prompt compression, multimodal support and guardrails, version 3.11 gives teams the tools they need to build more capable AI systems—faster and with far less operational overhead. It’s a major step forward for any organization looking to scale AI reliably while keeping infrastructure costs under control,” said Marco Palladino, CTO and co-founder of Kong.