The open-source machine learning library developed by Facebook continues to evolve. Facebook announced at its PyTorch developer conference PyTorch 1.3 is the latest release of the library, following significant growth in the past year.

“PyTorch continues to gain momentum because of its focus on meeting the needs of researchers, its streamlined workflow for production use, and most of all because of the enthusiastic support it has received from the AI community. PyTorch citations in papers on ArXiv grew 194 percent in the first half of 2019 alone, as noted by O’Reilly, and the number of contributors to the platform has grown more than 50 percent over the last year, to nearly 1,200. Facebook, Microsoft, Uber, and other organizations across industries are increasingly using it as the foundation for their most important machine learning (ML) research and production workloads,” Facebook wrote in a post.

PyTorch 1.3 introduces experimental support for seamless model deployment to mobile devices, model quantization, and front-end improvements, such as the ability to name tensors, Facebook explained.

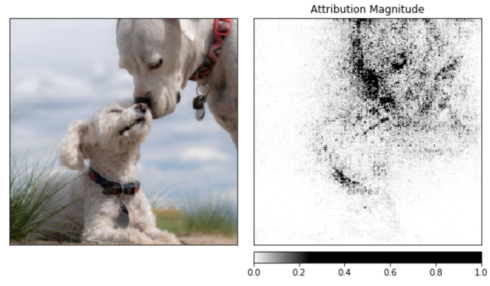

It also added a number of additional tools and libraries in order to “support model interpretability and bringing multimodal research to production.” Such tools include Captum, which offers information on how a model generates a certain output; and CrypTen, which is a community-based research platform to advance the field of privacy-preserving machine learning.

In addition to these new features, Facebook announced a collaboration with Google and Salesforce. Together, they will add broad support for Cloud Tensor Processing Units. This provides an accelerated way of training large-scale deep neural networks.

Facebook also announced that Alibaba Cloud is now a supported cloud platform, joining AWS, Microsoft Azure, and Google Cloud.