People are constantly surrounded by sound, and as mobile devices and virtual reality headsets grow more popular, audio is becoming more immersive. Today, the Cardboard SDKs for Android and Unity support spatial audio so developers can create immersive audio experiences in virtual reality apps, according to Nathan Martz, product manager for Google Cardboard.

Users will just need their smartphone, a regular pair of headphones, and a Google Cardboard viewer.

Typically, apps create simple versions of spatial audio by routing sounds to separate left and right speakers. With the updates to the SDK, an app can now produce sound the way humans hear it, said Martz.

For example, the SDK combines the physiology of a listener’s head with the positions of virtual sound sources to determine what the listener hears. Sounds that come from the right will reach the left ear with a slight delay and with fewer high-frequency elements, which are normally dampened by the skull, according to Martz.

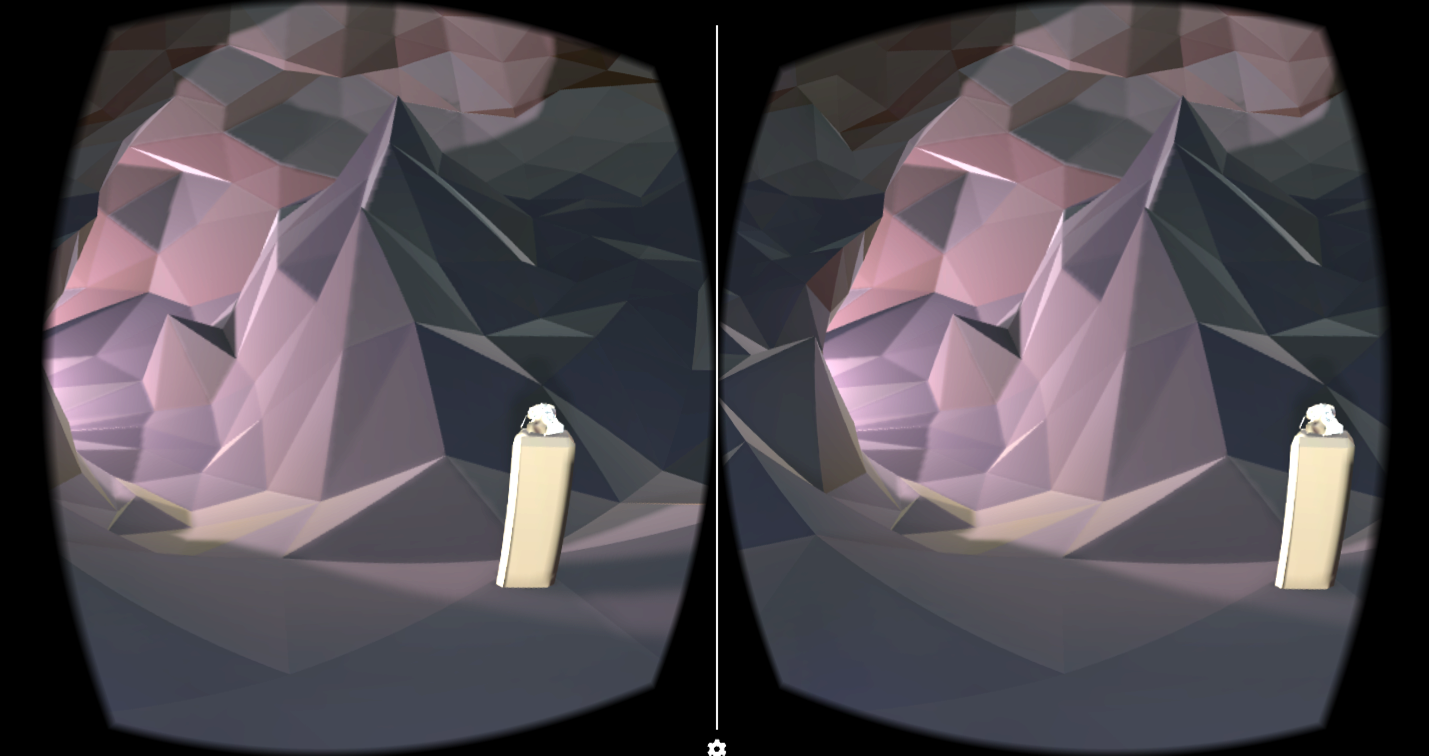
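To give a sense of how small that delay is, here is a minimal Python sketch based on Woodworth's textbook approximation for interaural time difference. It is not the Cardboard SDK's implementation, which the announcement does not describe. The head radius, speed of sound, sample rate, and function names are all assumptions chosen for illustration.

```python
import math

# Assumed constants for illustration only; not values taken from the SDK.
SPEED_OF_SOUND_M_S = 343.0  # dry air at roughly 20 degrees Celsius
HEAD_RADIUS_M = 0.0875      # commonly cited average adult head radius


def interaural_time_difference(azimuth_deg: float) -> float:
    """Extra travel time (seconds) to the far ear, per Woodworth's formula.

    azimuth_deg: 0 means straight ahead, 90 means directly to one side.
    The approximation models the head as a rigid sphere and is only
    defined here for 0 to 90 degrees.
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth_deg must be between 0 and 90")
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))


def delay_in_samples(azimuth_deg: float, sample_rate_hz: int = 48000) -> int:
    """Round the delay to a whole number of audio samples."""
    return round(interaural_time_difference(azimuth_deg) * sample_rate_hz)


if __name__ == "__main__":
    for azimuth in (0, 30, 60, 90):
        itd_ms = interaural_time_difference(azimuth) * 1000.0
        print(f"{azimuth:>2} deg: {itd_ms:.3f} ms, "
              f"{delay_in_samples(azimuth)} samples at 48 kHz")
```

Even for a source directly to one side, the delay stays well under a millisecond. The high-frequency damping Martz mentions is a separate effect that this sketch does not model.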

He added that the SDK will also let developers specify the size and material of a virtual environment, both of which contribute to the quality of a given sound. A sound played in a tight space would be much different than the same sound played in a big, open field, even though both are virtual environments.
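As a rough illustration of why size and material matter, the following Python sketch uses Sabine's classic reverberation formula to estimate how long sound lingers in two box-shaped rooms. It is an assumption-laden stand-in, not the SDK's room model, which the announcement does not detail. The room dimensions, absorption coefficients, and function name are hypothetical.

```python
def sabine_rt60(length_m: float, width_m: float, height_m: float,
                absorption: float) -> float:
    """Estimate reverberation time (seconds) with Sabine's formula.

    RT60 = 0.161 * V / (S * a), where V is the room volume in cubic
    meters, S is the total surface area in square meters, and a is the
    average absorption coefficient of the surfaces (0 < a <= 1).
    """
    if not 0.0 < absorption <= 1.0:
        raise ValueError("absorption must be in the range (0, 1]")
    volume = length_m * width_m * height_m
    surface = 2.0 * (length_m * width_m
                     + length_m * height_m
                     + width_m * height_m)
    return 0.161 * volume / (surface * absorption)


if __name__ == "__main__":
    # Illustrative values only: a small, soft-furnished room versus a
    # large hall with hard, reflective walls.
    small_soft_room = sabine_rt60(3.0, 3.0, 2.5, absorption=0.30)
    large_hard_hall = sabine_rt60(30.0, 20.0, 10.0, absorption=0.05)
    print(f"small soft room: {small_soft_room:.2f} s")
    print(f"large hard hall: {large_hard_hall:.2f} s")
```

A larger volume and harder surfaces both lengthen the reverberation tail, which is the kind of difference a listener hears between environments.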

According to Martz, Cardboard’s developers created today’s updates with performance in mind, so adding spatial audio to an app should have minimal impact on the primary CPU.

To help developers get started with spatial audio, Unity developers will have a comprehensive set of components for creating soundscapes on Android, iOS, OS X and Windows. Native Android developers will now have a Java API for simulating virtual sounds and environments.