On the day of the Software Testing World Cup Finals, to say my team and I were nervous would be an understatement. We knew we had worked our hardest to prepare, but none of us knew exactly what lay ahead for us to test, and that left us with an air of uncertainty. The stakes were high on the biggest global stage for software testing, and the pressure was rapidly mounting.

We started the day by getting physically set up for the competition, held in the largest conference room at the Dorint Hotel in Potsdam, Germany. Each of the seven teams had its own workstation, although each one consisted of only a couple of tables and some power supplies for devices. We were also given large paper flip boards for sketching ideas and working through problems in the heat of battle. Our laptops were charged and primed to run at peak speed and performance, and our phones were juiced and lying at the ready. Once our workstation was prepped, we headed off to a quick interview with an STWC judge, who quizzed us on how we had prepared for the competition and how we planned to test the still-unknown application. We tried not to give away too many secrets.

After the interview, we retreated to our workstation. As in the prelims, each team member was assigned an area to focus on. Whereas I had handled security in the previous round, this time I concentrated on application performance and exploratory testing. The rest of the team was poised to cover everything from security to third-party application integration to static code analysis. When you're testing as part of a team in a high-pressure environment like that, the key is to remain flexible.

Team “RT Pest Control” (clockwise from left): Samantha Yacobucci, Zala Habibi, Nick Bitzer and Alex Abbott

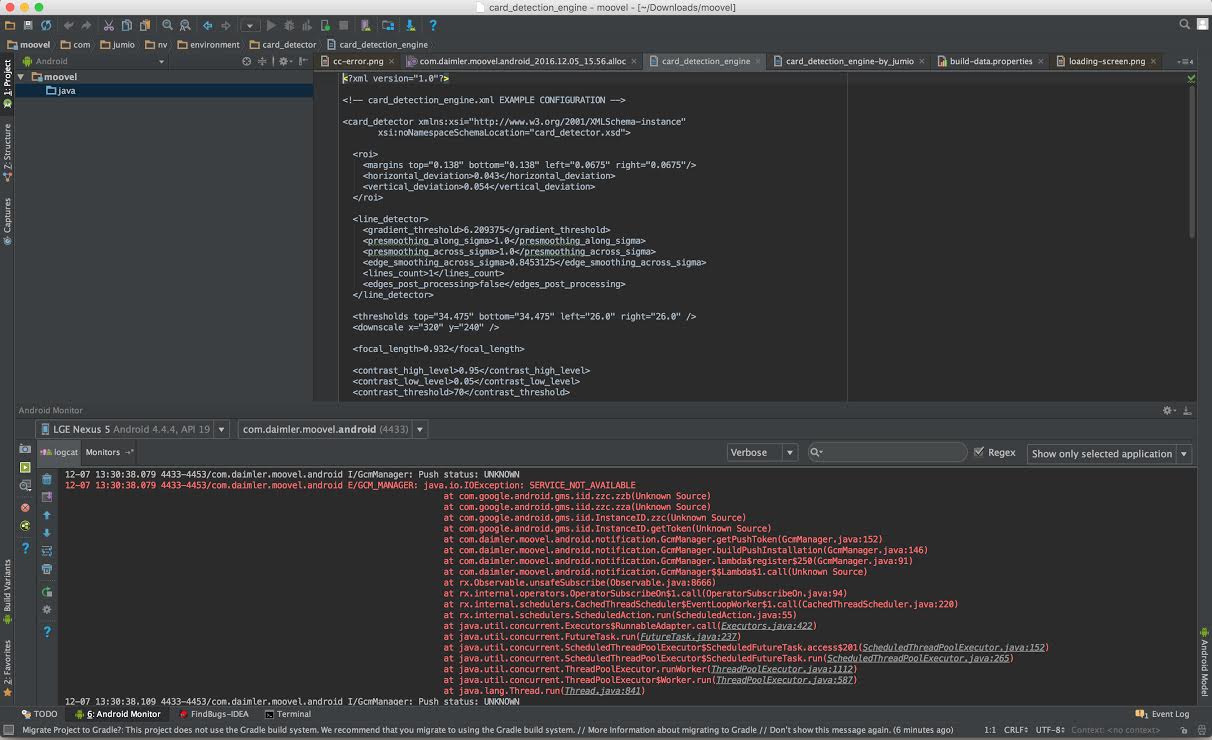

To make sure we could hit the ground running when the timer started, we had every testing tool we needed open and running. These included Android Studio for app debugging and Slack, so that we could share important facts or ideas without other teams overhearing us. (The workstations in the room were only about 10 feet apart and had no sound or vision barriers, adding another wrinkle to the challenge.)

I also spent some time double-checking that all the developer options I needed were enabled on both of the Android devices I was set to use: an LG Nexus 5 running Android 4.4.4 (KitKat) and a Dell Venue 8 tablet running Android 5.0 (Lollipop). Other members of the team deliberately used different brands and types of devices, along with various versions of Android, so that we had comprehensive OS and hardware coverage when it came time to test the application. That turned out to be a smart move.

The game begins

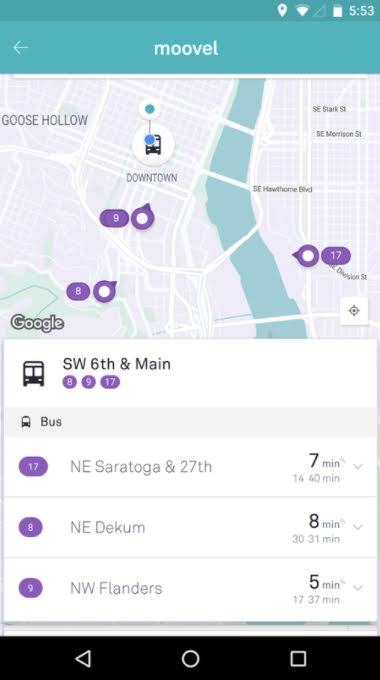

No more than 30 minutes before the competition started, we received an e-mail informing us that we'd be testing an Android app called Moovel, a transit application that lets consumers book public transit or ridesharing options on the go. The e-mail included a note from the Moovel product owner on which aspects of the app concerned them most, the hardware and software requirements, what was in and out of scope for testing, and a link to a debug .apk file.

Once we had the application, we were able to divvy up specific testing objectives and roughly estimate our testing effort. I'll admit it was tough to lay out an official test plan. When you don't know what kind of application you're going to be testing, and you've got such a limited timeframe, exploratory testing tends to be the best way to find bugs.

When I saw the link to the debug .apk, a wave of relief washed over me. With a debug build of the app, I knew I could do a lot more with performance metrics in Android Studio and gather CPU, memory and network statistics more reliably.

It was finally our moment of truth: time to start testing. We had two hours and 40 minutes to test the app, write up bugs and put together a final report, down from an already compressed three hours in the prelims. That made prioritizing what to test even more of a challenge. Even before we knew what the app was, knowing only that it ran on Android, the team had settled on a few general-purpose tools for static analysis of the .apk file, along with others for automated testing.

I grabbed the .apk file, tossed it into Firebase (a platform developed by Google to help build quality applications), and used Firebase Test Lab to run some automated tests against the application, specifically the built-in automated "robo" tests that come with Firebase. I honestly wasn't expecting much from the Firebase run, but since it took very little time and effort on my end, I figured it couldn't hurt. In the end, I wasn't surprised that it didn't turn up any bugs.

Another tool my team used in hopes of finding bugs through automation was the open-source MobSF (Mobile Security Framework), which we mainly turned to for uncovering security flaws in the application code. Unlike Firebase, MobSF actually turned up some results. The best of them was an SQL injection vulnerability, the bug that would ultimately win us the "Holy Cow Bug Award," a distinction for finding the most difficult bug.
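For readers who haven't run into this class of bug, here is a minimal, generic sketch of what an SQL injection looks like. It is not the Moovel finding, whose details aren't covered here. Android apps commonly keep local data in SQLite, so Python's built-in `sqlite3` module stands in for that; the `bookings` table, its columns and the `find_unsafe`/`find_safe` helpers are all hypothetical. The sketch contrasts a query built by string concatenation, which a crafted input can rewrite, with a parameterized query that binds the input as plain data.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    # Hypothetical in-memory table standing in for an app's local booking data.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE bookings (id INTEGER PRIMARY KEY, rider TEXT, dest TEXT)")
    conn.executemany(
        "INSERT INTO bookings (rider, dest) VALUES (?, ?)",
        [("alice", "Potsdam"), ("bob", "Berlin")],
    )
    return conn


def find_unsafe(conn: sqlite3.Connection, rider: str) -> list:
    # Vulnerable: the input is pasted straight into the SQL text,
    # so quotes inside it can change the meaning of the query.
    query = "SELECT dest FROM bookings WHERE rider = '" + rider + "' ORDER BY id"
    return [row[0] for row in conn.execute(query)]


def find_safe(conn: sqlite3.Connection, rider: str) -> list:
    # Safer: the ? placeholder makes the driver treat the input as a value, never as SQL.
    query = "SELECT dest FROM bookings WHERE rider = ? ORDER BY id"
    return [row[0] for row in conn.execute(query, (rider,))]


if __name__ == "__main__":
    conn = make_db()
    payload = "x' OR '1'='1"
    print(find_unsafe(conn, payload))  # ['Potsdam', 'Berlin'] (every row leaks)
    print(find_safe(conn, payload))    # [] (no rider has that literal name)
```

The injected `OR '1'='1'` turns the unsafe query's filter into a condition that is always true, which is why every row comes back; the parameterized version simply looks for a rider with that odd literal name and finds none.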

We also tried the UI/Application Exerciser Monkey, a command-line tool in the Android SDK that sends pseudo-random streams of user events to an app, in hopes of shaking out more bugs. While preparing for the World Cup, we had used Monkey with great success on other dummy apps, but unfortunately (or perhaps fortunately for the product owners) we had no such luck with the Moovel app.

After the automated steps finished running, it was "all hands on deck" for exploratory testing. The application had some well-defined functions, so once we had it in our hands, it was easy for the team to decide who would test what. For the next couple of hours, we peeked into every corner of the application trying to find as many bugs as possible (thankfully, we didn't have to fix any of the bugs we found).

We ran into a couple of major obstacles right away. Only two team members had local cell phone service on their Android phones, and part of the testing required us to leave the building and go out to taxis to make sure we could actually book them (a critical part of the application's functionality). Because of this unexpected snag, only half of the team was able to test every part of the application.

I also ran into a problem of my own: an expired trial license for Firebase. I had been using Firebase for some prep testing, but didn't realize I was running on a trial. As soon as the competition was underway, I tried to use Firebase and was notified that my trial had expired and I now had to pay for the service. I (of course) didn't have my wallet on me, so I had to track it down and pony up for the full Firebase subscription right in the middle of the action. Lesson learned: always check the terms and conditions for your software. They'll come back to haunt you at the worst possible time if you don't.

During the competition, I noticed teams taking different approaches to writing up bugs. Some teams kept a running list of bugs on paper and then wrote them all up in one fell swoop toward the end of the exercise. Other teams, including ours, wrote up each bug as it came up. We made a conscious, collective decision to work this way so that the reproduction steps were fresh in our minds and the logs for a given failure were readily available.

Who won?

After all was said and done, we had racked up 39 bugs. They ranged from suggestions on user experience design to Java threads stuck in endless loops. As a team, we felt very positive about the bugs we submitted, and we felt our test report was comprehensive and laid out a clear overview of everything we thought should be fixed in the Moovel application.

Frankly, I was just relieved it was all over: the weeks of staying up late, poring over practice application code, and the stress and energy it took to be ready to test anything and everything.

Although we didn't take home the title of "Best Software Testers in the World," the entire competition was an unforgettable experience. We met a lot of amazing test engineers in the process, among them the Pan Galactic Gargle Blasters, the team from the Netherlands that won the championship. They were fantastic, and we feel privileged to have competed alongside them. I'll tell you more about some of those dynamic people, our lessons as a team and some retrospective thoughts in my final post next week.