Cloud computing has become perhaps the most overblown and overused buzzword since Service Oriented Architecture (SOA). However, that does not mean it won’t have a huge impact on how we build solutions. Most organizations are trying to find their way with this new, must-have technology, just as they did with SOA a few years back.

Like all tools, Windows Azure has its place, and helping to define that place is what this article is all about. I have been impressed that Microsoft has been flexible over the first few years of Azure’s existence and has responded to feedback and criticism with tools and offerings that round out the platform. Digging into the details of where Azure stands today is the best way to determine whether your solution will benefit from leveraging Microsoft’s cloud offering.

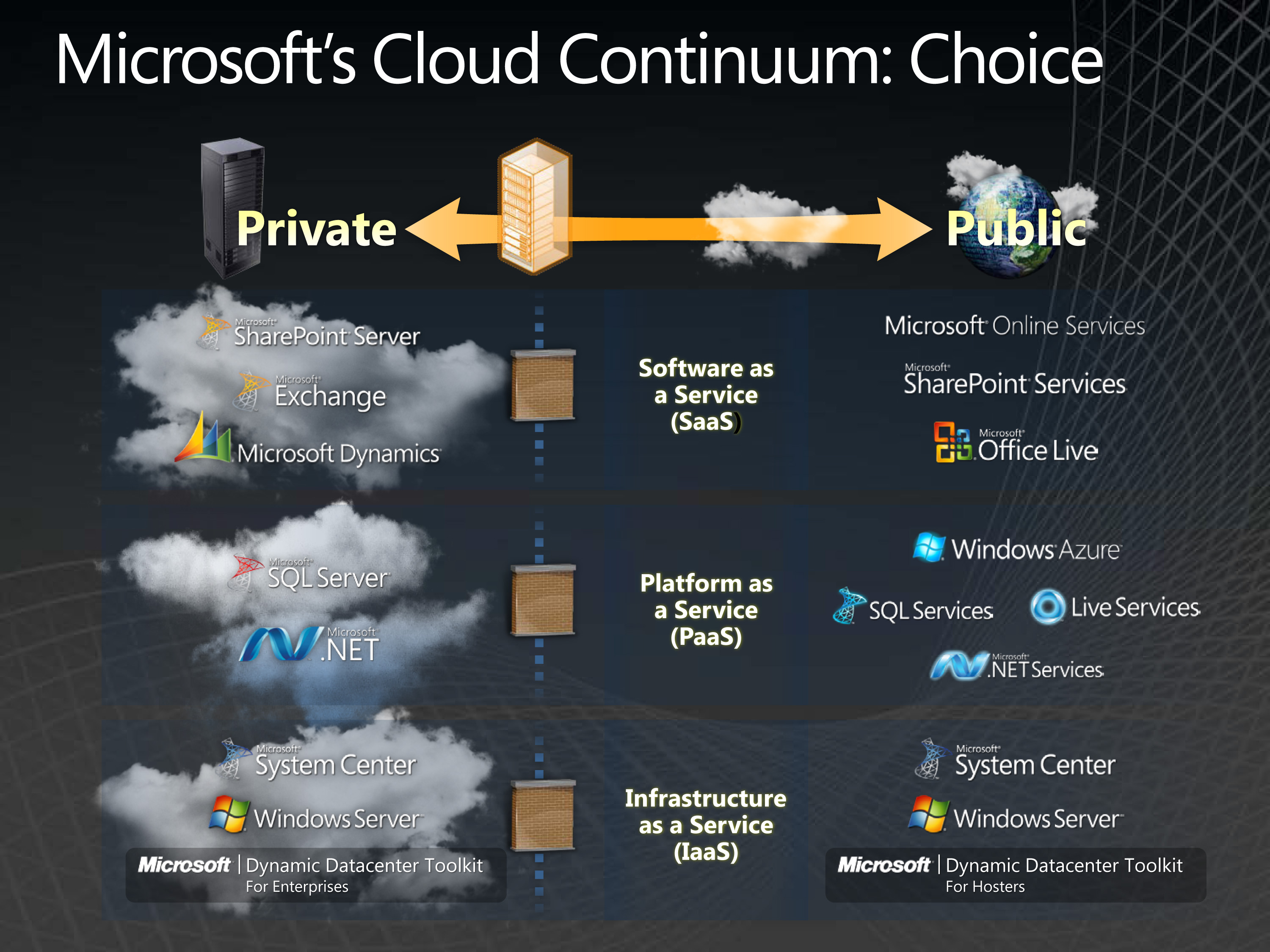

Azure contends in the Platform-as-a-Service space. Most of us have likely heard of Software-as-a-Service. Companies like Salesforce.com have matured that space and proven that it works both as a business model and as a solid way to deliver solutions. One complaint from end users, though, is the lack of configurability, since someone else provides the interfaces. And even with customization capabilities, hosted software can never fully match a solution built to your own specs.

Conversely, Infrastructure-as-a-Service has been with us as a viable choice for even longer, and it is taken as a proven model in most circles. With IaaS, you basically rent a server, whether it is a VM on Amazon’s Elastic Compute Cloud (EC2) system or a hosted server at an Internet service provider. IaaS does not inherently remove the requirement to support the underlying platform with patches and configuration. It also leaves all the disaster recovery requirements in your hands.

PaaS is the next step in the progression. Brian Goldfarb, director for Windows Azure product management, described it this way: “With Windows Azure, Microsoft provides the industry’s most robust platform for developers to create applications optimized for the cloud, using the tools and languages they choose. With support for languages and frameworks that developers already know, including .NET, Java, Node.js and PHP, Windows Azure enables an open and flexible developer experience.”

The idea is to provide everything the developer needs except the code. Microsoft provides the hardware, the operating system, the patches, the connections, the bandwidth, health monitoring with automated server instance recovery, and even the deployment tools. Smaller software companies can do without an IT staff, provided they can navigate the tools, which are tailored for developers. Larger companies gain access to economies of scale that can only be had by provisioning many thousands of servers.

Microsoft is a prolific company when it comes to providing offerings and expanding solutions; over the years, it has not tended to err on the side of limited options for customers. Figure 1 shows a diagram of Microsoft’s cloud offerings, which span SaaS, IaaS and PaaS.

Figure 1

Azure components

Windows Azure is the generic term, and when it was first announced, it was used to describe the full offering. Windows Azure is still used to refer generically to the platform as a whole, but it really is only part of the story.

Windows Azure is the part of the platform that provides compute services along with storage and management. It is the PaaS and the more direct competitor to Amazon’s EC2, as it lets you deploy your code to compute instances of various sizes and in configurable numbers.

But there is also SQL Azure for those who want their database in the cloud. It provides databases, reporting, data synchronization and business analytics, and it can be consumed from Windows Azure compute instances or from applications hosted anywhere.

The third major piece is Windows Azure AppFabric, which provides the Service Bus and Access Control services for orchestrating communication among the components. Each of these areas is worthy of a book unto itself. There are resources online to learn how they work in detail, including hands-on labs that walk you through scenarios with the various components.

I talked to Richard Bagdonas, president and CTO of SubtleData, about his company’s point-of-sale solution that leverages Azure. When asked what aspects of using Azure took some getting used to, he said, “The great thing about Azure is the relative simplicity in deploying solutions to the cloud. My recommendation is to build and deploy often to get new features out quickly. This is not a sprint, but a marathon of sprints.”

“The hardest thing we had to get used to was Azure SQL server. We do not have to worry about backups anymore, [but] we do not have the great WYSIWYG editors that we do in SQL Server. That being said, the SQL Azure Migration Wizard allows us to build locally and move it up to the cloud, minimizing that difficulty.”

This is an oft-heard refrain. It is not hard to use Azure for your development, just different. The things that are hard with .NET development are still hard with Azure.

Developing with Azure

In order to build a .NET-based solution with Windows Azure, a project must be written with either C# or VB.NET using Visual Studio. The exception to this is that there is also an option to create a Worker Role using F#. While you could probably use something else like Notepad, that would be perverse and well beyond the scope of this writing.

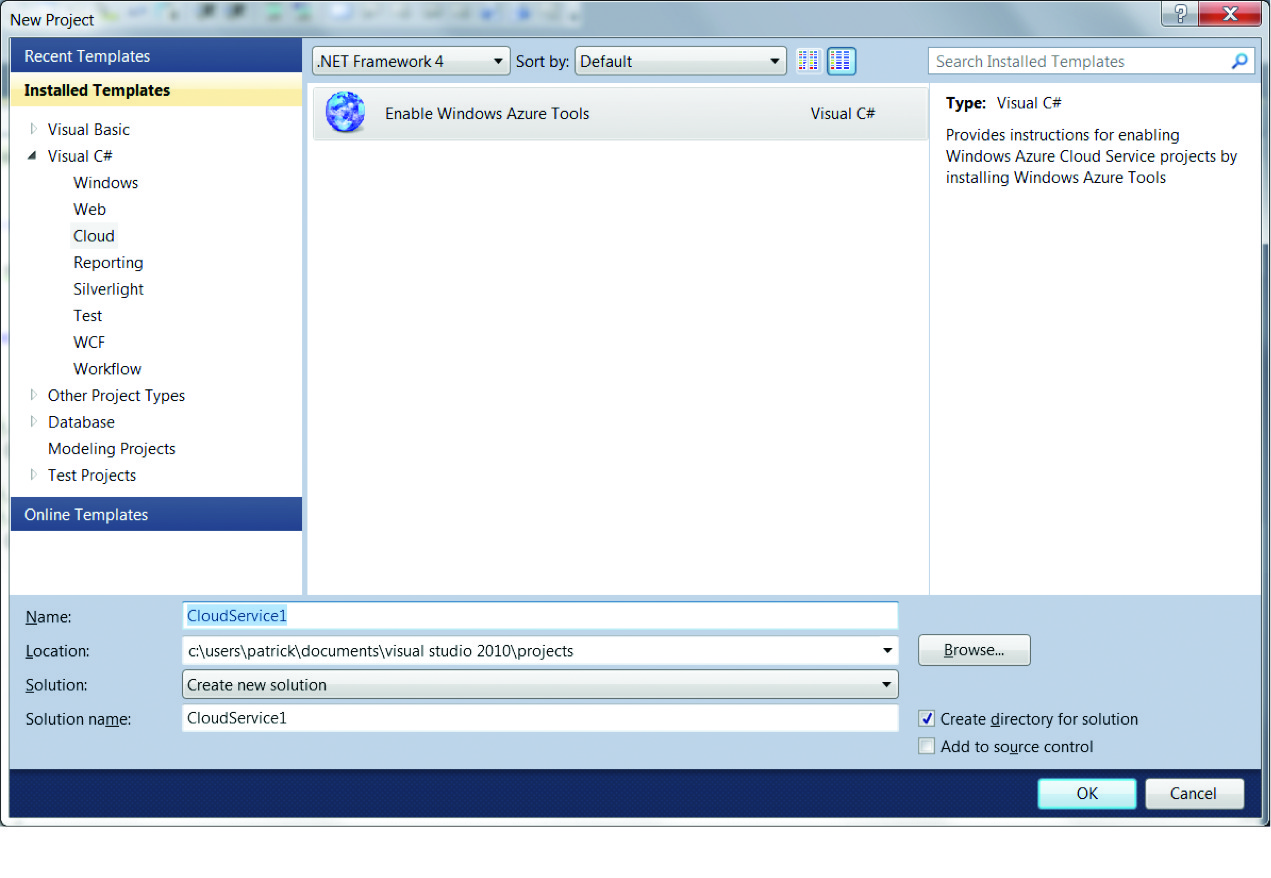

To use Visual Studio 2010 to develop your Azure-based solutions, you must install the Windows Azure Tools for Visual Studio. Last November, Microsoft released version 1.6 of the Windows Azure SDK for .NET. When you create a new project and select the Cloud templates, you are given the option to “Enable Windows Azure Tools” (see Figure 2). This will lead you through the install of the SDK as well. After the toolkit is installed, you can use the .NET Framework 4.0 and Visual Studio 2010 to create a project for a Worker Role and/or one of the various types of Web Roles.

Out of the box, there are ASP.NET, ASP.NET MVC, WCF Service and CGI Web Role templates in Visual Studio 2010. A Worker Role is for processing and getting things done. A Web Role is the user interface or system interface to the application.

To understand the distinction, we can look at an example. Suppose that there is a system that takes user input through a Web-based UI and performs lookups and processing of that data in such a way that it takes 10 to 30 seconds per request. The best way to handle that is to have enough systems running the UI to handle the requests, and then have enough systems doing the processing (the Worker Role systems) to handle the background work.
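
To put rough numbers on “enough systems,” here is a minimal sketch that applies Little’s law (average requests in flight equals arrival rate times time per request). Only the 10-to-30-second processing time comes from the example above; the request rate and the number of jobs one Worker Role instance can process at once are hypothetical inputs, not Azure settings, and `workers_needed` is an illustrative name.

```python
import math


def workers_needed(requests_per_minute: float,
                   seconds_per_request: float,
                   concurrent_per_instance: int) -> int:
    """Estimate Worker Role instances with Little's law.

    Average requests in flight = arrivals per second * seconds per request.
    Dividing by the jobs one instance handles at once and rounding up gives
    the minimum instance count that keeps pace on average.
    """
    arrivals_per_second = requests_per_minute / 60.0
    in_flight = arrivals_per_second * seconds_per_request
    return max(1, math.ceil(in_flight / concurrent_per_instance))


# Hypothetical load: 120 requests per minute, 4 concurrent jobs per instance.
for seconds in (10, 30):  # best and worst case from the example
    print(seconds, "s/request ->", workers_needed(120, seconds, 4), "instances")
```

This yields 5 instances at 10 seconds per request and 15 at 30 seconds. It is only an average; bursts need headroom, which is where the ability to change a role’s instance count on demand matters.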

The guidance is to take any task that takes time and resources and delegate it to a Worker Role to keep the Web Role servers responsive to client requests. The power of this scenario is that we can scale the number of servers running in either role automatically in response to demand for the services. It is also possible to remove the UI component completely and make the solution a back end only for other UIs by replacing the Web Role with a WCF Service Web Role.
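
The delegation pattern can be sketched with Python’s standard library, strictly as an analogy. The assumptions: an in-memory `queue.Queue` stands in for the durable queue that would connect the roles (the article does not name one; Windows Azure Queue storage is a common choice), threads stand in for Worker Role instances, and `handle_request` and `slow_lookup` are hypothetical names. The point is that the Web Role side only enqueues work and answers immediately.

```python
import queue
import threading
import time

# Stand-in for a durable queue shared by Web Role and Worker Role instances.
work_queue = queue.Queue()
results: dict[str, str] = {}
results_lock = threading.Lock()


def handle_request(job_id: str) -> str:
    """Web Role side: accept the request, enqueue it, answer right away."""
    work_queue.put(job_id)
    return f"accepted {job_id}"


def slow_lookup(job_id: str) -> str:
    """Placeholder for the 10-30 second lookup; shortened for the demo."""
    time.sleep(0.01)
    return job_id.upper()


def worker_role() -> None:
    """Worker Role side: pull jobs until a None sentinel arrives."""
    while True:
        job_id = work_queue.get()
        if job_id is None:
            work_queue.task_done()
            break
        outcome = slow_lookup(job_id)
        with results_lock:
            results[job_id] = outcome
        work_queue.task_done()


# Two worker "instances"; scaling out means starting more of these.
workers = [threading.Thread(target=worker_role) for _ in range(2)]
for w in workers:
    w.start()

for n in range(5):
    print(handle_request(f"job-{n}"))  # returns without waiting for the work

work_queue.join()          # block until every queued job has been processed
for _ in workers:
    work_queue.put(None)   # one sentinel per worker to shut it down
for w in workers:
    w.join()
print(results)
```

Because the two sides share only the queue, the number of instances on each side can be changed independently, which is what makes scaling either tier on demand practical.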

Not too long ago, Microsoft added a third kind of Role to the available options: a Virtual Machine Role that lets you get the IaaS experience where needed and integrate it with the rest of your PaaS implementation. The Virtual Machine Role was added after the fact, and I was fortunate enough to be part of the beta offering. It speaks to the control freak hidden down inside those of us with a server management background, and it is a much more direct competitor for Amazon’s EC2 as it lets you do on Azure what that service provides.

Figure 2

Microsoft has made its way over the last decades by being a platform vendor. Windows is possibly the most recognizable platform, but it is not the only one offered by Microsoft. Office has been a platform for development for most of its existence. SharePoint has in recent years become a very popular platform. The .NET framework is part of the platform in each of these cases, and it is often thought of as a platform for development in and of itself.

With all these examples, why would we need yet another platform for Microsoft development? The answer is that all of these examples are platform choices, but Windows Azure is meant to represent platform evolution.

What if you did not have to maintain the servers that hosted your solutions? Would that make things easier for you and less expensive? How about being able to scale up your solution to hundreds of servers without bearing the cost of owning or even preparing the infrastructure for this expansion? All of these are the promises of PaaS.

In an ideal world, resources are like power. If you want more, you just draw it from the seemingly infinite well as needed. By standardizing everything, Microsoft can achieve economies of scale with something that normally is not known for being economical. Microsoft is a logical provider because it is its own best customer. It owns many disparate Web properties that require scale and, in some cases, elastic scale. When I first heard of Azure, I assumed that Microsoft was filling a need of its own that is also shared by most of the other companies hosting technology solutions around the world.

The primary competition for Azure is to simply keep hosting solutions yourself on your own servers in your own data centers. Another option is handing some of the responsibility to a provider while keeping much of the server care and feeding. Hosted servers have filled this role well, and there are variants ranging from a dedicated server where you do all the patching to a managed solution where a concierge-like service handles everything at great expense.

The developer connection

Thanks in large part to the coming rollout of the Windows 8 App Store, we will likely see a large increase in the number of applications that use Azure-based cloud storage. The Metro model practically demands cloud integration for online scenarios.

It was explicitly called out during the BUILD conference last September that this is the expected scenario to deliver rich connected experiences. As I have mentioned in the past, I am working on a project to bring a Metro-style application to market, and Azure does figure into the plan to share information between users when online. When competing against thousands of other offerings in a commodity space, which many apps will do in the App Store once things get going, any disruption will likely result in lost market share. Microsoft wants to lower the friction for independent software developers to get a ton of apps available on its newest platforms, namely Metro on Windows Phone 7 and Windows 8.

In my experience, there are two places that the average developer tends to get lost. The first is how to market and sell his or her solution. This is intended to be addressed by the App Store implementation Microsoft has been preparing and that we discussed a bit in last month’s article. The second is handling infrastructure to host his or her solution, especially when it outgrows the server in the basement or at its hosting provider.

We are seeing how Microsoft’s offerings are starting to reinforce one another. I asked Bagdonas why SubtleData selected Azure, and his reply was, “We selected Azure because our company needed a managed platform that allowed us to focus on software development rather than systems administration. Our requirement was to have a platform that easily scaled using technologies that we are already most comfortable with, namely Microsoft C# and Microsoft SQL Server.”

This is the goal of PaaS. Outsource everything (except the code) and get the solution out the door.

Understanding which kinds of usage make up the cost of the system is a major source of confusion for newcomers to Azure. When you log into your Azure account at windowsazure.com, you will see quite a few usage metrics for your account, and any of them can and will cost you money. Oddly enough, this is perhaps the most complicated part of Azure.

It is very important to understand all of these metrics, but the central one is compute hours. These are literally the hours that your solution is loaded on Azure systems, and that wording is deliberate. People have been known to load up a sample application, even one from the Hands-on Labs online, and then shut it off, assuming the charges stop there. The problem is that as long as the deployment stays loaded, it is ready to run and hence accrues charges.

Other metrics such as transactions and bandwidth will not accumulate unless the system is actually running. To help figure out what your costs might look like, there is a pricing calculator built right into the Windows Azure management interface.
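
The difference between “loaded” and “running” is easy to see with a toy calculation. The hourly rate below is a hypothetical placeholder, not Microsoft’s price list (use the pricing calculator for real figures), and `compute_charge` is an illustrative name; what matters is that compute charges scale with how long instances stay deployed, not with how much traffic they serve.

```python
# Placeholder rate for illustration only; not an actual Azure price.
HYPOTHETICAL_RATE_PER_INSTANCE_HOUR = 0.12


def compute_charge(instances: int, hours_deployed: float,
                   rate: float = HYPOTHETICAL_RATE_PER_INSTANCE_HOUR) -> float:
    """Compute-hour charge: every deployed instance bills every hour,
    whether or not it handles a single request."""
    return round(instances * hours_deployed * rate, 2)


# A two-instance sample app left loaded (even if "shut off") for a 30-day
# month, versus deleting the deployment after a 4-hour lab session.
forgotten = compute_charge(instances=2, hours_deployed=30 * 24)
deleted_after_lab = compute_charge(instances=2, hours_deployed=4)
print(forgotten, deleted_after_lab)
```

Transaction and bandwidth charges are left out on purpose: as noted above, they accumulate only when the system is actually doing work.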

Getting help with your Azure implementation is getting easier as time goes on, as more consulting firms turn to providing this kind of service or are founded around providing Azure expertise. An old friend of mine from the Boston developer community, Ben Day, has been doing this kind of work from the start, and Steve Marx, who has been a technical strategist on Windows Azure at Microsoft since the first public demo, has recently joined Aditi as its chief Windows Azure architect. We can expect more companies to provide consulting on the Azure platform, and should even expect some fairly specific specialization.

As an example of this, there are those who know the entire platform well but specialize in the harder bits. In my experience, AppFabric and the Service Bus are the hardest parts of architecting a large solution on Azure. For smaller implementations, you could get away without them entirely, but where is the fun in that? The good news is that there are people who know how to translate communication requirements into an implementation; Christian Weyer of Thinktecture and Juval Lowy of IDesign, along with their cohorts, are two prominent examples.

Microsoft is also helping companies get their solutions started with Windows Azure. Aside from including Azure resources with MSDN subscriptions in accordance with the level of MSDN SKU (i.e., higher-end SKUs get more compute time each month), it is also including Azure as part of its BizSpark program. SubtleData’s Bagdonas said, “We have had a great experience building in Azure because of the technical, developer and architectural support we have received as part of the Microsoft BizSpark program.”

Microsoft is deadly serious about making Azure the path to the future for itself and its clients. Confirmation of that came when Scott Guthrie, a Microsoft corporate vice president, and many of the best and brightest at Microsoft were brought in to concentrate on development on Windows Azure.

Reliability matters

A critical assumption about Windows Azure has been that hosting solutions on such a massive system, run by a company whose many properties have such strong uptime records, will translate directly into uptime for your solution. But it takes time for this assumption to be proven. Reliability is like security: It is all about risk and probability. The more resources and effort invested, the higher the expected uptime should be, but the devil is in the details, and costs climb very fast as you chase smaller and smaller gains in reliability.

During the Dot-Com boom, the discussion was about the “number of nines” you could achieve. My experience with server-based solutions in general is that 99% uptime is easy to get with redundant power and ping (a second path to the Internet), and a little extra spent on the server specs, such as error-correcting memory and RAID options on the disk. That sounds good until you do the math and realize that 1% downtime is more than three full days a year of the solution being offline. At 99.9% reliability, downtime drops tenfold, to a little under 9 hours a year.

There are levels beyond this, of course, all the way to the coveted “five nines,” or 99.999%, which allows only about five minutes of downtime per year, but most solutions do quite well at 99.9%. The reason the world does not simply do what it takes to deliver 99.999% or even 100% reliability is that the former is ridiculously expensive and tricky to accomplish, and the latter is flat-out impossible in the real world.
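
The arithmetic behind the “number of nines” is simple enough to check by hand. This sketch converts an availability percentage into expected downtime over a 365-day year, ignoring leap years and planned maintenance windows.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; leap years ignored


def downtime_hours_per_year(availability_percent: float) -> float:
    """Expected hours offline per year at a given availability."""
    return HOURS_PER_YEAR * (100.0 - availability_percent) / 100.0


for availability in (99.0, 99.9, 99.99, 99.999):
    hours = downtime_hours_per_year(availability)
    print(f"{availability}%: {hours:.2f} hours ({hours * 60:.1f} minutes)")
```

99% works out to 87.6 hours (about 3.65 days), 99.9% to 8.76 hours, and 99.999% to roughly 5.3 minutes a year, which matches the figures above. Each extra nine cuts allowed downtime by a factor of ten, while the cost of achieving it grows much faster.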

Microsoft and all the other cloud providers are loath to state overtly in their Service Level Agreements (SLAs) exactly what levels are supported on their services. I have yet to see any of them present an actual number in terms of time.

Microsoft has an entry in its support FAQ that says, “How will the Windows Azure, SQL Azure, Caching, Service Bus and Access Control SLA agreements work with current on-premise Microsoft licensing agreements?” The answer to that item is, “Windows Azure, SQL Azure, Caching, Service Bus and Access Control are independent of our on-premises Microsoft licensing agreements. Our SLAs for Windows Azure provide you a monthly uptime guarantee for those services you consume in the cloud, with SLA credits against what we have billed you in the event we fail to meet the guarantee.”

My understanding is that if there is an outage, the remuneration comes as a credit for the hours during which the system was down.

It is not surprising that, while they are still pioneering, the providers do not want to name a number and be held to it. After all, they will be judged by their actual performance in the end. Unfortunately, there has been plenty to judge, with both Amazon’s EC2 and Microsoft Windows Azure having outages in the last year or so of kinds that were assumed to have been designed out of the system. Most recently, Azure had a problem triggered by February’s leap day. To its credit, Microsoft has been very transparent, including updates and a post to the Windows Azure blog explaining what happened. You can read the summary online, but the gist is that a bad date calculation, combined with an update that was rolling out at the time, set off and then accelerated the outage.
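
Leap-day failures usually come down to naive date arithmetic. The sketch below is a generic illustration, not Microsoft’s code: “adding a year” by bumping the year field of February 29 produces a date that does not exist. In Python, `date.replace` raises `ValueError` in that case; the hypothetical helper `add_one_year` shows one safe policy, clamping to February 28.

```python
from datetime import date


def naive_add_one_year(d: date) -> date:
    """Bumps the year field; fails when d is February 29."""
    return d.replace(year=d.year + 1)


def add_one_year(d: date) -> date:
    """Adds a year, clamping February 29 to February 28 in non-leap years."""
    try:
        return d.replace(year=d.year + 1)
    except ValueError:
        # Only February 29 can become invalid when just the year changes.
        return d.replace(year=d.year + 1, day=28)


leap_day = date(2012, 2, 29)
try:
    naive_add_one_year(leap_day)
except ValueError as err:
    print("naive version failed:", err)
print("safe version:", add_one_year(leap_day))  # 2013-02-28
```

Whether to clamp to February 28 or roll forward to March 1 is a policy decision; the bug lies in code that never makes the decision and only fails one day in four years.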

As stated before, there are no perfect systems and no such thing as 100% uptime. The question is how the provider copes when things go wrong. I have often heard it said that any fool can manage when things are going well, but it takes a pro to manage when things have gone off the rails. If issues are frequent, repeated or extended, confidence will be lost no matter what the stories behind those outages are.

I still have plans to use Azure in several upcoming projects, and nothing has changed in that regard, except I will be sure to double-check what my fallback plans will need to be if there is an outage of the platform. This is a best practice no matter what you use to run your solution if even small stretches of downtime cannot be tolerated. To be fair, I see the outages Amazon had a while back in the same light.
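
At the application level, a fallback plan can start as simply as trying a secondary deployment when the primary one fails. This is a minimal sketch under stated assumptions: `call_with_fallback`, `primary` and `secondary` are hypothetical names, and the outage is simulated with a `ConnectionError`. A real plan would also have to cover data replication, traffic routing and how long to wait before switching.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def call_with_fallback(endpoints: Sequence[Callable[[], T]]) -> T:
    """Try each endpoint in order and return the first successful result."""
    errors: list[Exception] = []
    for endpoint in endpoints:
        try:
            return endpoint()
        except ConnectionError as err:  # treated as "this deployment is down"
            errors.append(err)
    raise RuntimeError(f"all {len(endpoints)} endpoints failed: {errors}")


def primary() -> str:
    raise ConnectionError("primary deployment unavailable")  # simulated outage


def secondary() -> str:
    return "served by secondary deployment"


print(call_with_fallback([primary, secondary]))
```

Ordering the endpoints explicitly keeps the failover policy visible in one place, which makes it easier to review when deciding how much downtime a solution can tolerate.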

Moving forward

Windows Azure is a tool that can help you solve problems in much the same way that the .NET Framework on Windows is a tool. We can expect it to change a bit over time as Microsoft seeks to fill in the holes that remain and tries to maintain the balance in its pricing structure to keep the whole system profitable while not scaring away the clientele.

The cloud is not guaranteed to be part of your IT future in the next few years, but it is a good bet that it will be one way or another, even if only via the solutions your vendors provide. The options can be confusing, so it is best to tackle understanding them one variable at a time. Most of the organizations I have polled that are using Azure are happy with what it provides and plan to expand their use going forward. The recent outage is a bump in the road, to be sure; however, the savings and other benefits to be achieved are too great to stop trying.

Editor’s note: Due to customer feedback, Microsoft has simplified its branding so that the AppFabric name is now part of the broader Windows Azure brand. The features and services of the Service Bus technology remain unchanged.