Let’s flash back to New Year’s Eve 1999, when we were popping corks to celebrate the arrival of a new millennium and, as the ball dropped, the fact that Y2K hadn’t plunged us into the Dark Ages. All the while, we were blissfully unaware that the dot-com bubble was about to burst (even though it was obvious in hindsight!).

Over the last 20 years, we have seen massive changes in how we design, develop, and deploy applications: from monolithic applications to microservices, and from desktop and static web applications to highly interactive web and mobile applications. The changes happened incrementally, but the cumulative impact has been dramatic.

One of the key mantras over the last 20 years has been the drive to accelerate delivery (“get it out sooner than the competition”), powered by the rise of Agile+DevOps. The Phoenix Project (by Gene Kim, George Spafford, and Kevin Behr) became required reading for anyone trying to transform their software delivery process. While some organizations have achieved the goal of “Continuous Delivery,” many are still struggling to adopt some of its basic principles.

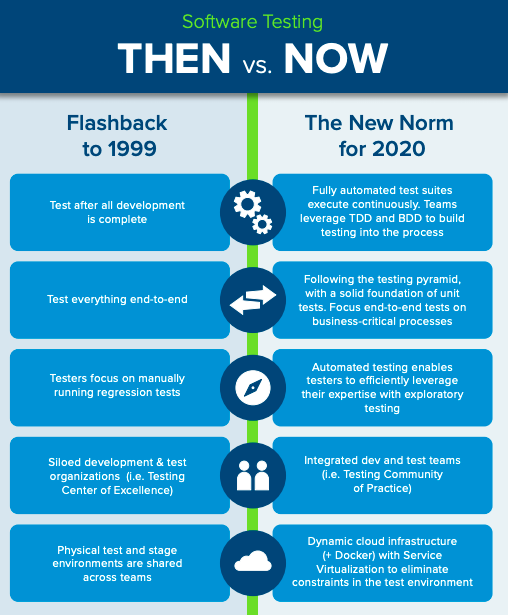

Organizations that are succeeding in 2020 have transformed not only their development activities but also how they approach testing, starting with when they first think about it. Traditionally, testing was an afterthought, something we did when all the development was done. The leaders in 2020 think about testing from the very beginning, leveraging TDD and BDD practices to build fully automated testing into the development process. These teams also recognize that you can’t test everything end-to-end, and many look to the Testing Pyramid (advocated by Agile experts Martin Fowler and Michael Cohn) as a strategy for building a scalable test automation practice, freeing testers to spend their time on valuable exploratory testing rather than tedious manual regression testing.

The structure of the teams themselves has also changed. If you still have a test team that is airdropped into a project to do the testing, this needs to change. The only way to succeed with Agile+DevOps is to integrate these roles into a unified team that is working toward the same goals.

Lastly, we get to the test infrastructure. Gone are the days of the monolithic application, and so are the days of a test environment that you could deploy on a single machine and share across teams. Today’s modern architectures require access to multiple back-end systems, and every team needs that access “now.” By leveraging both cloud infrastructure and techniques such as Service Virtualization, teams can gain control over their complex test environments and test on demand, anytime, anywhere.

Looking to 2040

Now that we are in 2020 and at the dawn of a new decade, what does the future hold? Certainly, AI is going to play a large part in the way we both develop and test applications.

Many would say we are still in the Dark Ages when it comes to automated UI and manual testing, even though many AI-powered testing technologies have been released over the last couple of years. That trend is sure to grow over the coming years, but where will it ultimately end up? My prediction is autonomous testing, in which the AI does all the mundane work, taking care of both test creation and execution, while the human reviews the results. Even so, AI will continue to be assistive, augmenting the tester and making the tester’s insight and domain expertise more valuable.

But what about testing AI-infused applications? More and more organizations are using AI to help gain insight into their business and streamline decision-making processes. But how do you test AI? While the traditional approach of using sample datasets is a great starting point, this is still an area of active research. Due to the non-deterministic nature of evolving AI systems, traditional testing techniques will also need to evolve. We will need to change the way we think about testing, moving away from a binary pass/fail status and toward gated acceptance (guardrails, if you like) that determines whether the algorithm is drifting too far off course.
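To make the idea of gated acceptance a little more concrete, here is a minimal Python sketch. It assumes a model is scored against a fixed sample dataset and that each tracked metric has a baseline and an allowed tolerance; the `Guardrail` class, the `accuracy` and `evaluate` helpers, the metric names, and every number are hypothetical illustrations rather than part of any particular testing tool. Instead of asserting an exact output, each check asks whether a metric is still inside its acceptance band.

```python
# Hypothetical guardrail sketch: names, metrics, and thresholds are
# illustrative assumptions, not taken from any specific testing product.
from dataclasses import dataclass


@dataclass
class Guardrail:
    """Acceptance band for one model metric."""
    name: str
    baseline: float   # value observed when the model was last accepted
    tolerance: float  # maximum allowed absolute deviation from the baseline

    def within_band(self, observed: float) -> bool:
        return abs(observed - self.baseline) <= self.tolerance


def accuracy(predictions, labels):
    """Fraction of predictions that match the sample dataset's labels."""
    correct = sum(1 for p, y in zip(predictions, labels) if p == y)
    return correct / len(labels)


def evaluate(guardrails, observed):
    """Return the names of metrics that drifted outside their band.

    An empty list means the model passes the gate; a non-empty list
    flags drift for human review instead of failing on exact outputs.
    """
    return [g.name for g in guardrails if not g.within_band(observed[g.name])]


if __name__ == "__main__":
    # Hypothetical sample dataset: labels, current predictions, and scores.
    labels = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]
    predictions = [1, 0, 1, 0, 0, 1, 0, 0, 1, 1]
    scores = [0.9, 0.2, 0.8, 0.4, 0.1, 0.7, 0.3, 0.2, 0.9, 0.8]

    observed = {
        "accuracy": accuracy(predictions, labels),
        "mean_score": sum(scores) / len(scores),
    }
    guardrails = [
        Guardrail("accuracy", baseline=0.92, tolerance=0.05),
        Guardrail("mean_score", baseline=0.45, tolerance=0.05),
    ]
    print(evaluate(guardrails, observed))  # prints ['mean_score']
```

Here accuracy (0.9) still sits within its band, but the average prediction score (about 0.53) has moved further from its baseline than allowed, so the run is flagged for review rather than simply marked as failed. How baselines and tolerances are chosen remains a judgment call for each team.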

Lastly, edge computing is going to make the non-deterministic behavior of applications even more complicated. Dedicated devices that run at the edge are going to change the way we deploy software, the same way that mobile devices changed the way we consume … everything.

Evolve today and plan for tomorrow.

As we head into the next 20 years, the changes will surely be incremental, so don’t worry. Take stock of your current state and prepare for the future … and keep reading SD Times for another 20 years of industry insights … Happy testing!