Google has announced a new set of services aimed at simplifying machine learning operations (MLOps) for data scientists and machine learning (ML) engineers.

According to Google, companies are using machine learning to solve challenging problems, but machine learning systems can create unwanted technical debt if they are not managed well. Google noted that, on top of all the challenges of traditional code, machine learning brings its own maintenance challenges: unique hardware and software dependencies, the need to test and validate data as well as code, and the fact that models degrade over time as technology advances.


“Put another way—creating an ML model is the easy part—operationalizing and managing the lifecycle of ML models, data and experiments is where it gets complicated,” Craig Wiley, director of product management for the Cloud AI Platform at Google, wrote in a post.

One of the new services is a fully managed service for ML pipelines that allows customers to build pipelines using TensorFlow Extended's pre-built components and templates. According to Wiley, this significantly reduces the effort needed to deploy models. The service will be available in preview in October.

Another new service is Continuous Monitoring, which monitors models in production and alerts admins if models go stale or if it detects outliers, skew, or concept drift. This will enable teams to quickly intervene and retrain models. Continuous Monitoring will be available by the end of 2020.

The final new service is Feature Store, a repository of historical and latest feature values. The service will help enable reuse within ML teams and boost user productivity by eliminating redundant steps. According to Wiley, Feature Store will also offer tools that mitigate common causes of inconsistency between the features used for training and those used for prediction.

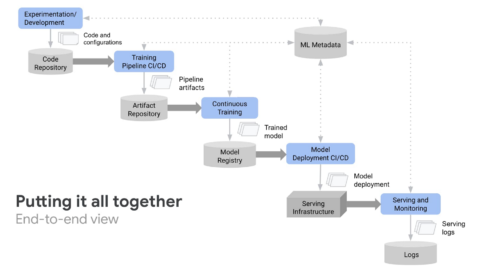

“Practicing MLOps means that you advocate for automation and monitoring at all steps of ML system construction, including integration, testing, releasing, deployment and infrastructure management. The announcements we’re making today will help simplify how AI teams manage the entire ML development lifecycle. Our goal is to make machine learning act more like computer science so that it becomes more efficient and faster to deploy, and we are excited to bring that efficiency and speed to your business,” Wiley wrote.