AI use is growing rapidly. As with most “new” technologies, organizations are focusing on the potential opportunities without giving equal weight to the potential risks. While it seems that just about everyone is talking about AI, a less-discussed topic, though one growing in volume and frequency, is AI ethics, which asks whether categories of AI systems and individual implementations will advance human well-being. Rather than focusing narrowly on whether something can be done, AI ethics also considers whether it should be done.

It’s already apparent that some things shouldn’t have been done, as evidenced by the outrage over the Cambridge Analytica/Facebook debacle. When Google dissolved its AI ethics board just one week after announcing it, lots of people had opinions about what went wrong: the decision to establish an ethics board in the first place, the people selected for the panel, whether Google’s effort was genuine or a marketing ploy, and so on.

Meanwhile, organizations around the globe are discussing ethical AI design and what “trust” should mean in light of self-learning systems. However, for AI ethics to have any real effect, it must be implementable as code.

“People in technology positions don’t have formal ethics training,” said Briana Brownell, a data scientist and CEO of technology company PureStrategy. “Are we expecting software developers to have that ethical component in their role, or do we want to have a specific ethics role within the organization to handle that stuff? Right now, it’s very far from clear what will work.”

Where Google erred

Some organizations are setting up ethics boards that combine behavioral scientists, professional philosophers, psychologists, anthropologists, risk management professionals, futurists and other experts. Their collective mission is to help a company reduce the likelihood of “unintended outcomes” that conflict with the company’s charter and with societal norms. They carefully consider how the company’s AI systems could be used, misused and abused, and they provide insights that technologists alone would probably lack.

In Google’s case, the central question was whether the members of its ethics board could themselves be considered ethical. Of particular concern were drone company founder Dyan Gibbens and Heritage Foundation president Kay Coles James. Gibbens’ presence reignited concerns about the military’s use of Google technology. James was known for speaking out against LGBTQ rights.

“One of the core challenges when you assemble these kinds of panels is what ethics do you want to represent on the panel,” said Phillip Alvelda, CEO and founder of Brainworks, a former program manager at the U.S. Defense Advanced Research Projects Agency (DARPA) and former NASA rocket scientist. “My understanding is that despite Google’s well-intentioned efforts to engage in a leadership practice of thinking deeply and considering ethics and making that part of the process of developing products, they ended up caught in a fight about what were the proper ethical backgrounds of the people included on the panel.”

Law enforcement solutions company Axon was one of the first companies to set up an AI ethics board. Despite Axon’s genuine desire to effect positive change, 42 organizations signed an open letter to its ethics board underscoring the need for product-related ethics (ethical design), the need to consider downstream uses of the product (ethical use) and the need to involve various stakeholders, including those who would be most affected by the technology (ethical outcomes).

It’s not that AI ethics boards are a bad idea. It’s just that AI ethics isn’t a mature topic yet, so it is exhibiting early-market symptoms.

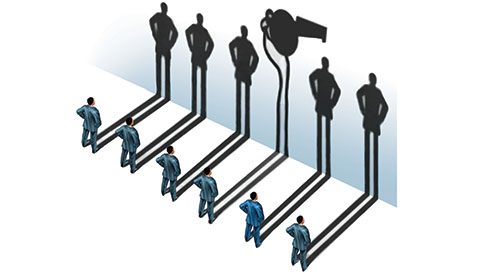

The whistleblowers are coming

Christopher Wylie, who worked at Cambridge Analytica’s parent company SCL Group, was one of several whistleblowers who exposed the Facebook-based meddling in the 2016 U.S. presidential campaign. Interestingly, he was also the person who devised the plan to harvest Facebook data to create psychological and political profiles of individual users.

Jack Poulson, a former Google research scientist, was one of five Google employees who resigned over Project Dragonfly, a censored mobile search engine Google was prototyping for the Chinese market. Project Maven caused even bigger ripples when 3,100 Google employees signed a letter protesting Google’s work on the U.S. Department of Defense project, which used Google’s AI technology to analyze drone footage. In that case, a dozen employees quit.

“I would assume that there are some people who feel like speaking out means their job would be jeopardized. In other cases, people feel freer or motivated to speak out,” said Amy Webb, CEO of the Future Today Institute, foresight professor at New York University’s Stern School of Business, and author of The Big Nine: How Tech Titans and Their Thinking Machines Could Warp Humanity. “I think the very fact we’ve now seen a lot of folks from the big tech companies taking out ads in papers, writing blog posts and speaking at conferences is a sign that there’s some amount of unrest.”

Right now, whistleblowing about AI ethics is in a nascent state, which is not surprising given that most companies are still experimenting with AI, piloting it or running relatively early-stage implementations. Meanwhile, technologists, policymakers, professors, philosophers and others are helping to advance the AI ethics work being done by the Future of Life Institute, the World Economic Forum and the Institute of Electrical and Electronics Engineers (IEEE). In fact, standards, frameworks, certification programs and various forms of educational materials are emerging rapidly.

What’s next?

AI-specific laws and regulations are coming, though not as quickly as data privacy laws such as the EU’s General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA), both of which were designed for the algorithmic age.

For now, software developers and their employers need to decide for themselves what they value and what they’re willing to do about it, because no AI ethics compliance regime exists yet.

“If you’re a company designing something, what are your norms, your ethical guidelines, and your operational procedures to make sure that what you develop upholds the ethics of the company?” said Brainworks’ Alvelda. “When you’re building something that has to embody some sort of ethics, what technologies, machineries and development practices do you apply to ensure what you build actually reflects what the company intends? [All of this] sits in a larger environment where [you have to ask] how well does it align with the ethics of the community and the world at large?”

Designers and developers should learn about, and strive to create, ethical AI to avoid unethical outcomes. However, technologists cannot enable ethical AI alone because it requires the support of entire ecosystems. Within a company, it’s about upholding values from the boardroom to the front lines. Outside an organization, investors and shareholders must make AI ethics a prerequisite to funding, and consumers must make AI ethics a brand issue. Until then, companies and individuals are largely free to do as they please, barring intervention by the courts, legislators and regulators or a major adverse event (which many AI experts expect to happen).

Developers should be thinking about AI ethics now because it will become part of their reality in the not-too-distant future. Already, some of the tools they use and perhaps some of the products and services they build include self-learning elements. While developers aren’t solely responsible for ethical AI, accountability will arise when an aggrieved party demands to know who built the thing in the first place.