Google is making a number of advances in the area of machine learning this week, from the release of TensorFlow 2.0 to updates to its Vision AI portfolio.

TensorFlow is Google’s open-source machine learning library. Version 2.0 provides an ecosystem of tools for developers and researchers looking to push the boundaries of machine learning and build scalable machine learning-powered applications, the TensorFlow team explained.

TensorFlow 2.0 now integrates tightly with Keras, the Python deep learning library, and adds eager execution by default and Pythonic function execution. The team believes these features will make developing applications with TensorFlow feel more familiar to Python developers.
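The following is a minimal sketch of what these three features look like in practice for readers new to the 2.x API. It assumes TensorFlow 2.x is installed. The function name `scaled_sum` and the toy data are hypothetical and are used only for illustration.

```python
import tensorflow as tf

tf.random.set_seed(0)

# Eager execution (the TF 2.x default): ops run immediately and return concrete values.
x = tf.constant([[1.0, 2.0], [3.0, 4.0]])
y = tf.matmul(x, x)  # y holds [[7, 10], [15, 22]] right away, no session needed

# Pythonic function execution: tf.function traces an ordinary Python function
# into a graph. `scaled_sum` is a hypothetical example function.
@tf.function
def scaled_sum(a, scale):
    return tf.reduce_sum(a) * scale

total = scaled_sum(x, tf.constant(2.0))  # (1 + 2 + 3 + 4) * 2 = 20.0

# Keras integration: the high-level model API ships as tf.keras.
features = tf.random.normal((32, 2))  # hypothetical toy inputs
targets = tf.reduce_sum(features, axis=1, keepdims=True)
model = tf.keras.Sequential([
    tf.keras.Input(shape=(2,)),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(1),
])
model.compile(optimizer="adam", loss="mse")
model.fit(features, targets, epochs=2, verbose=0)
```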

The team also invested heavily in the library’s low-level API for this release. It now exports internally used ops and provides inheritable interfaces for concepts like variables and checkpoints. According to the TensorFlow team, this will allow developers to “build onto the internals of TensorFlow without having to rebuild TensorFlow.”
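The article does not name the specific interfaces. One building block in the 2.x API that fits the description is `tf.Module`, a base class that automatically tracks the variables assigned to it and works with `tf.train.Checkpoint`. Below is a minimal sketch, assuming TensorFlow 2.x. The `Affine` class is hypothetical.

```python
import os
import tempfile

import tensorflow as tf

class Affine(tf.Module):
    """Hypothetical module computing y = x * w + b on top of tf.Module."""

    def __init__(self, name=None):
        super().__init__(name=name)
        # Variables assigned as attributes are tracked automatically.
        self.w = tf.Variable(2.0, name="w")
        self.b = tf.Variable(1.0, name="b")

    def __call__(self, x):
        return x * self.w + self.b

layer = Affine()
num_vars = len(layer.trainable_variables)  # 2: w and b

# Save the module's variables to a checkpoint in a temporary directory.
ckpt = tf.train.Checkpoint(model=layer)
save_path = ckpt.save(os.path.join(tempfile.mkdtemp(), "ckpt"))

# Overwrite a variable, then restore the saved value from the checkpoint.
layer.w.assign(10.0)
ckpt.restore(save_path)
restored = float(layer(tf.constant(3.0)))  # 3 * 2 + 1 = 7.0
```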

Other features in TensorFlow 2.0 include:

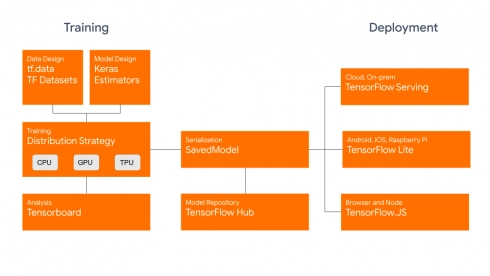

- Standardization on the SavedModel file format, which will allow developers to run models on various runtimes

- Distribution Strategy API, which distributes training with minimal code changes

- Performance improvements on GPUs

- TensorFlow Datasets, which provide a standard interface for datasets
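The sketch below shows three of these pieces working together, assuming TensorFlow 2.x:

- a `tf.data` input pipeline (`tfds.load` from TensorFlow Datasets also returns `tf.data.Dataset` objects);
- Keras training under `tf.distribute.MirroredStrategy`;
- a SavedModel export.

To keep the export step simple, it saves a small hypothetical `Scaler` module rather than the Keras model. The toy data and names are also illustrative only.

```python
import os
import tempfile

import tensorflow as tf

# Input pipeline on toy data. tfds.load() from TensorFlow Datasets also
# yields tf.data.Dataset objects, so the rest of the code would look the same.
features = tf.random.normal((64, 4), seed=1)
labels = tf.reduce_sum(features, axis=1, keepdims=True)
dataset = tf.data.Dataset.from_tensor_slices((features, labels)).batch(16)

# Distribution Strategy: build and compile inside the scope. MirroredStrategy
# replicates across all visible GPUs, or runs on a single device if none are found.
strategy = tf.distribute.MirroredStrategy()
with strategy.scope():
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(4,)),
        tf.keras.layers.Dense(1),
    ])
    model.compile(optimizer="sgd", loss="mse")
model.fit(dataset, epochs=1, verbose=0)

# SavedModel: export a small hypothetical module with a fixed input signature
# so it can be reloaded without the original Python class.
class Scaler(tf.Module):
    def __init__(self):
        super().__init__()
        self.factor = tf.Variable(3.0)

    @tf.function(input_signature=[tf.TensorSpec(shape=[None], dtype=tf.float32)])
    def __call__(self, x):
        return x * self.factor

export_dir = os.path.join(tempfile.mkdtemp(), "scaler")
tf.saved_model.save(Scaler(), export_dir)
reloaded = tf.saved_model.load(export_dir)
```

Keras models can also be exported to the SavedModel format. This sketch omits that call because it has changed across Keras versions.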

AutoML Vision Edge, AutoML Video, and Video Intelligence API updates

Google also announced updates to AutoML Vision Edge, AutoML Video, and Video Intelligence API, which are all part of Google’s Vision AI portfolio. AutoML Vision Edge and AutoML Video were both introduced earlier this year, in April.

“Whether businesses are using machine learning to perform predictive maintenance or create better retail shopping experiences, ML has the power to unlock value across a myriad of use cases. We’re constantly inspired by all the ways our customers use Google Cloud AI for image and video understanding—everything from eBay’s use of image search to improve their shopping experience, to AES leveraging AutoML Vision to accelerate a greener energy future and help make their employees safer. Today, we’re introducing a number of enhancements to our Vision AI portfolio to help even more customers take advantage of AI,” Google product managers Vishy Tirumalashetty and Andrew Schwartz wrote in a post.

AutoML Vision Edge can now perform object detection and image classification on edge devices. According to the team, object detection is important for scenarios like identifying part of an outfit in a shopping app, detecting defects on a conveyor belt, or assessing inventory on a retail shelf.

AutoML Video Intelligence can now also perform object detection, allowing it to track the movement of objects between frames. This will be useful for traffic management, sports analytics, and robotic navigation, among other use cases.

Finally, the Video Intelligence API, which offers pre-trained machine learning models that can recognize a wide range of objects in video, can now detect, track, and recognize the logos of popular businesses and organizations. According to Google, it can recognize more than 100,000 logos, making it ideal for use cases such as brand safety, ad placement, and sports sponsorship.