Google wants to improve developers' ability to integrate machine learning technology into their applications, and it has announced four new APIs in its ML Kit.

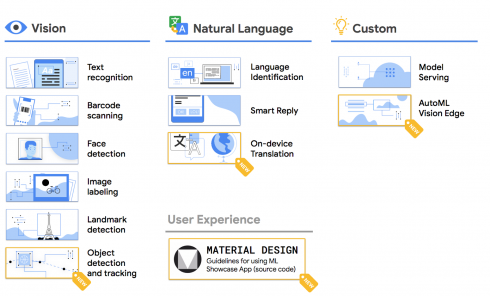

The new APIs are object detection, tracking, on-device translation and AutoML Vision Edge.

“We see strong engagement from users who use these features and as we are extending ML Kit with more APIs, we see this engagement accelerating,” said Christiaan Prins, a product manager at Google.

The company said it launched the new APIs in response to developer feedback that focused on three core themes: more base APIs, help with UX, and greater customizability.

Through the new Object Detection and Tracking API, ML Kit aims to provide a real-time visual search experience. According to the company, the API can be paired with a cloud solution to track an object's coordinates as well as its classification.

Google’s Translation API now runs on-device, which means users can translate dynamically even while offline. According to the company, it can be used to communicate with people who don’t speak the same language or to translate content.

The AutoML Vision Edge API lets users create custom image classification models that run locally on users’ devices. Google explained that this is useful when an app needs to classify things such as different types of food or animals.

Additionally, the company worked with the Material Design team to provide new design patterns for ML apps. The patterns are now open sourced and available on the Material.io website.