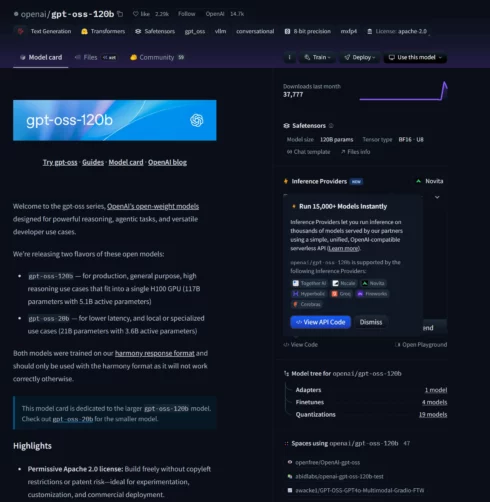

OpenAI is joining the open-weight model game with the launch of gpt-oss-120b and gpt-oss-20b.

The larger model, gpt-oss-120b, is optimized for production and high-reasoning use cases, while gpt-oss-20b is designed for lower-latency or local use cases.

According to the company, these open models are comparable to its closed models in terms of performance and capability, but at a much lower cost. For example, gpt-oss-120b running on an 80 GB GPU achieved similar performance to o4-mini on core reasoning benchmarks, while gpt-oss-20b running on an edge device with 16 GB of memory was comparable to o3-mini on several common benchmarks.

“Releasing gpt-oss-120b and gpt-oss-20b marks a significant step forward for open-weight models,” OpenAI wrote in a post. “At their size, these models deliver meaningful advancements in both reasoning capabilities and safety. Open models complement our hosted models, giving developers a wider range of tools to accelerate leading edge research, foster innovation and enable safer, more transparent AI development across a wide range of use cases.”

The new open models are ideal for developers who want to customize and deploy models in their own environment, while developers looking for multimodal support, built-in tools, and integration with OpenAI’s platform would be better served by the company’s closed models.

Both new models are available under the Apache 2.0 license. They are compatible with OpenAI’s Responses API, can be used within agentic workflows, and provide full chain-of-thought.
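To give a rough sense of what Responses API compatibility means in practice, the sketch below builds (but does not send) a minimal request with a `model` and an `input` field. The base URL is an assumption standing in for whatever self-hosted, Responses-compatible server a developer might run, and the helper name `build_responses_request` is hypothetical; none of this comes from OpenAI's announcement.

```python
import json
import urllib.request

# Assumption: a locally hosted, Responses-compatible server at this address.
# Replace it with the endpoint of whichever provider or runtime you use.
BASE_URL = "http://localhost:8000/v1"


def build_responses_request(prompt, model="gpt-oss-20b", base_url=BASE_URL):
    """Build a POST request for a Responses-style endpoint without sending it."""
    payload = {
        "model": model,   # which model the server should run
        "input": prompt,  # the user's text input
    }
    return urllib.request.Request(
        url=f"{base_url}/responses",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    request = build_responses_request("Summarize the Apache 2.0 license in one sentence.")
    # Inspect the request; sending it would need urllib.request.urlopen(request)
    # and a server actually listening at BASE_URL.
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```

Because the request is only constructed, the example runs without a server. Actually sending it, and the shape of the reply, depends on the deployment in use.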

According to OpenAI, these models were trained using its advanced pre- and post-training techniques, with a focus on reasoning, efficiency, and real-world usability in various types of deployment environments.

Both models are available for download on Hugging Face and are quantized in MXFP4, which enables gpt-oss-120b to run with 80 GB of memory and gpt-oss-20b to run with 16 GB. OpenAI also created a playground for developers to experiment with the models online.
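A back-of-the-envelope calculation helps show why 4-bit quantization makes those memory figures plausible. The sketch below assumes the MXFP4 layout from the OCP Microscaling format (4-bit elements sharing one 8-bit scale per block of 32 values, or about 4.25 bits per parameter), takes the parameter counts at face value from the model names, and pretends every weight is quantized. Real checkpoints may keep some tensors at higher precision, and activations and the KV cache also need memory, so treat the numbers as rough estimates rather than OpenAI's own accounting.

```python
# Rough weight-memory estimate under MXFP4 quantization.
ELEMENT_BITS = 4   # each quantized value is a 4-bit float
SCALE_BITS = 8     # one shared 8-bit scale per block
BLOCK_SIZE = 32    # number of values sharing a scale

# Assumption: nominal parameter counts read off the model names.
NOMINAL_PARAMS = {"gpt-oss-120b": 120e9, "gpt-oss-20b": 20e9}
# Memory budgets quoted in the article, in GB.
MEMORY_BUDGET_GB = {"gpt-oss-120b": 80, "gpt-oss-20b": 16}


def mxfp4_bits_per_param() -> float:
    """Effective bits per parameter, including the amortized block scale."""
    return ELEMENT_BITS + SCALE_BITS / BLOCK_SIZE


def weight_memory_gb(num_params: float) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes)."""
    return num_params * mxfp4_bits_per_param() / 8 / 1e9


if __name__ == "__main__":
    for name, params in NOMINAL_PARAMS.items():
        estimate = weight_memory_gb(params)
        budget = MEMORY_BUDGET_GB[name]
        print(f"{name}: ~{estimate:.2f} GB of weights vs. {budget} GB budget")
```

Under these assumptions the weights come to roughly 64 GB and 11 GB, which leaves headroom inside the 80 GB and 16 GB budgets for everything else a running model needs.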

The company partnered with several deployment providers for these models, including Azure, vLLM, Ollama, llama.cpp, LM Studio, AWS, Fireworks, Together AI, Baseten, Databricks, Vercel, Cloudflare, and OpenRouter. It also worked with NVIDIA, AMD, Cerebras, and Groq to help ensure consistent performance across different systems.

As part of the initial release, Microsoft will bring GPU-optimized versions of the smaller model, gpt-oss-20b, to Windows devices.

“A healthy open model ecosystem is one dimension to helping make AI widely accessible and beneficial for everyone. We invite developers and researchers to use these models to experiment, collaborate and push the boundaries of what’s possible. We look forward to seeing what you build,” the company wrote.