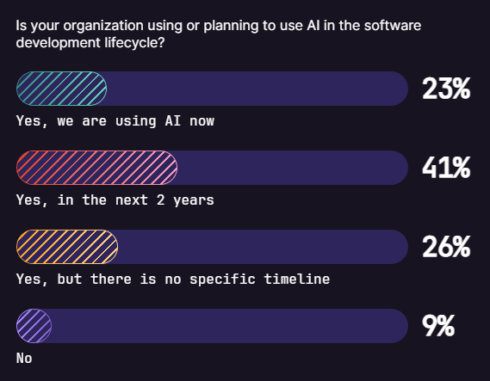

Only 23% of development teams are actually implementing AI today in their software development life cycle.

This is according to GitLab’s State of AI in Software Development report, which surveyed over 1,000 DevSecOps professionals in June 2023.

Adoption is low for now, but once teams that plan to use AI are counted, the figure climbs to 90%: on top of the 23% already using it, 41% plan to adopt AI within the next two years and 26% plan to adopt it but don’t know when. Only 9% said they were neither using nor planning to use AI.

Among respondents who plan to use AI, at least a quarter of their DevSecOps team members already have access to AI tools.

Most respondents agreed that they will need further training to adopt AI in their work. “A lack of the appropriate skill set to use AI or interpret AI output was a clear theme in the concerns identified by respondents. DevSecOps professionals want to grow and maintain their AI skills to stay ahead,” GitLab wrote in the report.

The top learning resources were books, articles, and online videos (49%); educational courses (49%); practicing with open-source projects (47%); and learning from peers and mentors (47%).

According to GitLab, 65% of respondents plan to hire new talent to manage AI in the software development life cycle to address the lack of in-house skills.

Most respondents (83%) also agreed that implementing AI will be important to staying competitive.

Among the 23% already using AI, 49% use it multiple times a day, 11% once a day, 22% several times a week, 7% once a week, 8% several times a month, and 1% once a month.

According to GitLab, developers spend only 25% of their time writing code, with the rest going to other tasks. This suggests that code generation isn’t the only area where AI could add value.

Other AI use cases that companies are investing in include forecasting productivity metrics, suggesting who should review code changes, summarizing code changes or issue comments, generating tests automatically, and explaining how a vulnerability could be exploited.

Currently, the most popular AI use cases in practice are using chatbots to ask questions in documentation (41% of respondents), automated test generation (41%), and summarizing code changes (39%). Although they aren’t using them yet, 55% of respondents are interested in code generation and code suggestions, which ranked as the top area of interest among developers.

Many developers also worry about job security when thinking about the impact of AI. Fifty-seven percent of respondents fear AI will “replace their role within the next five years.”

Job replacement wasn’t the only worry: 48% of respondents are concerned that AI-generated code won’t be subject to the same copyright protections as human-written code, and 39% worry that such code may introduce security vulnerabilities.

Respondents also raised concerns about privacy and intellectual property. Seventy-two percent worry that giving AI access to private data could expose sensitive information, 48% worry about exposing trade secrets, 48% are concerned that it’s unclear where and how the data is stored, and 43% are concerned that it’s unclear how the data will be used.

Ninety percent of respondents said they would need to evaluate an AI tool’s privacy features before adopting it.

“Leveraging the experience of human team members alongside AI is the best — and perhaps only — way organizations can fully address the concerns around security and intellectual property that emerged repeatedly in our survey data. AI may be able to generate code more quickly than a human developer, but a human team member needs to verify that the AI-generated code is free of errors, security vulnerabilities, or copyright issues before it goes to production. As AI comes to the forefront of software development, organizations should focus on optimizing this balance between driving efficiency with AI and ensuring integrity through human review,” GitLab concluded.