Amazon’s public preview of Lex, the natural language processing (NLP) platform used by the Amazon Alexa digital assistant, made a splash at AWS re:Invent. With it, developers can create mobile apps, Internet of Things (IoT) devices, and chatbots that connect to Lex services, expanding Amazon’s NLP footprint beyond its own products, such as Amazon Echo. If you’re familiar with programming Alexa for the Echo, you can build apps now and easily port them to Lex at the official launch.

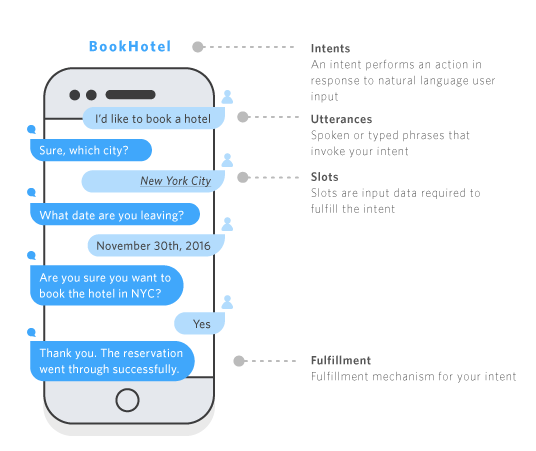

The Lex natural language processor can convert both written and spoken human language into meaningful, guided interactions. Lex captures a user’s text or speech and compares it to sample data stored on Amazon, looking to identify keywords. For instance, if I wanted to order a pizza, I might say, “I want to order a pizza,” or, “Order me a pizza.” Each request is worded differently, so Lex requires me, as the person building the bot, to provide enough sample phrases that it can recognize the request.

If it’s missing any information – such as which toppings I want – Lex can prompt me further by sending text or voice queries. Once it has everything it needs, it can act on the request by invoking Lambda services. Amazon fittingly refers to this as “fulfillment.” Once Lambda fulfills the request, Lex sends an appropriate response to the client.

The Lex API is REST-based, so it’s easy to use. It has two actions – PostContent and PostText – so the same bot logic on the Amazon side can be used to deliver text-driven or voice-driven interfaces, interchangeably. But Lex doesn’t do it all for you, and there are some limitations that can make it frustrating.
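As a rough sketch of what a text-driven client could look like, the snippet below wraps a single PostText call. It assumes the boto3 `lex-runtime` client and its `post_text` method as I understand them; the bot name, alias, and user ID are hypothetical, and `StubLexClient` is a made-up stand-in so the example runs without AWS credentials.

```python
def send_text(client, bot_name, bot_alias, user_id, text):
    """Send one line of user text to a Lex bot and summarize the reply."""
    response = client.post_text(
        botName=bot_name,
        botAlias=bot_alias,
        userId=user_id,
        inputText=text,
    )
    return {
        "state": response.get("dialogState"),  # e.g. ElicitSlot, Fulfilled
        "message": response.get("message"),    # what the bot says back
        "slots": response.get("slots") or {},  # slot values gathered so far
    }


class StubLexClient:
    """Hypothetical offline stand-in that mimics the shape of a PostText reply."""

    def post_text(self, botName, botAlias, userId, inputText):
        return {
            "dialogState": "ElicitSlot",
            "message": "What toppings would you like?",
            "slots": {"Topping": None},
        }


# With real credentials, the client would instead come from:
#   import boto3
#   client = boto3.client("lex-runtime")
reply = send_text(StubLexClient(), "PizzaBot", "prod", "user-1",
                  "I want to order a pizza")
print(reply["message"])
```

A voice-driven client would call PostContent in the same way, sending audio rather than text, while the bot definition on the Amazon side stays the same.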

How Lex parses natural language

When a user interacts with Lex, Lex gathers pieces of data from the user, which Amazon refers to as “slots.” For instance, if I want to watch a specific movie, I’ll say, “Play the movie Batman.” The collection of movies I might want to watch is stored as slot values in the Lex Console. In this case, I need Lex to understand that I want to watch the movie Batman.

As mentioned, if Lex is missing some information, it can prompt for more. In my movie example, say I have multiple televisions in my house. When I say, “Play the movie Batman,” Lex can ask, “What room do you want to play the movie in?” This makes Lex more conversational and lets users phrase the same request in multiple ways.
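Lex can issue these prompts itself based on how the slots are configured, but the same behavior can also be driven from a Lambda function. The sketch below is one way that might look, assuming the Lambda event and `dialogAction` response shapes that Lex documents for its code hooks (they may differ in the preview). The `Movie` and `Room` slot names and the prompt wording are hypothetical.

```python
# Hypothetical required slots and the question to ask when one is missing.
REQUIRED_SLOTS = {
    "Movie": "Which movie do you want to watch?",
    "Room": "What room do you want to play the movie in?",
}


def lambda_handler(event, context):
    """Ask for the first missing slot, or hand control back to Lex."""
    intent = event["currentIntent"]
    slots = intent["slots"]
    session = event.get("sessionAttributes") or {}

    for name, prompt in REQUIRED_SLOTS.items():
        if not slots.get(name):
            # Tell Lex which slot to ask for and what to say.
            return {
                "sessionAttributes": session,
                "dialogAction": {
                    "type": "ElicitSlot",
                    "intentName": intent["name"],
                    "slots": slots,
                    "slotToElicit": name,
                    "message": {"contentType": "PlainText", "content": prompt},
                },
            }

    # Every slot is filled, so let Lex decide the next step.
    return {
        "sessionAttributes": session,
        "dialogAction": {"type": "Delegate", "slots": slots},
    }
```

Given “Play the movie Batman,” the `Movie` slot arrives filled and `Room` is empty, so the handler responds by eliciting `Room`.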

This approach works well for simple tasks like ordering a pizza, because there are a limited number of sizes, crusts, and toppings I can have. It’s less successful when I have a few hundred movies to choose from: I’d need to enter each movie as a slot value. So if you’re thinking about using Lex to order from a product catalog or to work with dynamically changing data, Lex can’t do that effectively yet.

This is where utterances come in

Utterances are important because they give Lex an understanding of how a user may ask questions. The more utterances you have for an action, the better Lex can respond. For example, I may say, “Play a movie for me,” or be more specific and say, “Play the movie Hunt for Red October in the living room.”

Conversely, my son may say, “Can you play me a video?” My son and I both want to do the same thing, but Lex needs to be trained to understand that. This means I need to program some sample phrases, known as “utterances,” into Lex to help it understand. Both the specific phrases and the ones that need more information must be added in the Lex Console so Lex can understand all the permutations of a command.
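To make the idea concrete, here is a small, purely illustrative helper for reviewing a set of sample utterances before entering them. It assumes slots are marked in utterances with curly braces, as in `{Movie}`; the slot names are hypothetical. It does not imitate how Lex matches speech. It only counts how many sample phrases mention each slot, which can reveal slots that are rarely exercised.

```python
import re

# Sample phrases for a hypothetical "PlayMovie" action, from vague to specific.
SAMPLE_UTTERANCES = [
    "Play a movie for me",
    "Can you play me a video",
    "Play the movie {Movie}",
    "Play the movie {Movie} in the {Room}",
]


def slots_in(utterance):
    """Return the slot names referenced in one sample utterance."""
    return re.findall(r"\{(\w+)\}", utterance)


def slot_coverage(utterances, slot_names):
    """Count how many sample utterances mention each slot."""
    return {
        name: sum(name in slots_in(u) for u in utterances)
        for name in slot_names
    }


print(slot_coverage(SAMPLE_UTTERANCES, ["Movie", "Room"]))
# → {'Movie': 2, 'Room': 1}
```

The first two phrases carry no slots at all, so they are the ones for which Lex would have to prompt for everything.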

Finally, Lex needs to connect to something useful, and Lex’s entry point to backend services is Lambda. Lambda provides the integration logic for Amazon’s slew of backend services, as well as connectivity to third-party and enterprise services. In the pizza example, orders can be stored in DynamoDB or sent to an order delivery web service. In my movie example, Lex might communicate directly with an exposed web service. Still, this design has its limitations.
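A fulfillment function for the pizza example might look roughly like the sketch below. It assumes the same Lambda event shape Lex documents for code hooks and a `Close` response to end the conversation. The slot names are hypothetical, and the in-memory `ORDERS` list is only a stand-in for DynamoDB or an order delivery web service.

```python
ORDERS = []  # stand-in for a real order store such as a DynamoDB table


def close(session_attributes, content):
    """Build a response that tells Lex the request has been fulfilled."""
    return {
        "sessionAttributes": session_attributes,
        "dialogAction": {
            "type": "Close",
            "fulfillmentState": "Fulfilled",
            "message": {"contentType": "PlainText", "content": content},
        },
    }


def lambda_handler(event, context):
    """Record the pizza order described by the filled slots."""
    slots = event["currentIntent"]["slots"]
    order = {
        "size": slots.get("Size"),
        "crust": slots.get("Crust"),
        "topping": slots.get("Topping"),
    }
    ORDERS.append(order)  # a real function would write to the backend here
    message = "Thanks, your {size} {crust} pizza with {topping} is on its way.".format(**order)
    return close(event.get("sessionAttributes") or {}, message)
```

Lex then relays the message in the `Close` response back to the client as text or speech.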

While Lex is a great tool, it still takes work to get it connected to your apps. Lex would benefit greatly from the ability to create dynamic slots by storing slot data in DynamoDB. And as with a web or mobile user experience, it’s important to think about how users will engage with your bot and how they will ask it to do things. With the growing popularity of the Amazon Echo and voice-driven bots, it’ll be interesting to see where this technology goes.