There are a number of reasons why companies avoid automated UI testing for their mobile apps. Many are new to mobile development and are in a sort of Maslow’s mobile hierarchy of needs, in which perceived survival depends on just getting the app working, and things downstream in the workflow seem like luxuries. Even teams that have reached self-actualization with automated testing for Web development may be unfamiliar with the unique set of tools and processes mobile testing demands.

In fact, even the most sophisticated development organizations often rely solely on manual testing for mobile, or do not test at all. According to a 2014 Xamarin survey, just 13.2% of mobile developers perform automated UI testing, and according to a Forrester Research survey, only 53% of developers perform even a cursory test on a single device.

Here are five of the most common reasons mobile teams have not yet automated mobile app testing, and why each of those reasons just doesn’t hold up.

Myth 1: Speed. “We can’t take the time to automate.”

In 2014, vendors introduced nearly 7,000 new types of Android devices, as well as nearly 10,000 mobile-specific APIs. Mobile applications change quickly and ship faster. With QA in constant crunch mode, there just isn’t time to create test scripts and keep them in sync with features that change every day.

Reality: “You’re wasting time right now.” It’s true: Manual testing can be faster than automated testing, but only on the very first test run. On subsequent runs, any marginal benefit manual testing might have brought erodes almost immediately.

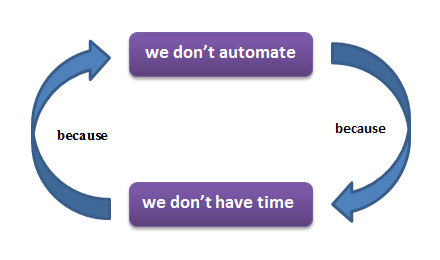
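
The trade-off between a one-time automation setup and a recurring manual effort can be made concrete with a little arithmetic. The sketch below is illustrative only: the function names and the hour figures in the usage lines are assumptions chosen for the example, not data from the surveys cited in this article.

```python
import math


def cumulative_hours(runs, hours_per_run, setup_hours=0):
    """Total hours spent after `runs` test runs, including any one-time setup."""
    return setup_hours + runs * hours_per_run


def break_even_run(setup_hours, manual_hours_per_run, automated_hours_per_run):
    """Return the first run at which cumulative automated time is no greater
    than cumulative manual time, or None if automation never catches up."""
    saving_per_run = manual_hours_per_run - automated_hours_per_run
    if saving_per_run <= 0:
        return None  # automation saves nothing per run, so setup is never repaid
    return max(1, math.ceil(setup_hours / saving_per_run))


# Hypothetical figures: 40 hours to write scripts, 10 hours per manual run,
# 1 hour per automated run.
print(break_even_run(40, 10, 1))      # 5
print(cumulative_hours(5, 10))        # 50 hours of manual testing after 5 runs
print(cumulative_hours(5, 1, 40))     # 45 hours of automated testing after 5 runs
```

With these made-up inputs, automation is slower for the first four runs and ahead from the fifth run onward. Plugging in a team's own estimates shows how quickly the setup cost is repaid.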

With every repeated test run or feature addition, app developers must either add testing resources or scale back test scope. With finite budgets, this will ultimately lead to a vicious cycle of diminishing quality. In response to negative user reviews and engagement data from untested devices, teams will want to expand device coverage. This will further increase stress on a QA department that is already at capacity, as the business struggles to research, procure and maintain devices while executing tests.

Even the best-funded manual UI testing programs fall short of complete coverage. In the U.S., mobile teams need to test on 188 devices to cover 100% of market share, yet according to a 2014 Xamarin survey, the majority of development teams frequently test on 25 or fewer devices, and more than a quarter of developers target five or fewer devices.
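
One way to reason about how many devices a given coverage target implies is to rank devices by market share and add them until the target is reached. The following is a minimal sketch under stated assumptions: the function name, the device names and the share figures are all hypothetical placeholders, and real market-share data would have to come from an analytics or market-research source.

```python
def devices_for_coverage(market_share, target):
    """Pick the most widely used devices first until their combined share
    reaches `target`. Shares and target use the same unit (here, whole
    percentage points). Returns (chosen_devices, covered_share)."""
    chosen = []
    covered = 0
    ranked = sorted(market_share.items(), key=lambda item: item[1], reverse=True)
    for device, share in ranked:
        if covered >= target:
            break
        chosen.append(device)
        covered += share
    return chosen, covered


# Hypothetical market shares in percentage points (not real data).
shares = {"Phone A": 40, "Phone B": 30, "Tablet C": 20, "Phone D": 10}

print(devices_for_coverage(shares, 65))   # (['Phone A', 'Phone B'], 70)
print(devices_for_coverage(shares, 100))  # all four devices, 100
```

In a real market the long tail is much flatter than in this toy example, which is why the last few points of coverage require far more devices than the first.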

In real-world testing situations, automation can pay off almost immediately. We have seen customers accelerate their testing timeline by 4x over entirely manual testing on their very first test run when running against 50 or more devices. Subsequent runs were several times faster yet, shortening nearly a full week of testing to just a few hours.

Myth 2: Coverage. “Because of fragmentation, it’s just not possible to get broad device coverage.”

With more than 19,000 unique Android devices, and dozens of permutations of form factors and operating systems for iOS, many teams believe it is just not possible to cover the majority of the devices in a given market, so they default to testing on a handful of devices as “good enough.”

Reality: “You can have complete device coverage.” Even if you maintain a handful of devices in-house, you’re doing too much. Procuring devices is difficult, maintaining them costs time and money, and making them available to testers when and where they need them creates logistical logjams.

Gartner stated that mobile developers “must find ways to achieve a higher rate of automation to keep up with the platform spread and pace of change.” As with hosting and other functions that used to be managed internally, the path to that automation is through third-party cloud services.

Third-party cloud services can help automate the process of loading the app, executing test scripts, reporting results, and securely resetting the device back to factory standards. A subset of app tests can also run in parallel, further speeding results.
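
The idea of running the same tests on several devices at once can be sketched with Python's standard `concurrent.futures` module. This is not the API of any particular cloud service: `run_suite_on_device` is a hypothetical stand-in that returns a canned result, where a real implementation would install the app, run the scripts and reset the device.

```python
from concurrent.futures import ThreadPoolExecutor


def run_suite_on_device(device):
    """Hypothetical placeholder for one device's test run.
    A real version would load the app, execute the test scripts,
    collect results and reset the device."""
    return {"device": device, "passed": True}


def run_in_parallel(devices, run_one, max_workers=4):
    """Run `run_one` for each device concurrently.
    Results come back in the same order as `devices`."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run_one, devices))


# Hypothetical device names.
results = run_in_parallel(["Phone A", "Phone B", "Tablet C"], run_suite_on_device)
for result in results:
    print(result["device"], "passed" if result["passed"] else "failed")
```

With enough workers, total wall-clock time approaches that of the slowest single device rather than the sum of all devices, which is where the speed-up from parallel runs comes from.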

By testing on a wide range of real devices, Test Cloud allows everyone on the team to know precisely how an app will function, eliminating the guesswork typical of mobile development. For example, product managers can set minimum system requirements with justified confidence in device performance, and developers can receive objective visual confirmation of bug fixes before committing a new build, regardless of when and where they work.

Myth 3: Cost. “We can only afford manual testing.”

Automated testing requires infrastructure, test script creation, and a learning curve for QA staff. Many teams are already struggling to hit deadlines and are already over budget, so automated testing seems out of reach.

Reality: “Manual testing only saves money if you sacrifice coverage.” Manual testing only seems inexpensive in the most bare-bones environments. If testing involves only a quick “gut check” of basic functionality on a few devices, manual testing seems like a bargain. But anything resembling comprehensive device and test coverage will quickly make manual testing much more expensive than automation.

Manual testing can only scale by adding more bodies, and those costs are not truly linear. Scaling up staff to meet demand brings tremendous overhead in the form of training and coordination, and dividing test cases among more people reduces the efficiency of each tester, because no one retains a view of the whole application. Additionally, testers who are sophisticated enough to dig beyond basic user behavior and to anticipate and explore the reasons why an application might fail are neither cheap nor plentiful.

Automated testing will always require somewhat more overhead during the initial setup, but as shown above, it can produce dramatic gains in test speed and a corresponding reduction in staffing within days. Cloud-based test environments have further reduced costs by eliminating expensive and under-utilized on-premises test infrastructure.

Myth 4: Consistency. “Good enough will have to do.”

For many test teams, “ready to deploy” is a subjective decision, built on the perceptions of many different manual testers. These teams understand that this means bugs will fall through the cracks, but they expect overlapping test coverage to catch the most critical and common issues before release, and they assume the rest of the bugs can wait for a maintenance release.

Reality: “Quality is not qualitative.” Production-readiness should not be a matter of opinion. In purely manual environments, perception varies from test to test and tester to tester, leading to erratic test results and inconsistent documentation. This complicates decisions regarding product readiness, which could lead to lost revenue, customer disenchantment, or compliance failures. Additionally, it creates pockets of uncaptured tribal knowledge that are lost when employees walk out the door. Automation creates a quantifiable metric that can serve as an objective source of truth to inform decisions about product readiness, chart team progress, and justify business decisions.

Myth 5: Reluctance. “Automation replaces manual testing.”

Many developers enter into test automation expecting that they can replace their manual testers with machines. If test automation can repeat the same test thousands of times with complete accuracy, why would you need humans except to automate test scripts for machines to follow?

Reality: “Automation makes manual testers better.” Humans and machines are good at very different things. Manual testers will always be able to test more creatively, and automation frees them to do so. While humans look for new ways to break an app, automation can ensure compliance across a wide range of devices, from unit tests to full regression tests. And the two approaches needn’t work in isolation. Performing manual exploratory tests while back-end systems are under load from automated testing is an excellent way to discover errors that could crop up in production environments. Automated testing doesn’t replace human testers. It lets them do more interesting, rewarding work.

Better speed, coverage, cost and consistency add up to improved quality. Saving time and money means you can test more, not less, when you reach critical milestones. It allows testing to keep pace with agile development teams rather than standing in the way, so companies can release code more frequently and reduce the number and impact of defects in any given build. That means developers are working with cleaner code, and bug fixes are dramatically less complicated. It also frees testers from acting solely as gatekeepers to focus on creative, exploratory testing that improves product quality.