Buzzwords come and go from the software development industry. Some, like “agile” and “test-driven development,” have stood the test of time. Others, like “SOA” and “LISPy everything,” haven’t fared as well. But “Big Data” as a buzzword, and as a quantifiable problem, is unique in the world of buzz.

Service-oriented architecture, agile development, and indeed most other development-related buzzwords, are prescriptive: SOA and agile are both solutions to the classic problem of software development, that is, not getting enough work done. But Big Data as a buzzword is not a solution. It’s a representation of a problem, and if your company does not have that problem now, it assuredly will soon.

Emil Eifrém, CEO of Neo Technology (a producer of the Neo4j graph database), said that Big Data is here to stay. “First, Big Data is not a fad. We see it every day,” he said. “You’ve all seen a gazillion presentations and analyst reports on the exponential growth of data. Supposedly, all new information generated this year will be more than all the data generated by humanity in all prior years of history combined.”

So, clearly, there’s plenty of data out there to deal with. But the Big-Data problem, as it were, isn’t just about having that big stack of information. It’s about juicing it like a pear for the sweet nectar of truth that awaits inside.

Big Data is about figuring out what to do with all that information that comes pouring out of your applications, your websites and your business transactions. The logs, records and details of these various systems have to go somewhere, and sticking them into a static data warehouse for safekeeping is no longer the way to handle the problem.

Instead, vendors, developers and the open-source community have all designed their own solutions to the problem. Of these, the Apache Hadoop Project is the most popular, though it is not the only option. Since its creation in 2005, Hadoop has grown to become the busiest project in the Apache Software Foundation’s retinue.

The reason for this popularity is that Hadoop solves two of the most ornery Big-Data problems, storage and processing, right off the bat: It combines an implementation of the MapReduce algorithm with a distributed file system known as HDFS. As a cluster environment, Hadoop can take batch-processing jobs and distribute them across multiple machines, each of which holds a chunk of the larger data picture.
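To make that division of labor concrete, here is a minimal single-process sketch of the map, shuffle and reduce phases in Python. It does not use Hadoop's own API. The function names (`map_chunk`, `shuffle`, `reduce_counts`, `word_count`) and the sample chunks are illustrative assumptions, with each list entry standing in for a block of data that HDFS would hold on a separate machine.

```python
from collections import defaultdict


def map_chunk(chunk):
    """Map phase: emit a (word, 1) pair for every word in one chunk."""
    return [(word.lower(), 1) for word in chunk.split()]


def shuffle(mapped_pairs):
    """Shuffle phase: group every emitted value under its key."""
    grouped = defaultdict(list)
    for key, value in mapped_pairs:
        grouped[key].append(value)
    return grouped


def reduce_counts(grouped):
    """Reduce phase: collapse each key's values into a single total."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(chunks):
    mapped = []
    # On a real cluster, each chunk would be mapped on the node that stores it.
    for chunk in chunks:
        mapped.extend(map_chunk(chunk))
    return reduce_counts(shuffle(mapped))


# Hypothetical data: two chunks of one larger file.
chunks = ["big data is big", "data is everywhere"]
counts = word_count(chunks)
```

The point of the pattern is that `map_chunk` needs nothing but its own chunk, so the work can be sent to wherever the data already lives instead of moving the data to the work.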

Facebook often touts its Hadoop cluster as an example of success, citing its size of over 45PB as a sign that Hadoop can handle even the largest of data sets. But there are other signs that point to the increasing power, relevance and appeal of Hadoop. First of all, there are now three major Hadoop companies, with more popping up every day. Outside of the dedicated ISVs, analytics firms and major software vendors are also building connectors to Hadoop and its sub-projects.

Why all the enthusiasm for Hadoop? Because there’s no alternative at the moment if you have to deal in the petabyte range. Below that threshold a number of other solutions are available, but even vendors have realized that no matter how robust their solutions are, Hadoop integrations can only make them better.

John Bantleman, CEO of RainStor, said that Hadoop really became relevant around two years ago. “Go back two years, and the data management landscape was all [online transaction processing] relational databases, like Oracle,” he said. “Once you start to hit velocity and volume, you’ll generate hundreds of terabytes of data and billions of records a day. Your general row-based relational database tops out. That’s forcing customers to look at alternative solutions.

“Part of what’s available in the market is data-warehousing technology in products like Teradata. Those do scale to petabytes, but they’re extremely costly. They cost hundreds of millions of dollars to put that infrastructure in place. Hadoop has a cloud-like infrastructure, which allows you to manage that data at that scale at a fraction of the cost.”

And despite the fact that RainStor essentially competes with Hadoop, the company is still offering its products in forms that work on top of or in conjunction with Hadoop. So while RainStor’s bread and butter is storing large amounts of data in its highly compressed database, it is also able to perform analytics across a Hadoop cluster instead of inside itself.

“My view is Hadoop is going to be a platform, kind of like Linux is a platform,” said Bantleman. “It’s going to be a management system to manage Big Data. Generally, you have to build the stack out to meet enterprise requirements.”

Horton Hears a 2.0

Hortonworks (a producer of supplemental Hadoop software) is working to do just this: turn Hadoop into a job-scheduling, cluster-management system, rather than just a vehicle for MapReduce. As the company charged with maintaining the Apache Hadoop Project and driving innovation in it, Hortonworks is focused on two things: training, and improving open-source Hadoop.

On the other side of the coin, Cloudera is the commercial services and products company for Hadoop. It offers its own distribution of Hadoop, coupled with management tools to make cluster control simpler.

But it is Hortonworks that is thinking most heavily about the next version of Hadoop. Dubbed version 2.0 by some, this next edition will include complete rewrites of many aspects of the system.

Shaun Connolly, vice president of corporate strategy at Hortonworks, said that Hadoop 2.0 will be about updating some of the lagging portions of the project to be more in line with modern needs.

“Today’s MapReduce is a job-processing architecture and a task tracker,” he said. “The Hadoop 2.0 effort really has been focused on separating the notions of the MapReduce framework paradigm from the resource-management capabilities of the platform, and generalizing the platform so MapReduce can just be one type of application framework that can run on the platform.”

That means Hadoop 2.0 is poised to be more like a data center operating system than simply a MapReduce bucket and scheduler. “Other types of applications we can foresee coming would be message-passing interface applications, graph-processing applications, stream-processing systems, those types of things,” said Connolly. “At the end of the day, they begin to open up Hadoop. We view Hadoop as a data platform, and for the data platform to continue to be relevant, it needs to open itself up to other work cases and to effectively store the data across larger clusters.”

HDFS too is receiving an update in Hadoop 2.0, he said, and will become highly available in the next major release. Making the file system highly available will vastly improve the performance of HBase, the in-Hadoop database framework. HBase has been slowly evolving to become fast enough for front-line usage, with the ultimate goal being to allow Hadoop to run all the data for your sites, not just the long-term storage.

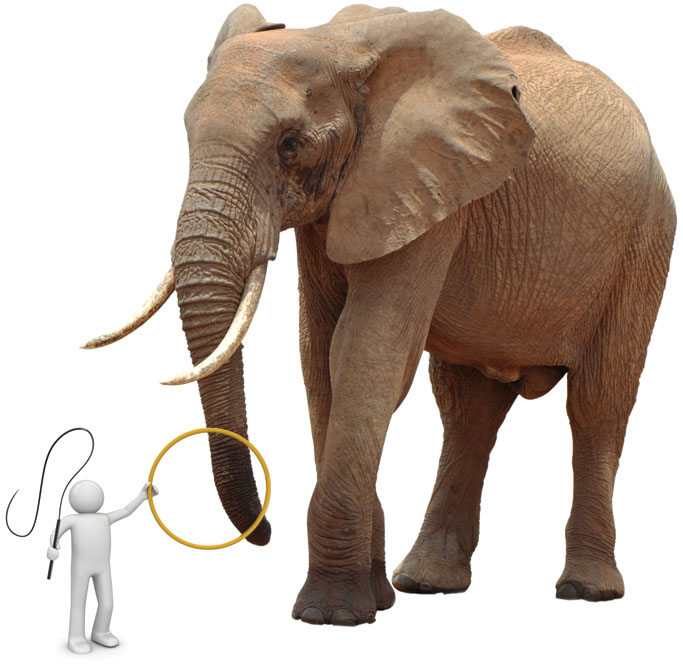

Not the only elephant in the room

While Hadoop is clearly where the excitement is for the open-source Big-Data community, it’s not the only option out there. Big-Data solutions come in many shapes and sizes, and the ability to couple them with Hadoop makes them even more relevant.

One of the popular use cases for Hadoop right now is as a scum filter: All data is poured into the unstructured Hadoop data store, and jobs are then written to winnow down that data set to a manageable size—say a few gigabytes.

Those gigabytes of filtered data are then ready to be processed in more-traditional business intelligence and analytics platforms, many of which also have Hadoop connectors of their own.
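The winnowing step described above can be sketched as a streaming filter. This is a plain-Python illustration, not a Hadoop job: the log layout (timestamp, level, message), the sample lines and the `parse` and `winnow` helpers are all assumptions made up for the example.

```python
def parse(line):
    """Split a raw line into a record, or return None if it is malformed."""
    parts = line.strip().split(" ", 2)
    if len(parts) != 3:
        return None
    timestamp, level, message = parts
    return {"timestamp": timestamp, "level": level, "message": message}


def winnow(lines, keep):
    """Yield only the records that pass the predicate, one at a time.

    Working as a generator keeps memory use flat however large the input is.
    """
    for line in lines:
        record = parse(line)
        if record is not None and keep(record):
            yield record


# Hypothetical raw input standing in for a much larger unstructured store.
raw_lines = [
    "2012-05-01T10:00:00 INFO user login",
    "2012-05-01T10:00:01 ERROR payment failed",
    "garbage",
    "2012-05-01T10:00:02 INFO page view",
    "2012-05-01T10:00:03 ERROR timeout contacting inventory",
]

errors = list(winnow(raw_lines, lambda record: record["level"] == "ERROR"))
```

Here `errors` is the small, structured result that could be handed to a conventional analytics tool, while the malformed and uninteresting lines never leave the filter.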

But the current state of analytics platforms is in flux due to this major shift in focus away from expensive data warehouses and toward commodity-priced hardware running Hadoop.

That’s echoed by George Mathew, president and COO of Alteryx, an analytics platform, which was recently integrated with Hadoop through a new Apache Hive-based connector.

“For our Hadoop driver, we have a rough ODBC connector to Hive,” he said. “We did that with the 7.0 release, and released the connector jointly with MapR. The ability to take pre- or post-MapReduce functions and bring that onboard into a SQL-like expression, and then basically join that against more structured data that might be in a more traditional data warehouse, is very powerful. That’s where we see the current movement, particularly the whole volume side of things.”

MapR, too, has been busy building on top of Hadoop. The MapR distribution of Hadoop includes many next-generation features that have not even made it off the drawing board at Apache, said Jack Norris, vice president of marketing at MapR.

“We looked at Hadoop early on, and basically determined that this would be a very strategic analytic data store for organizations both large and small,” he said. “We made some deep integrations to make it reliable, and to provide the data protection and provide full high availability: all the features and capabilities you expect in other enterprise applications. It’s leaps and bounds ahead of where Apache is, and where it plans to be in the future.”

Running jobs on top of Hadoop is no longer just about Java, the SQL-like Hive or the ETL-like Pig. Thanks to hard work from Revolution Analytics and the community around the open-source language R, that popular analytic programming language can now be used to write map/reduce jobs for a Hadoop cluster.

David Smith, vice president of marketing and communications at Revolution Analytics, said that R is great for sifting through giant piles of data.

“The basic use case that I have seen with this is to think of Hadoop as this massive unstructured data store that can read data from all sorts of formats,” he said.

“R shines at the distillation and refinement process for that data. You can use it to convert that data in Hadoop into something that’s structured that you can then apply statistical analytics to. You do that distillation process with R in Hadoop, and you generate another large, but not as large or unstructured, data set. You can then do regressions and an exploratory analysis.”

Simple on Big

MapR’s Norris said that one of the biggest appeals of Hadoop and other Big-Data systems is that algorithmic analysis of big data sets is wildly simpler than it is on smaller sets of data. While there are some who quibble with this theory, he said that “Simple algorithms on big data outperform complex models. There was an article about this from Google. They used scene completion: They take a picture, and they want to take an element out and fill the background back in. On a corpus of thousands of data examples, it didn’t work, but the same algorithm with a million samples in the corpus worked well.”

But simple is not the only option. There is a bit of a valley between the simple and the complex in Big Data, and that second slope upward begins where predictive analytics and machine learning begin.

In fact, Hadoop is ripe for machine learning. Though Apache Mahout (the Hadoop machine-learning library project) is not as mature as commercial offerings, it has been expanding and evolving apace with the rest of Hadoop.

Jacob Spoelstra, head of R&D at Opera Solutions (a producer of predictive-analytic software), said that predictive analytics and machine learning are extremely powerful for business, but the trick is figuring out how much such capabilities are worth from a development standpoint.

“There are things like the Netflix challenge, where Netflix held a contest to rewrite its recommendation algorithm,” he said. “Sure you have lots of data, and maybe a fairly standard approach to achieve an improvement over what you were doing before, but the question is how much is that further improvement worth to you? Is that change worth a few million dollars?

“To some extent, that is the promise of machine learning, and that is what Opera does. We differentiate from predictive analytics by saying we’re machine learning. The machine responds to changes over time. It’s about using feedback to help you make predictions of whether someone will purchase an item, and incorporating that back to your algorithm and adjusting the parameters of your statistical model so next time you will make a better prediction. It’s continuously improving your performance over time. That will become more important as time moves on.”
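The feedback loop Spoelstra describes can be illustrated with a small online learner. The sketch below uses logistic regression updated one observation at a time, which is a standard technique chosen here for illustration and not a description of Opera Solutions' software. The class name `OnlinePurchaseModel`, the two features and the training data are all hypothetical.

```python
import math


class OnlinePurchaseModel:
    """Logistic regression whose parameters are adjusted after every outcome."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, features):
        """Return the estimated probability that the customer buys."""
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, features, purchased):
        """Feed back one observed outcome (1 = bought, 0 = did not buy)."""
        error = purchased - self.predict(features)
        self.bias += self.learning_rate * error
        self.weights = [
            w + self.learning_rate * error * x
            for w, x in zip(self.weights, features)
        ]


# Hypothetical features: [viewed_item_before, arrived_from_ad].
model = OnlinePurchaseModel(n_features=2)
before = model.predict([1.0, 0.0])

# Simulated feedback: repeat viewers buy, ad arrivals do not.
for _ in range(200):
    model.update([1.0, 0.0], 1)
    model.update([0.0, 1.0], 0)

after = model.predict([1.0, 0.0])
```

Each call to `update` nudges the parameters toward the outcome that was actually observed, so the next prediction for a similar customer moves in the right direction without retraining the model from scratch.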

As machine learning and predictive analytics become more commonplace, they may help to alleviate what is, perhaps, the most difficult problem for Big Data: a lack of talent.

Patrick Taylor, CEO of Oversight Systems (a Big-Data analysis and workflow company), said there are not enough data scientists to go around, and that there may not be for some time. “I saw a really interesting article from McKinsey where they were talking about Big Data, and one of the things they cited was that we’re headed toward a shortage of analysts, data scientists and whatnot,” he said.

“That’s the problem we solve for people. There are a lot more people who could use the valuable insights than there are people who know how to get them. What’s emerging are software platforms like ours that are basically in the business of putting those ‘ah has’ to work every day.”

Taylor likened Big-Data strategy to the “Moneyball” strategy employed by the Oakland Athletics baseball team. “In ‘Moneyball,’ there are two steps. The part everybody remembers is where they were really clever about how they assembled a team on a budget. They looked for new and innovative analysis of what makes a good first baseman. That’s the traditional strategic planning side of what we’ve done with analytics. But they didn’t start winning until they applied those same insights into how they played the game. That’s what you’re talking about here. It’s going to take both of those together, and I have to put the results from analysis to work in my day-in, day-out business,” he said.

Other ways over Big Data

Outside of all this Hadoop fervor, there are plenty of alternative solutions to the Big-Data problem. One of those solutions had its initial public offering in late April: Splunk.

While Splunk began as a tool for analyzing log files, it has matured into a clear window from which Big Data can be observed and put through its paces. And it’s not just about operations using those logs to tweak server configurations, either.

Leena Joshi, director of solutions marketing at Splunk, said that “One of the big reasons why IT ops people put Splunk in place is to get developers off of their production box. They use the role-based access controls to make sure the data from the production boxes is available to the developer. They find it easy to see what’s going on in their own code, and if you log key value pairs, you can get deep analytics out of them.”
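Joshi's advice about logging key-value pairs is easy to picture with a short example. The sketch below is plain Python and does not use any Splunk API. The field names, the quoting rule and the `format_event` and `parse_event` helpers are assumptions for illustration only.

```python
import re
from collections import Counter

# Matches key=value, where the value is either quoted text or a bare token.
KV_PATTERN = re.compile(r'(\w+)=("[^"]*"|\S+)')


def format_event(**fields):
    """Render fields as key=value pairs, quoting values that contain spaces."""
    parts = []
    for key, value in fields.items():
        text = str(value)
        if " " in text:
            text = f'"{text}"'
        parts.append(f"{key}={text}")
    return " ".join(parts)


def parse_event(line):
    """Recover the fields from a line written by format_event."""
    return {key: value.strip('"') for key, value in KV_PATTERN.findall(line)}


# Hypothetical application events.
lines = [
    format_event(action="checkout", status="ok", latency_ms=120),
    format_event(action="checkout", status="error", reason="card declined"),
    format_event(action="search", status="ok", latency_ms=45),
]

status_counts = Counter(parse_event(line)["status"] for line in lines)
```

Because every field is labeled in the log line itself, a question such as "how many events ended in an error?" becomes a simple count over parsed fields, with no need for a custom parser per message format.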

Elsewhere, graph databases are appearing as an alternative to Hadoop and other data stores for Big Data. Neo Technology’s Eifrém said that his company’s flagship graph database offers significant insights and real-time data mining for enterprises, something that can’t be done on a slower Hadoop cluster.

“We see this huge trend toward real-time data,” he said. “One example is retail. There are a bunch of people using graph databases in retail because they want to know buying patterns and predict buying behaviors of customers while they’re in the store. Someone coming in and buying a bunch of beer and a bunch of diapers is a pattern, and if you can figure out the demographics of someone while they’re in the store, that’s when you can really target discounts and rebates and promotions. If you figure this out a day later, that’s kind of interesting, but it’s much more valuable to get that within minutes, while they’re in the store. There’s value in low-latency response time.”

But real time or not, Hadoop remains the big focus for Big Data at the moment. While alternatives exist, Revolution Analytics’ Smith said, “I definitely see Hadoop being the new hotness, if you want to put it that way. It’s groundbreaking technology, as it allows companies to store the data that heretofore they’ve let fall off the table. Now you have the capability of looking at the atomic level of your data to find out interesting things about it.”