At its Data + AI Summit, Databricks announced several new tools and platforms designed to better support enterprise customers who are trying to leverage their data to create company-specific AI applications and agents.

Lakebase

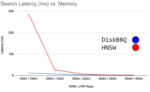

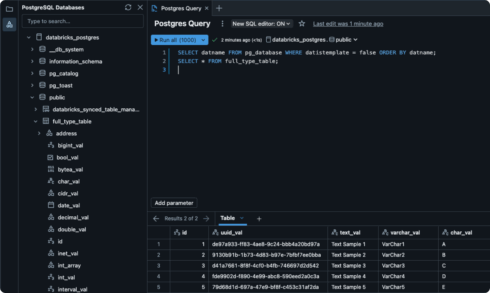

Lakebase is a managed Postgres database designed for running AI apps and agents. It adds an operational database layer to Databricks’ Data Intelligence Platform.

According to the company, operational databases are an important foundation for modern applications, but they are built on an old architecture better suited to slowly changing apps. That no longer matches reality, especially with the introduction of AI.

Lakebase attempts to solve this problem by bringing continuous autoscaling to operational databases to support agent workloads and unify operational and analytical data.

According to Databricks, the key benefits of Lakebase are that it separates compute and storage, is built on open source (Postgres), has a unique branching capability ideal for agent development, offers automatic syncing of data to and from lakehouse tables, and is fully managed by Databricks.

It is launching with several partners that provide third-party integration, business intelligence, and governance tools. These include Accenture, Airbyte, Alation, Anomalo, Atlan, Boomi, CData, Celebal Technologies, Cloudflare, Collibra, Confluent, Dataiku, dbt Labs, Deloitte, EPAM, Fivetran, Hightouch, Immuta, Informatica, Lovable, Monte Carlo, Omni, Posit, Qlik, Redis, Retool, Sigma, Snowplow, Spotfire, Striim, Superblocks, ThoughtSpot and Tredence.

Lakebase is currently available as a public preview, and the company expects to add several significant improvements over the next few months.

“We’ve spent the past few years helping enterprises build AI apps and agents that can reason on their proprietary data with the Databricks Data Intelligence Platform,” said Ali Ghodsi, co-founder and CEO of Databricks. “Now, with Lakebase, we’re creating a new category in the database market: a modern Postgres database, deeply integrated with the lakehouse and today’s development stacks. As AI agents reshape how businesses operate, Fortune 500 companies are ready to replace outdated systems. With Lakebase, we’re giving them a database built for the demands of the AI era.”

Lakeflow Designer

Coming soon as a preview, Lakeflow Designer is a no-code ETL capability for creating production data pipelines.

It features a drag-and-drop UI and an AI assistant that allows users to describe what they want in natural language.

“There’s a lot of pressure for organizations to scale their AI efforts. Getting high-quality data to the right places accelerates the path to building intelligent applications,” said Ghodsi. “Lakeflow Designer makes it possible for more people in an organization to create production pipelines so teams can move from idea to impact faster.”

It is based on Lakeflow, the company’s solution for data engineers for building data pipelines. Lakeflow is now generally available, with new features such as Declarative Pipelines, a new IDE, new point-and-click ingestion connectors for Lakeflow Connect, and the ability to write directly to the lakehouse using Zerobus.

Agent Bricks

This is Databricks’ new tool for creating agents for enterprise use cases. Users can describe the task they want the agent to do, connect their enterprise data, and Agent Bricks handles the creation.

Behind the scenes, Agent Bricks will create synthetic data based on the customer’s data to supplement the agent’s training. It also uses a range of optimization techniques to refine the agent.

“For the first time, businesses can go from idea to production-grade AI on their own data with speed and confidence, with control over quality and cost tradeoffs,” said Ghodsi. “No manual tuning, no guesswork and all the security and governance Databricks has to offer. It’s the breakthrough that finally makes enterprise AI agents both practical and powerful.”

And everything else…

Databricks One is a new platform that brings data intelligence to business teams. Users can ask questions about their data in natural language, leverage AI/BI dashboards, and use custom-built Databricks apps.

The company announced the Databricks Free Edition and is making its self-paced courses in Databricks Academy free as well. These changes were made with students and aspiring professionals in mind.

Databricks also announced a public preview of full support for Apache Iceberg tables in Unity Catalog. Other upcoming Unity Catalog features include new metrics, a curated internal marketplace of certified data products, and integration of Databricks’ AI Assistant.

Finally, the company donated its declarative ETL framework to the Apache Spark project, where it will now be known as Apache Spark Declarative Pipelines.
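
For readers unfamiliar with the term, a declarative pipeline is one where the author states which datasets exist and what each depends on, and the framework works out the execution order. The sketch below illustrates that general idea in plain Python using only the standard library. It is not the Apache Spark Declarative Pipelines API or any Databricks API; the `dataset` decorator, the `run_pipeline` function, and the sample order records are all hypothetical names and data invented for this illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical registry: dataset name -> (upstream dataset names, transform function).
_REGISTRY = {}


def dataset(name, depends_on=()):
    """Declare a dataset and the upstream datasets it is derived from."""
    def register(fn):
        _REGISTRY[name] = (tuple(depends_on), fn)
        return fn
    return register


@dataset("raw_orders")
def raw_orders():
    # Made-up sample records standing in for an ingested source.
    return [
        {"id": 1, "amount": 40.0},
        {"id": 2, "amount": -5.0},
        {"id": 3, "amount": 60.0},
    ]


@dataset("valid_orders", depends_on=["raw_orders"])
def valid_orders(orders):
    # Keep only orders with a positive amount.
    return [row for row in orders if row["amount"] > 0]


@dataset("revenue", depends_on=["valid_orders"])
def revenue(orders):
    # Total the amounts of the valid orders.
    return sum(row["amount"] for row in orders)


def run_pipeline():
    """Compute every declared dataset, upstream datasets first."""
    # TopologicalSorter expects a mapping of node -> set of predecessors.
    graph = {name: set(deps) for name, (deps, _) in _REGISTRY.items()}
    results = {}
    for name in TopologicalSorter(graph).static_order():
        deps, fn = _REGISTRY[name]
        results[name] = fn(*(results[dep] for dep in deps))
    return results


results = run_pipeline()
```

The author never writes the order of the steps. The runner derives it from the declared dependencies, which is the property that distinguishes a declarative pipeline from a hand-sequenced script.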