How far does technology need to go, or how far are we going to let it go? With all the recent advancements being made to devices and software, innovation is starting to explode, and not necessarily in a good way.

A company recently launched a new platform that makes it easier for developers to add contextual awareness to their applications. That means if you are driving your car, your app can let someone who is texting you know you are driving. Or if you are running late to a meeting, your app can let your boss know, taking away the option of slipping in unnoticed, or even of stopping for donuts on the way in and using the length of the line at the donut shop as an excuse for your lateness. A good thing for the boss; for you, not so much.

If someone wants to text you but can tell you are driving, will that encourage them to wait until you are not driving to begin a text conversation? Going even further, Google recently launched Inbox, a new artificial intelligence e-mail feature that will respond to e-mails for you. This technology may be advertised as “making our lives easier,” but essentially it’s just taking us out of the equation.

People are so worried about technology taking over in a harmful way that they don’t even notice it’s taking over in a personal way. Who needs to wish Grandma a happy birthday when your phone or device will do it for you? Who needs to handpick a gift for a significant other when technology decides for you? Technology can even find you that significant other, without you having to leave your house.

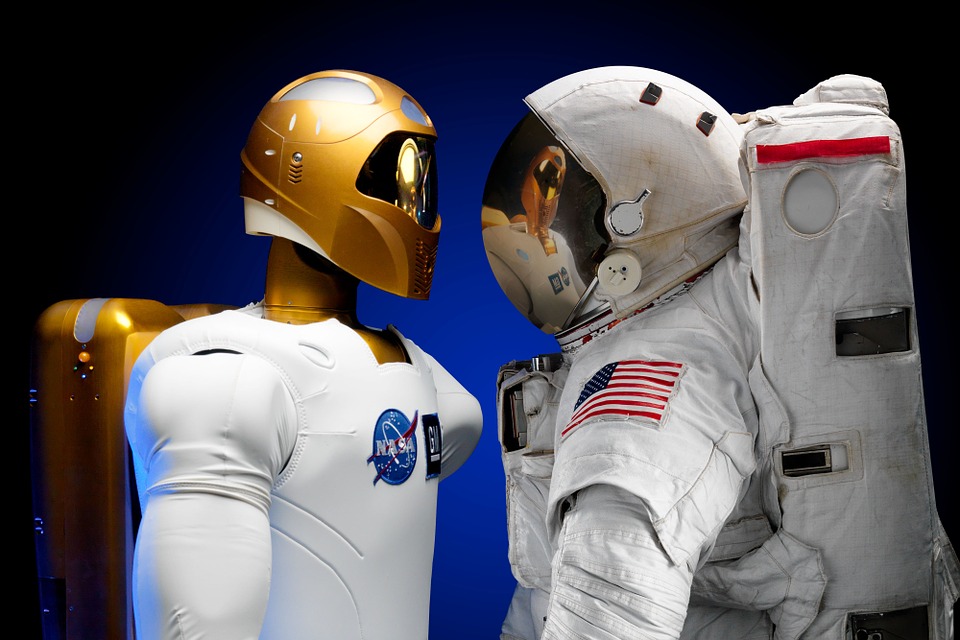

Would you trust technology to watch your kids, or take care of you when you’re sick? Some might argue that a well-placed camera can provide a caregiver with a constant view of the patient, or even that a robot caregiver would be more attentive than a human. And if the patient is hooked up to a medical device that can communicate directly with a doctor or ambulance corps when it detects an abnormality via predictive analytics, isn’t that better than waiting, say, for the patient to go into cardiac arrest before any action is taken?

At the core of everything is data. Data that organizations can collect about you can make your life better, in terms of health, leisure, work and more. But that same data can also be used by nefarious persons to harm you.

These are the social, moral and ethical questions we need to continue to discuss, bat around and try to answer as technology advances. But who should make these decisions? World leaders? The medical or legal professions? Parents? Will we always be able to “opt out,” to stay “off the grid,” so to speak? Yes, if we choose to have cars without entertainment and navigation systems, if we choose to not use smartphones, if we avoid shopping online. But does that put us at a disadvantage?

Important questions indeed. We worry about who ultimately will be making those decisions.