This week, artificial intelligence researchers announced a major breakthrough: Google’s AI system AlphaGo beat a professional human player at the ancient strategy game Go, the first time a computer program has done so.

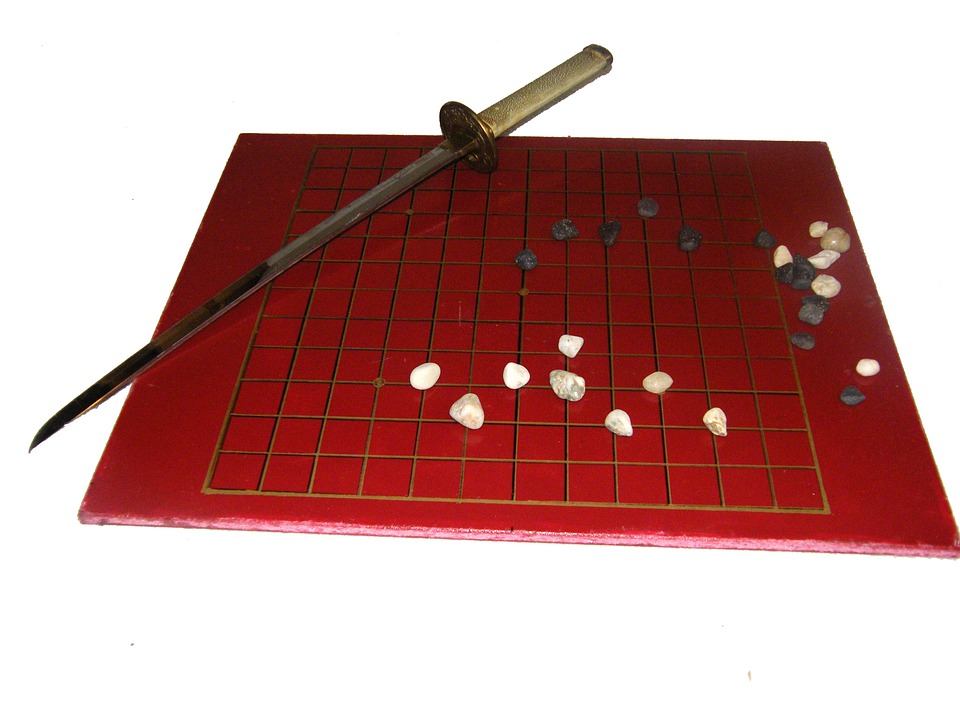

The rules of Go are simple: Players take turns placing black or white stones on a board, trying to capture the opponent’s stones or to surround empty space to make points of territory. Playing well takes concentration and a degree of intuition. Yet while the rules are simple, the number of possible positions is practically endless. According to a Google blog post, Go has more than a googol (10^100) times as many possible positions as chess.
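To get a feel for the scale, here is a rough back-of-the-envelope check in Python. It assumes a standard 19x19 board and simply counts every way to mark each of the 361 intersections as empty, black, or white. That 3^361 figure is only an upper bound, since many such configurations are not legal positions, and it is not the calculation Google used.

```python
# Each of the 361 intersections on a 19x19 board is empty, black, or white,
# so 3**361 is a simple upper bound on board configurations (many are illegal).
BOARD_POINTS = 19 * 19
GOOGOL = 10 ** 100

upper_bound = 3 ** BOARD_POINTS      # Python integers are arbitrary precision
digits = len(str(upper_bound))       # number of decimal digits

print(f"3^{BOARD_POINTS} has {digits} digits")  # 3^361 has 173 digits
print(upper_bound > GOOGOL)                     # True
```

A 173-digit number is on the order of 10^172, which already dwarfs a googol on its own, before any comparison with chess.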

That complexity is what makes Go so hard for computers, and why beating it has been such a long-standing challenge for AI. According to Google, the strongest Go programs had previously played only at the level of amateurs.


AlphaGo was developed by Google researchers in the United Kingdom. It combines Monte Carlo tree search, a heuristic search algorithm for decision-making processes, with deep neural networks.

The neural networks take a description of the Go board as input and process it through 12 network layers containing millions of neuron-like connections. One network, the “policy network,” selects the next move to play, while the other, the “value network,” predicts the winner of the game.
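How do the two networks feed into the tree search? The Python sketch below is a simplified illustration, not Google’s code. It shows a PUCT-style selection rule, similar in spirit to the one in DeepMind’s AlphaGo paper: each candidate move’s score is the average value found for it so far (standing in for value-network estimates) plus an exploration bonus weighted by the policy network’s prior. The class and function names, the move labels, the priors, visit counts, and the constant `c_puct` are all hypothetical.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Edge:
    prior: float            # P(s, a): policy network's probability for this move
    visits: int = 0         # N(s, a): times the search has tried this move
    value_sum: float = 0.0  # total of value estimates backed up through this move

    @property
    def q(self) -> float:
        # Average value of the move so far (0.0 if never visited)
        return self.value_sum / self.visits if self.visits else 0.0


def select_move(edges: dict[str, Edge], c_puct: float = 1.0) -> str:
    """Pick the move maximizing Q + U (a simplified PUCT-style rule)."""
    total_visits = sum(e.visits for e in edges.values())

    def score(e: Edge) -> float:
        # Exploration bonus: large for high-prior, rarely visited moves
        u = c_puct * e.prior * math.sqrt(total_visits) / (1 + e.visits)
        return e.q + u

    return max(edges, key=lambda move: score(edges[move]))


def backup(edge: Edge, value: float) -> None:
    """Record one simulation's value estimate on the edge it passed through."""
    edge.visits += 1
    edge.value_sum += value


# Hypothetical search statistics for three candidate moves
edges = {
    "D4": Edge(prior=0.5, visits=10, value_sum=4.0),
    "Q16": Edge(prior=0.3, visits=2, value_sum=1.2),
    "K10": Edge(prior=0.2),
}
print(select_move(edges))  # Q16
```

With these made-up numbers, Q16 is chosen: its average value (0.6) plus its exploration bonus (about 0.35) beats both the heavily explored D4 (about 0.56) and the unexplored K10 (about 0.69). The bonus shrinks as a move is visited more, which is how the search balances trusting the policy network against trying alternatives.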

The researchers trained the neural networks on 30 million moves from games played by human experts, until the AI could predict the human move 57% of the time. According to Google, the previous record before AlphaGo was 44%.
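The 57% figure is a move-prediction accuracy: show the network a position from an expert game and check whether its top-ranked move is the one the human actually played. Here is a minimal Python sketch of that bookkeeping. The function name is hypothetical, and the move probabilities are made-up stand-ins for a policy network’s output.

```python
def move_prediction_accuracy(predictions, expert_moves):
    """Fraction of positions where the top-ranked move matches the expert's move."""
    if len(predictions) != len(expert_moves) or not expert_moves:
        raise ValueError("need one prediction per expert move")
    hits = 0
    for probs, expert in zip(predictions, expert_moves):
        top_move = max(probs, key=probs.get)  # highest-probability move
        if top_move == expert:
            hits += 1
    return hits / len(expert_moves)


# Hypothetical policy outputs for four positions (move -> probability)
predictions = [
    {"D4": 0.6, "Q16": 0.3, "K10": 0.1},
    {"C3": 0.2, "R17": 0.7, "K10": 0.1},
    {"D4": 0.4, "E5": 0.5, "F6": 0.1},
    {"Q4": 0.9, "D16": 0.1},
]
expert_moves = ["D4", "R17", "D4", "Q4"]
print(move_prediction_accuracy(predictions, expert_moves))  # 0.75
```

Three of the four toy predictions match, so the accuracy is 0.75. AlphaGo’s 57% is the same kind of ratio, measured over a far larger set of expert positions.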

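The company said its main goal was to beat the best human players, not just mimic them. To do that, AlphaGo discovered new strategies for itself through reinforcement learning, a trial-and-error process in which it played thousands of games between its neural networks and adjusted their connections based on the results. This training ran on computing power from Google Cloud Platform.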
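Reinforcement learning can be hard to picture from a description alone. The Python sketch below is a deliberately tiny stand-in, not AlphaGo’s training setup: a single softmax policy chooses among three hypothetical moves with fixed, made-up win rates, and a REINFORCE-style policy-gradient update nudges it toward whichever move wins more often. AlphaGo’s actual training used self-play between neural networks at vastly larger scale; the names `WIN_PROB` and `train` and all numbers here are assumptions for illustration.

```python
import math
import random

# Hypothetical toy "game": three candidate moves, each winning with a fixed probability
WIN_PROB = [0.2, 0.2, 0.8]


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(episodes=2000, lr=0.1, seed=0):
    rng = random.Random(seed)  # seeded so runs are reproducible
    logits = [0.0] * len(WIN_PROB)
    for _ in range(episodes):
        probs = softmax(logits)
        # Trial: sample a move from the current policy
        move = rng.choices(range(len(probs)), weights=probs)[0]
        # Error signal: +1 for a win, -1 for a loss
        reward = 1.0 if rng.random() < WIN_PROB[move] else -1.0
        # REINFORCE update: gradient of log softmax w.r.t. logits is onehot - probs
        for i in range(len(logits)):
            grad = (1.0 if i == move else 0.0) - probs[i]
            logits[i] += lr * reward * grad
    return softmax(logits)


policy = train()
print([round(p, 3) for p in policy])
```

After training, nearly all of the probability sits on the third move, the one that wins most often. No one told the policy which move was best; it found out by playing and adjusting, which is the basic idea behind the trial-and-error learning described above.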

The big test came when Google held a tournament between AlphaGo and other top Go programs. According to the company, AlphaGo won all but one of its 500 games. Google then pitted it against European Go champion Fan Hui, an elite player who has been playing since the age of 12. AlphaGo won all five games, the first time a computer program has beaten a professional Go player.

Google said the next step will come in March, when AlphaGo will play a five-game challenge match in Seoul against the “legendary” Lee Sedol, considered the top Go player in the world. Google hopes the system’s methods can eventually be used for more than games, for instance to help tackle pressing problems such as climate modeling or complex disease analysis.
