End users today have been conditioned to expect a lot from their applications. They should have an amazingly easy experience, and they should be able to use all the features of the device they’re running on. Apps should be intuitive and actually help users along, by anticipating input, pre-filling form fields and guiding users to the finish of their journey, whether it’s purchasing something, using a calendar, or simply finding out a piece of information.

In fact, a huge number of consumer applications deliver this kind of experience. Unfortunately, a huge number of business applications do not. Why is that?

Creating applications for mobile devices is hard. There are different operating systems, different versions of those operating systems, and the applications themselves need to leverage the device in an application-specific way. Plus, finding skilled mobile application developers is hard, and they are expensive.

In the opinion of Praveen Seshadri, founder and CEO of no-code application platform AppSheet, “Mobile app development is a nightmare. Has been and still is, from the point of view of writing software. This is primarily due to the two dominant platforms used for mobile app development with the code for each platform being widely different. So, any successful mobile app development project requires that two codebases must be managed and continually supported.”

Among the great advances of mobile applications has been their ability to take advantage of the features of the device. Banks are leveraging the cameras in these devices to let customers photograph a check and submit it for deposit. And voice recognition programs like Siri, Cortana and Google help users engage their applications when their hands are otherwise occupied.

“A truck driver should be able to talk to his business app and say, ‘Hey show me where I’m supposed to go next,’” Seshadri said. “In business apps, every one of them is some particular domain; maybe it’s about inspection of trucks. So I should be able to say to it, ‘Show me the trucks that have damage.’ Try saying that to Siri and it’s going to barf, because it has no clue what you’re talking about. It’s not scoped down to specific domains. It’s why every app needs to have app-specific intelligence.”

Seshadri explained that if a company is capturing data about inspecting its fleet of trucks, there should be a workflow that’s kicked off when one of the trucks is damaged: an email has to go to a manager, and it needs to carry a photo of the damage as an attachment. And even when the mobile part is in place and data is being collected, the company still has to be able to produce reports every day, so management can see what’s happening. “You have to build dashboards and analytics,” he said. “And, in order to do this, you need to do auditing, because people capturing data need to worry about compliance.”
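To make the damage workflow concrete, here is a minimal Python sketch of the rule Seshadri describes: a damaged truck triggers an email to a manager with the photo attached. It is not AppSheet’s implementation. The `Inspection` record, the `build_damage_alert` function, and the email addresses are hypothetical names chosen for illustration, and the sketch only builds the message rather than sending it.

```python
from dataclasses import dataclass
from email.message import EmailMessage
from typing import Optional


@dataclass
class Inspection:
    """Hypothetical inspection record captured by a mobile app."""
    truck_id: str
    damaged: bool
    notes: str = ""
    photo_jpeg: Optional[bytes] = None  # raw JPEG bytes from the device camera


def build_damage_alert(inspection: Inspection, manager_email: str) -> Optional[EmailMessage]:
    """Return an alert email if the truck is damaged, otherwise None."""
    if not inspection.damaged:
        return None  # no damage, so the workflow is not triggered

    msg = EmailMessage()
    msg["To"] = manager_email
    msg["From"] = "inspections@example.com"  # placeholder sender address
    msg["Subject"] = f"Damage reported on truck {inspection.truck_id}"
    msg.set_content(inspection.notes or "Damage reported; no notes provided.")

    # Attach the photo of the damage when one was captured.
    if inspection.photo_jpeg is not None:
        msg.add_attachment(
            inspection.photo_jpeg,
            maintype="image",
            subtype="jpeg",
            filename=f"{inspection.truck_id}-damage.jpg",
        )
    return msg
```

In a real deployment the returned message would be handed to a mail service; a no-code platform hides this step behind a configured rule rather than code.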

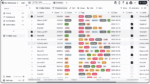

These app development challenges and the consumerization of business applications are what led AppSheet to create a no-code app dev platform five years ago, designed for use by non-developers to solve some of their own business problems. And after years of evolving the product based on feedback from its customers, AppSheet this month is introducing two new artificial intelligence-related features to its platform: optical character recognition (OCR) and predictive models to drive better outcomes.

Using the example of the bank check, Seshadri noted that not only was the camera taking the photo, but OCR under the hood could read and understand the content of the check, including the signature of endorsement. This eliminates the need to copy the content on the check and manually enter the information into a form.

“OCR and AI working together probably needed a data scientist and a whole bunch of training to get the system to learn how to read those checks,” he said. “Maybe Bank of America or Wells Fargo had the resources to do this, but shouldn’t the hundred-person company be able to deliver an app that can read labels on things? That’s the interesting dimension of no code, you can create apps without developers, and you should be able to write intelligent apps, and you shouldn’t need data scientists to do this.”

The OCR feature will be bundled with natural language processing capabilities into what AppSheet is calling its Intelligence Package.

On the predictive modeling side, AppSheet has added features that perform a statistical analysis of users’ app data to make predictions about future outcomes. Each predictive model is powered by a machine learning algorithm that learns to generalize from historical data. One example is categorizing customer feedback, based on past examples of feedback and the categories to which they belong. Another is predicting customer churn, using records of customers and whether they have ended their relationship. A third is estimating the cost of a project, given examples of previous projects and how much they cost.
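As an illustration of what “learning to generalize from historical data” can mean for the feedback-categorization case, the following Python sketch trains a small naive Bayes text classifier on labeled examples. The article does not say which algorithm AppSheet uses, so naive Bayes is a stand-in, and the function names and sample feedback are invented for this example.

```python
import math
from collections import Counter, defaultdict


def train(examples):
    """Count words per category from (text, category) pairs."""
    word_counts = defaultdict(Counter)  # category -> word frequencies
    doc_counts = Counter()              # category -> number of examples
    for text, category in examples:
        doc_counts[category] += 1
        word_counts[category].update(text.lower().split())
    return word_counts, doc_counts


def predict(model, text):
    """Return the most likely category for new text (naive Bayes, add-one smoothing)."""
    word_counts, doc_counts = model
    vocab = {w for counts in word_counts.values() for w in counts}
    total_docs = sum(doc_counts.values())
    best_category, best_score = None, -math.inf
    for category in doc_counts:
        counts = word_counts[category]
        total_words = sum(counts.values())
        # Start from the log prior, then add the log likelihood of each word.
        score = math.log(doc_counts[category] / total_docs)
        for word in text.lower().split():
            score += math.log((counts[word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_category, best_score = category, score
    return best_category


# Made-up historical feedback with known categories.
history = [
    ("the app crashes when I upload a photo", "bug"),
    ("sync fails and the app crashes", "bug"),
    ("please add a dark mode option", "feature request"),
    ("would love an option to export reports", "feature request"),
]
model = train(history)
print(predict(model, "app crashes on upload"))
print(predict(model, "please add export option"))
```

The same pattern of fitting on past records and scoring new ones applies to the churn and cost examples, although cost estimation would call for a regression model rather than a classifier.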

“We’re trying to not make some esoteric thing that only data scientists and machine learning people with degrees from Stanford can understand,” Seshadri said, “but make it something that anybody can understand and consume.”

Content provided by SD Times and AppSheet