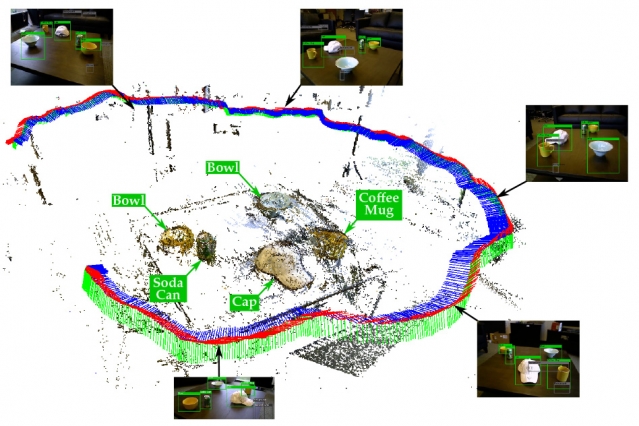

Researchers from MIT’s department of mechanical engineering are working to improve object recognition for robots. They have developed an object recognition system built on monocular (single-camera) Simultaneous Localization And Mapping (SLAM), designed to outperform traditional object recognition methods.

“The goal is for a robot to build a map, and [to] use that map to navigate,” said John Leonard, professor of mechanical and ocean engineering at MIT. “The idea with SLAM is that as you move to an environment, you combine the data from multiple vantage points and are able to estimate the geometry of the scene and estimate how you move.”

Classic object recognition systems that aren’t SLAM-aware recognize objects only on a frame-by-frame basis, according to the researchers.

“We refer to a ‘SLAM-aware’ system as one that has access to the map of its observable surroundings as it builds it incrementally and the location of its camera at any point in time,” the researchers wrote in a paper.
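
To illustrate the difference between frame-by-frame recognition and recognition that pools several vantage points, here is a minimal Python sketch. It is not the researchers' method, which the article does not detail. It assumes a SLAM front end has already associated each detection with a map object, and the names `fuse_views`, `obj-1` and the probability values are all hypothetical. It fuses per-view class probabilities by summing their logarithms, a simple scheme that treats the views as independent evidence.

```python
import math
from collections import defaultdict


def fuse_views(detections, labels):
    """Combine per-frame class probabilities for each mapped object.

    detections: iterable of (object_id, probs) pairs. object_id identifies
    the map object a detection was matched to (assumed to come from a SLAM
    front end); probs is a list of per-class probabilities.
    Returns {object_id: (best_label, fused_probability)}.
    """
    log_sums = defaultdict(lambda: [0.0] * len(labels))
    for object_id, probs in detections:
        for i, p in enumerate(probs):
            # Adding log-probabilities multiplies the evidence from each view.
            log_sums[object_id][i] += math.log(max(p, 1e-9))

    fused = {}
    for object_id, logs in log_sums.items():
        peak = max(logs)
        # Subtract the peak before exponentiating for numerical stability.
        weights = [math.exp(v - peak) for v in logs]
        total = sum(weights)
        best = max(range(len(labels)), key=lambda i: weights[i])
        fused[object_id] = (labels[best], weights[best] / total)
    return fused


LABELS = ["mug", "bowl"]
# Three hypothetical views of one object; the second view on its own is misleading.
views = [
    ("obj-1", [0.60, 0.40]),
    ("obj-1", [0.45, 0.55]),
    ("obj-1", [0.70, 0.30]),
]

# Frame-by-frame: each view is labeled in isolation.
frame_by_frame = [LABELS[p.index(max(p))] for _, p in views]
# Map-aware: all views of the same object are pooled before labeling.
fused = fuse_views(views, LABELS)

print(frame_by_frame)
print(fused)
```

In this toy example the frame-by-frame labels disagree across the three views, while the pooled result settles on a single label for the object.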

SLAM-aware object recognition will be key to robots that can provide useful information and carry out tasks in everyday situations, according to Leonard.

“We want to have the equivalent of Google for the physical world,” he said. “Google can crawl around the Internet and find Web pages and answer queries. Imagine if you had that ability for the physical world, where robots or mobile devices can find your car keys, or tell you what object you are looking at.”

Leonard said the system could also improve virtual reality experiences by keeping the virtual and physical worlds aligned.

In the future, Leonard hopes to see SLAM as an on-demand feature. “If you could just download this ability to have a physical understanding of the world from mobile sensors, that is sort of my dream,” he said.

The researchers are still investigating whether object detection can in turn aid SLAM, for instance with loop closure (recognizing that the robot has returned to a place it has already mapped). They are also working on the system's robustness in conditions such as poor lighting and bad weather.

“We believe that robots equipped with such a monocular system will be able to robustly recognize and accordingly act on objects in their environment, in spite of object clutter and recognition ambiguity inherent from certain object viewpoint angles,” the researchers wrote.