Robots can walk, talk, fly and even tell jokes, and now they are adding another special ability to that ever-increasing list: X-ray vision. Researchers at the University of California, Santa Barbara (UCSB) have developed a way for robots to see through solid walls using radio frequency signals.

“This is an exciting time to be doing this kind of research,” said Yasamin Mostofi, professor of electrical and computer engineering at UCSB. “With RF signals everywhere, we need to understand what they can tell us about our environment. Furthermore, with robots starting to become part of our near-future society, the automation of the process becomes a possibility.”

Mostofi and her team have been working on this X-ray vision for the past few years, trying to figure out how to get these robots to see objects and humans behind thick walls.

“My own background is in both wireless systems and robotics and thus putting the two together seemed like a very exciting possibility,” she said.

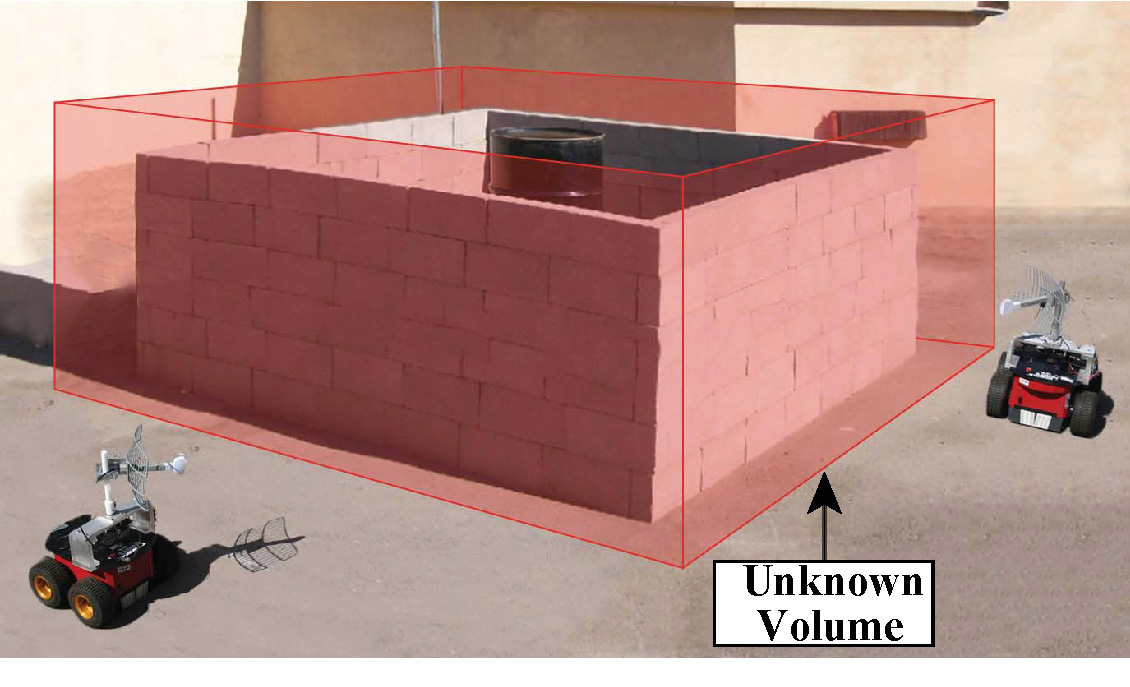

Currently, the robots work in pairs to use their superpower. One transmits a WiFi signal while the other receives it, enabling them to produce an accurate map that not only shows what’s behind a wall and where objects and spaces are positioned, but also classifies the material of each occluded object.

Researchers at MIT developed a similar technology, WiTrack, which was designed to sense the motion of a person through radio signals reflecting off their body. Mostofi notes that her technology can sense objects behind walls made of brick or concrete, and that objects do not have to be moving for the technology to detect them.

“We are constantly improving it to see more complex and cluttered areas at a high resolution,” Mostofi said. “Also, it can be implemented on any WiFi-enabled gadget such as a cellphone or a WiFi network. There are also several possible applications for it.”

Applications Mostofi hopes to see include search and rescue, surveillance, occupancy detection, object classification, archeology, robotic networks, and localization for smart environments.

“Taking it to some of these domains requires further development and research tailoring it to that specific area,” she said.

While Mostofi has high hopes for this technology, she has to overcome a few hurdles first.

“The received signal carries the information of the visited occluded objects,” she said. “However, this information is completely buried and mixed up in the received signal, meaning that extracting this information is very challenging, which is what we have been working on. It requires addressing several challenges in wave modeling, signal processing and robot navigation.”
