The Android development team is adding new features to ML Kit, which is currently used in more than 25,000 iOS and Android apps.

ML Kit is Google’s solution for integrating machine learning into mobile applications. It launched in 2018 at the Google I/O conference.

The team is introducing a new standalone SDK that, unlike the original version of ML Kit, doesn’t rely on Firebase. According to the team, users had asked for something more flexible. The new SDK includes all of the same on-device APIs, and developers can still use ML Kit and Firebase together if they wish.

According to the team, this change makes ML Kit fully focused on on-device machine learning. Its benefits over cloud-based ML include speed, the ability to work offline, and stronger privacy.

The Android development team is recommending that developers using ML Kit for Firebase’s on-device APIs migrate to the standalone SDK. Details on how to do so can be found in their migration guide.

In addition to the new SDK, the team added a number of new features for developers, such as more options for shipping models through Google Play Services. Barcode scanning and Text recognition models could already be shipped this way, and Face detection/contour models are now supported as well. Shipping through Google Play Services results in smaller app footprints and allows models to be reused across apps, the team explained.
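In practice, the choice between the two distribution options comes down to which Gradle dependency an app declares. The following is a minimal sketch, assuming a Gradle Kotlin DSL build file and the face detection artifact names from the ML Kit documentation. The version numbers are placeholders and should be checked against the current release notes.

```kotlin
// app/build.gradle.kts (sketch; declare ONE of the two options)
dependencies {
    // Option A: model bundled with the app (larger download, no extra fetch).
    implementation("com.google.mlkit:face-detection:16.0.0")

    // Option B: model delivered through Google Play Services (smaller app).
    // implementation("com.google.android.gms:play-services-mlkit-face-detection:16.0.0")
}
```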

The team has also added Android Jetpack Lifecycle support to all APIs. According to the team, this makes it easier to integrate with CameraX, which provides image quality improvements over Camera1.
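As a rough illustration of what Lifecycle support means for developers, an ML Kit client can be registered as a lifecycle observer so that it is closed automatically when its owner is destroyed. This is a minimal sketch that assumes an Android project with the barcode scanning and AppCompat dependencies; `ScannerActivity` is a hypothetical name.

```kotlin
import android.os.Bundle
import androidx.appcompat.app.AppCompatActivity
import com.google.mlkit.vision.barcode.BarcodeScanning

// Hypothetical activity used only for illustration.
class ScannerActivity : AppCompatActivity() {
    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)

        // Create a barcode scanner with default options.
        val scanner = BarcodeScanning.getClient()

        // ML Kit detectors are lifecycle observers: registering the scanner
        // lets it release its resources when the activity is destroyed,
        // instead of requiring a manual scanner.close() call.
        lifecycle.addObserver(scanner)
    }
}
```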

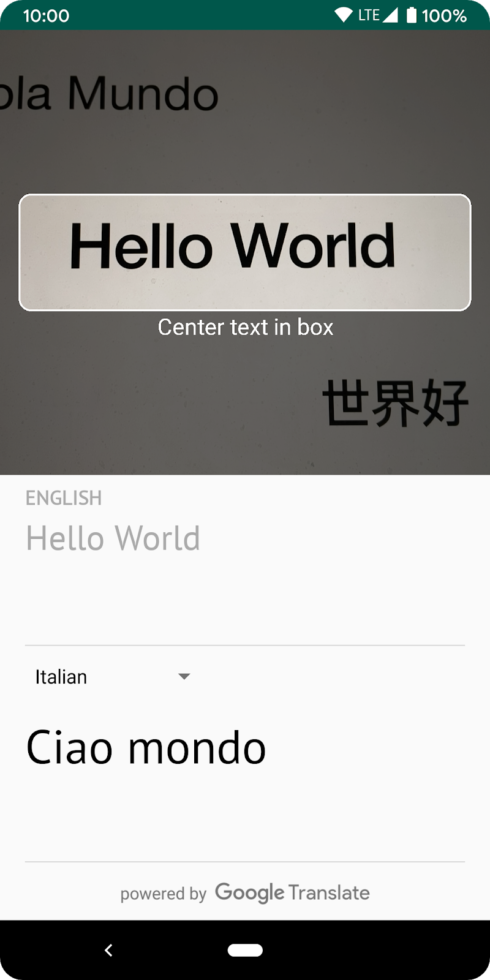

The team also introduced a new codelab to help developers get started with the CameraX integration and the new ML Kit. In the “Recognize, Identify Language and Translate text” codelab, developers learn how to build an Android app that uses ML Kit’s Text Recognition API to identify text in a real-time camera feed. The app then uses the Language Identification API to determine the text’s language and translates the text into a chosen language.
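The three-step flow described above (recognize, identify, translate) could be chained roughly as follows. This is a sketch under stated assumptions, not the codelab’s actual code: it assumes an Android project with the ML Kit text recognition, language ID, and translate dependencies, uses the current `TextRecognizerOptions` entry point, and the function name `recognizeAndTranslate` and its callbacks are hypothetical.

```kotlin
import com.google.mlkit.nl.languageid.LanguageIdentification
import com.google.mlkit.nl.translate.TranslateLanguage
import com.google.mlkit.nl.translate.Translation
import com.google.mlkit.nl.translate.TranslatorOptions
import com.google.mlkit.vision.common.InputImage
import com.google.mlkit.vision.text.TextRecognition
import com.google.mlkit.vision.text.latin.TextRecognizerOptions

// Hypothetical helper: recognizes text in an image, identifies its language,
// and translates it into targetLanguage (e.g. TranslateLanguage.SPANISH).
fun recognizeAndTranslate(
    image: InputImage,
    targetLanguage: String,
    onResult: (String) -> Unit,
    onError: (Exception) -> Unit
) {
    // Step 1: on-device text recognition.
    val recognizer = TextRecognition.getClient(TextRecognizerOptions.DEFAULT_OPTIONS)
    recognizer.process(image)
        .addOnSuccessListener { visionText ->
            val text = visionText.text

            // Step 2: identify the language; returns a BCP-47 tag or "und".
            LanguageIdentification.getClient().identifyLanguage(text)
                .addOnSuccessListener { tag ->
                    // Map the tag to a language the translator supports.
                    val source = TranslateLanguage.fromLanguageTag(tag)
                    if (source == null) {
                        onError(IllegalStateException("Unsupported language: $tag"))
                        return@addOnSuccessListener
                    }

                    // Step 3: download the model if needed, then translate.
                    val options = TranslatorOptions.Builder()
                        .setSourceLanguage(source)
                        .setTargetLanguage(targetLanguage)
                        .build()
                    val translator = Translation.getClient(options)
                    translator.downloadModelIfNeeded()
                        .addOnSuccessListener {
                            translator.translate(text)
                                .addOnSuccessListener { translated -> onResult(translated) }
                                .addOnFailureListener { e -> onError(e) }
                                // Release the translator once this request finishes.
                                .addOnCompleteListener { translator.close() }
                        }
                        .addOnFailureListener { e ->
                            translator.close()
                            onError(e)
                        }
                }
                .addOnFailureListener { e -> onError(e) }
        }
        .addOnFailureListener { e -> onError(e) }
}
```

In a real-time camera app, a function like this would typically be called from a CameraX image analyzer, with clients created once and reused across frames rather than per call.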

The Android development team also offers an early access program for developers who want to try upcoming features. Two new APIs are included in the program. Entity Extraction detects entities in text, such as phone numbers, and makes them actionable. Pose Detection can detect movement for 33 skeletal points, including hands and feet.