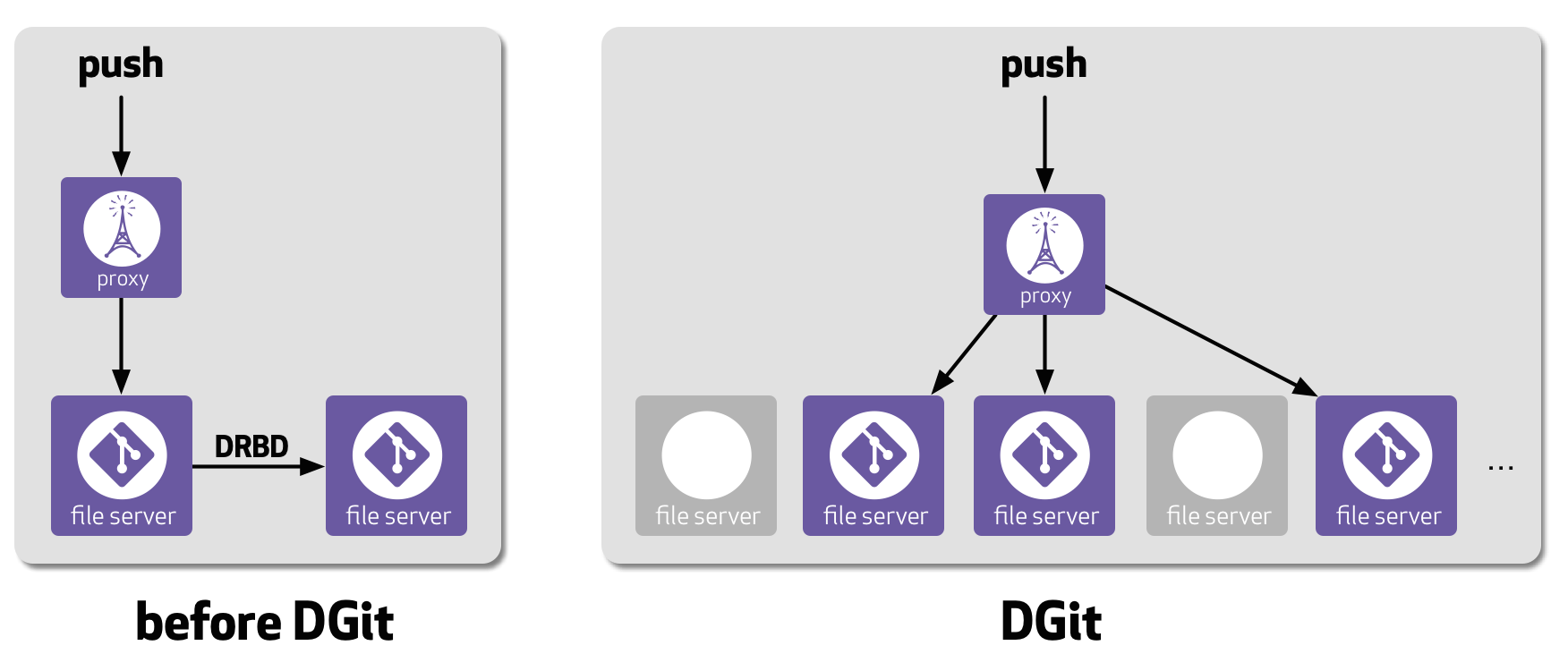

To maintain the performance and reliability of its storage system, GitHub has introduced DGit, a storage system designed to keep repositories fully available without interruption even if one of its servers goes down.

DGit stands for “Distributed Git.” Git itself is distributed, so any copy of a Git repository contains every file, branch and commit in the project’s entire history. DGit uses this property to keep three copies of every repository on three different servers. The goal is to make GitHub less prone to downtime: even in the extreme case where two copies of a repository become unavailable at the same time, the repository remains readable.


This change has been rolled out gradually over the past year as DGit was being built. One complication is that replication is no longer transparent to the application. Because every repository is now stored on three servers rather than one, DGit must implement its own serializability, locking, failure detection, and resynchronization, rather than relying on DRBD and the RAID controller to keep copies in sync, GitHub said in a blog post.

Rather than replicating identical disk images full of repositories, DGit performs replication at the application layer: the replicas are three loosely coupled Git repositories kept in sync via Git protocols, according to the company.

If a file server needs to be taken offline, DGit automatically determines which repositories are left with fewer than three replicas and creates new replicas of those repositories on other file servers. This “healing” process uses all remaining servers as both sources and destinations, without any downtime, GitHub said.
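GitHub does not publish its healing algorithm in this announcement, so the following is only a minimal sketch of the idea described above, under stated assumptions. It assumes a placement map from repository name to the set of servers holding a replica, and it assumes a simple rule of sending each new copy to the least-loaded eligible server while rotating copy sources so that every surviving server does some of the work. The function name `plan_healing` and the server names are hypothetical.

```python
from collections import Counter

REPLICA_COUNT = 3  # DGit keeps three copies of every repository


def plan_healing(placement, offline, servers):
    """Plan (repo, source, destination) copy jobs after `offline` leaves the pool.

    Hypothetical sketch, not GitHub's implementation.
    placement: dict mapping repository name -> set of servers holding a replica
    offline:   the file server being taken out of service
    servers:   every file server in the pool, including `offline`
    """
    online = [s for s in servers if s != offline]

    # Replicas each surviving server already holds; used to pick light destinations.
    load = Counter(s for hosts in placement.values() for s in hosts if s != offline)
    # How often each server has been chosen as a copy source, so sources spread out too.
    source_use = Counter()

    jobs = []
    for repo in sorted(placement):
        existing = sorted(placement[repo] - {offline})  # replicas that still hold data
        missing = REPLICA_COUNT - len(existing)
        if missing <= 0 or not existing:
            continue  # already fully replicated, or nothing left to copy from
        hosts = set(existing)
        for _ in range(missing):
            candidates = [s for s in online if s not in hosts]
            if not candidates:
                break  # pool too small to restore full replication
            dest = min(candidates, key=lambda s: (load[s], s))     # least-loaded server
            src = min(existing, key=lambda s: (source_use[s], s))  # least-used source
            jobs.append((repo, src, dest))
            hosts.add(dest)
            load[dest] += 1
            source_use[src] += 1
    return jobs
```

For example, with servers `fs1` through `fs5`, `placement = {"a": {"fs1", "fs2", "fs3"}, "b": {"fs1", "fs4", "fs5"}, "c": {"fs2", "fs3", "fs4"}}` and `fs1` going offline, the plan copies `a` from `fs2` to `fs5` and `b` from `fs4` to `fs2`, so both the reads and the writes of the healing work land on different machines. Sources are drawn only from replicas that existed before healing began, since a freshly chosen destination has no data yet.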

With DGit, each repository is stored on three servers drawn from the pool of file servers. DGit selects the servers that host each repository, keeps those replicas in sync, and picks the best server to handle each incoming read request, GitHub said. Writes are streamed simultaneously to all three replicas, and they are committed only if at least two replicas confirm success.
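The two-out-of-three commit rule can be illustrated with a short sketch. This is not GitHub's code: it assumes each replica is exposed as a hypothetical callable that returns `True` once it has applied the write, and it models "streamed at the same time" with a thread pool. How DGit later resynchronizes a replica that missed a write is outside the scope of this sketch.

```python
from concurrent.futures import ThreadPoolExecutor

QUORUM = 2  # a write commits only if at least two of the three replicas confirm


def replicate_write(replicas, update):
    """Send `update` to all replicas concurrently and decide whether it commits.

    Hypothetical sketch, not GitHub's implementation.
    replicas: dict mapping server name -> callable(update) returning True when that
              replica applied the write (an assumed interface)
    Returns (committed, sorted list of servers that confirmed).
    """

    def attempt(apply):
        try:
            return bool(apply(update))
        except Exception:
            return False  # an unreachable or failing replica counts as a "no" vote

    # One worker per replica so the write goes to all of them at the same time.
    with ThreadPoolExecutor(max_workers=max(1, len(replicas))) as pool:
        futures = {name: pool.submit(attempt, fn) for name, fn in replicas.items()}
        confirmed = sorted(name for name, fut in futures.items() if fut.result())

    return len(confirmed) >= QUORUM, confirmed
```

If `fs1` and `fs2` confirm but `fs3` is down, `replicate_write` reports the write as committed with `["fs1", "fs2"]` as the confirming servers; if only one replica confirms, the write is not committed. Requiring a majority rather than all three replicas is what lets writes keep succeeding while a single server is unavailable.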

DGit brings a few additional benefits. For one, file servers no longer have to be deployed as pairs of identical servers. Replacing a failed server used to be urgent, GitHub said, because its backup server was then running without a spare. Now, when a server fails, DGit automatically makes new copies of the repositories it hosted and distributes them throughout the cluster.

The rollout process included several steps, such as:

- Moving the DGit developers’ personal repositories first

- Moving private, GitHub-owned repositories that weren’t part of running the website

- Moving the rest of GitHub’s private repositories

- Pausing migrations for three months while GitHub tested DGit, automated DGit-related processes, wrote documentation, and fixed the occasional bug

As of yesterday's rollout, 58% of repositories and 96% of Gists (together accounting for 67% of Git operations) are in DGit, according to GitHub. The company plans to move the rest as it converts pre-DGit file server pairs into DGit servers.