AWS is trying to make it easier for developers to use machine learning through new integrations for Amazon Aurora.

According to AWS, using machine learning on data in a relational database has required building a custom application that reads the data from the database and then applies the machine learning model. This can be impractical for organizations because developing the application requires a mix of skills, and once built, the application must be managed for performance, availability, and security.

“Developers have historically had to perform a large amount of complicated manual work to take these predictions and make them part of a broader application, process, or analytics dashboard. This can include undifferentiated, tedious application-level code development to copy data between different data stores and locations and transform data between formats, before submitting data to the ML models and transforming the results to use inside your application. Such work tends to be cumbersome and a poor way to use the valuable time of your developers. Moreover, moving data in and out of data stores complicates security and governance,” Matt Asay, cloud and open source executive at AWS, wrote in a post.

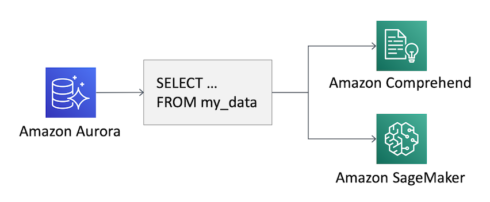

To alleviate some of that burden, AWS is integrating Amazon Aurora with Amazon SageMaker and Amazon Comprehend. This new integration will allow developers to use a SQL function to apply machine learning models to data.

Query outputs, including the added information from machine learning services, can be stored in a new table. Alternatively, they can be used interactively in an application by changing the SQL code that clients run.
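To make the pattern concrete, here is a minimal local sketch of what calling a model through a SQL function might look like. It does not use Aurora, SageMaker, or Comprehend: it assumes an in-memory SQLite database, and the function name `predict_sentiment`, the `reviews` table, and the keyword-based `toy_sentiment` model are all hypothetical stand-ins chosen for illustration. It shows both options described above, storing the enriched output in a new table and calling the function interactively from a query.

```python
import sqlite3


def toy_sentiment(text: str) -> str:
    """Hypothetical stand-in for a hosted ML model (a simple keyword rule)."""
    lowered = text.lower()
    if any(word in lowered for word in ("great", "love", "excellent")):
        return "POSITIVE"
    if any(word in lowered for word in ("bad", "broken", "terrible")):
        return "NEGATIVE"
    return "NEUTRAL"


def build_demo() -> sqlite3.Connection:
    """Create sample data and store model output in a new table."""
    conn = sqlite3.connect(":memory:")
    # Expose the stand-in model to SQL under a hypothetical function name.
    conn.create_function("predict_sentiment", 1, toy_sentiment)
    conn.execute("CREATE TABLE reviews (id INTEGER PRIMARY KEY, body TEXT)")
    conn.executemany(
        "INSERT INTO reviews (id, body) VALUES (?, ?)",
        [
            (1, "Great product, love it"),
            (2, "Arrived broken"),
            (3, "It is a phone"),
        ],
    )
    # Option 1: persist the query output, including the prediction column.
    conn.execute(
        "CREATE TABLE review_sentiment AS "
        "SELECT id, body, predict_sentiment(body) AS sentiment FROM reviews"
    )
    return conn


def negative_review_ids(conn: sqlite3.Connection) -> list:
    """Option 2: call the function interactively inside an ordinary query."""
    rows = conn.execute(
        "SELECT id FROM reviews "
        "WHERE predict_sentiment(body) = 'NEGATIVE' ORDER BY id"
    ).fetchall()
    return [row[0] for row in rows]


if __name__ == "__main__":
    demo = build_demo()
    print(demo.execute(
        "SELECT id, sentiment FROM review_sentiment ORDER BY id"
    ).fetchall())
    print(negative_review_ids(demo))
```

In this sketch the application code never copies rows out of the database to score them; the prediction is just another column in the SQL result, which is the workflow the integration is meant to enable.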

“Though we offer services that improve the productivity of data scientists, we want to give the much broader population of application developers access to fully cloud-native, sophisticated ML services. Tens of thousands of customers use Aurora and are adept at programming with SQL. We believe it is crucial to enable you to run ML learning predictions on this data so you can access innovative data science without slowing down transaction processing,” Asay wrote.